Abstract:

Movies and games have long been held to different expectations. Movies are expected to have gorgeous visual effects and picture-perfect graphics, while games have always been expected to deliver smooth, consistent gameplay. Recent advancements in real-time rendering techniques have opened the possibility of games catching up to their movie counterparts in graphical quality.

Introduction

Video games have made leaps and bounds over the last few decades, especially in graphics. In the 1990s, the video game industry was transitioning from 2D to 3D games. In 1997, Final Fantasy 7 (FF7) was released as the first 3D game in the franchise. Cloud Strife, its main character, looked like a chunky block of pixels that barely resembled his concept art. Keep in mind that FF7’s graphics were considered revolutionary at the time, with IGN reviewer Jay Boor saying that “FF7’s graphics are light years ahead of anything seen on the PlayStation” [1]. The game was even nominated for Outstanding Achievement in Graphics at the Academy of Interactive Arts & Sciences’ annual Interactive Achievement Awards [2]. Now, in the long-awaited 2020 FF7 Remake (FF7R), Cloud Strife looks like he could exist in real life in terms of graphical fidelity, and the city of Midgar, where FF7R is set, could plausibly exist in some alternate steampunk universe. Despite these advancements, video games still lag behind computer-animated films in graphical fidelity. Back in 1997, Pixar, the award-winning animation studio now owned by Disney, was already creating films such as A Bug’s Life, whose characters looked miles better than the block of pixels that was supposed to resemble Cloud. Pixar has continued to improve its graphics since then, as recent films such as Incredibles 2 and Coco show. Still, the gap in graphical quality is much smaller than it used to be. This raises the question: will games ever match films in this area? Probably not completely, but they could come close enough that the differences become negligible. Historically, the key difference between the two media has been the rendering techniques each one uses.

Figure 1: Comparison of Cloud in Final Fantasy 7 (1997) and Final Fantasy 7 Remake (2020) [15]

The Basis of Rendering

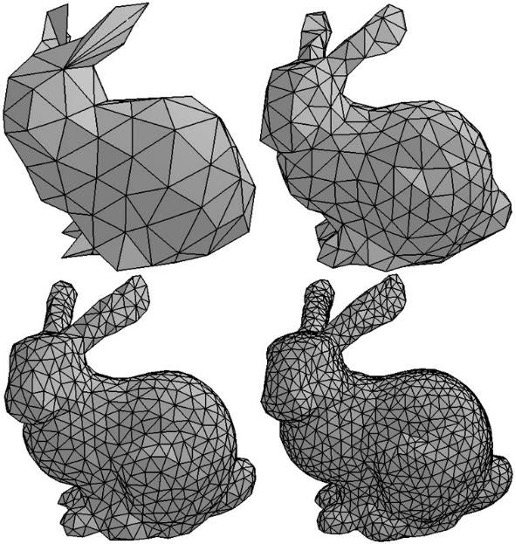

To start, it is important to know how a 3D scene is generally constructed. A 2D scene is simple enough to think about: monitors and other displays are already 2D planes, so a 2D world translates easily onto a screen. Imagining how to project a 3D world onto a screen is more complex. For example, how do you tell a computer to draw an object, otherwise known as a model, onto the screen? The answer is to represent everything with triangles, as in Figure 2.

Figure 2: A bunny at varying levels of detail represented in triangles. Available: https://cathyatseneca.gitbooks.io/3d-modelling-for-programmers/content/3ds_max_basics/3d_representation.html

Any model has many different points on its surface. A point in 3D space needs three components to describe it, one for each of the X, Y, and Z axes. Triangles are the easiest polygons to use in 3D space because any three vertices are guaranteed to be coplanar, whereas four or more vertices may not all lie on a single plane. Any polygon with more than three vertices can be broken down into several triangles [3]. Representing objects as a composition of triangles does lose some of their real-life detail, which is why artists tend to use as many triangles as technical constraints allow. The idea of using triangles to form models is the basis of rendering.
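To make the triangle idea concrete, here is a minimal Python (3.9+) sketch that stores each point as three components and splits a polygon into triangles. It uses a simple "fan" triangulation, which assumes the polygon is convex and that its vertices are listed in order around its edge. The names `Vec3` and `fan_triangulate` are illustrative and do not come from any particular engine. Concave polygons need a more general method such as ear clipping.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    """A point in 3D space: one component for each of the X, Y, and Z axes."""
    x: float
    y: float
    z: float


def fan_triangulate(polygon: list[Vec3]) -> list[tuple[Vec3, Vec3, Vec3]]:
    """Split a convex polygon into triangles that all share its first vertex.

    Assumes the vertices are ordered around the polygon's boundary and that the
    polygon is convex. An n-sided polygon yields n - 2 triangles.
    """
    if len(polygon) < 3:
        raise ValueError("a polygon needs at least three vertices")
    anchor = polygon[0]
    return [(anchor, polygon[i], polygon[i + 1]) for i in range(1, len(polygon) - 1)]


# A square (four vertices) becomes two triangles.
square = [Vec3(0, 0, 0), Vec3(1, 0, 0), Vec3(1, 1, 0), Vec3(0, 1, 0)]
for tri in fan_triangulate(square):
    print(tri)
```

Fanning from one vertex is the simplest valid split for convex shapes. Every triangle it produces is automatically coplanar, which is exactly the property the text relies on.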

Having models is not the end of the graphics pipeline for a scene. There must also be lighting, as well as instructions for how models should appear in the scene. These instructions could include which textures or images to use, which colors to apply, and which lighting interactions to include. Much of this is encompassed in what we call shading [4]. There are many ways to go about shading a scene, and these varying methods are the reason why real-time rendering in games differs from offline rendering. The most important requirement for a game is that it runs smoothly at or above a minimum number of frames per second (FPS). If the game feels choppy, no one will enjoy playing it. This restriction severely limits games in computation power and time, because each frame must be produced in milliseconds. It is akin to watching a movie downloaded onto a computer versus streaming a movie from Netflix. Once downloaded, the movie simply plays at whatever quality it was provided in, while streaming quality is usually capped lower to reduce the risk of dropping frames due to a shoddy internet connection or other issues. Offline renders, by contrast, can take as long as needed, provided they finish before the release date. For example, one frame from Pixar’s Inside Out can take 33 hours to render, so on a single machine the film would technically need over 400 years to finish rendering completely. To work around this, Pixar owns a render farm of over 2000 machines that renders the film in a reasonable amount of time [5]. That approach is unrealistic for a game, which must render every frame on the player’s own hardware while it is being played. Therefore, rendering techniques for games must take some shortcuts.
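The gap between the two time budgets is easy to quantify. The sketch below computes the per-frame budget for a game at two common target frame rates. It also computes the single-machine time for a film in which every frame costs 33 hours. The 95-minute runtime is an assumption for illustration, 24 FPS is the usual film frame rate, and applying the 33-hour figure to every frame assumes all frames are as expensive as the one quoted in [5]. The function names are hypothetical.

```python
HOURS_PER_YEAR = 24 * 365.25


def frame_budget_ms(target_fps: float) -> float:
    """Milliseconds available to produce one frame at the given frame rate."""
    return 1000.0 / target_fps


def single_machine_years(runtime_minutes: float, fps: float, hours_per_frame: float) -> float:
    """Years one machine would need if every frame took `hours_per_frame` hours."""
    frames = runtime_minutes * 60 * fps
    return frames * hours_per_frame / HOURS_PER_YEAR


for fps in (30, 60):
    print(f"{fps} FPS -> {frame_budget_ms(fps):.1f} ms per frame")  # 33.3 ms, then 16.7 ms

# Assumed runtime of ~95 minutes at 24 FPS, 33 hours for every frame.
print(f"Film on one machine: {single_machine_years(95, 24, 33):.0f} years")  # 515 years
```

Under these assumptions the result, about 515 years, is consistent with the "over 400 years" figure above. Meanwhile, a game running at 60 FPS has roughly 16.7 ms per frame.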

Figure 3: A frame from Pixar’s Inside Out (2015) that took 33 hours to render [5]

Real-Time Rendering

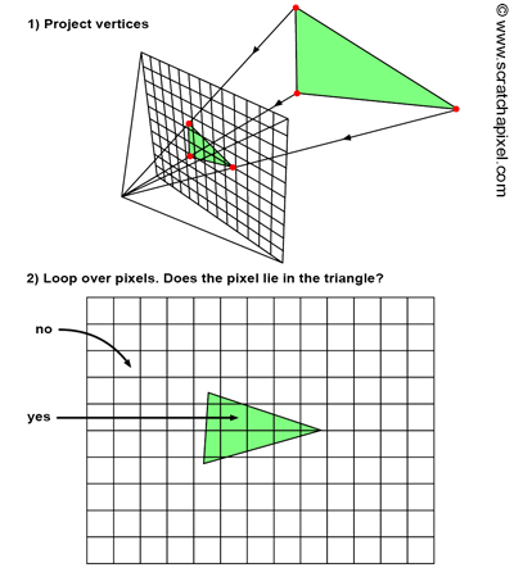

Figure 4: Representation of the rasterization process [6]

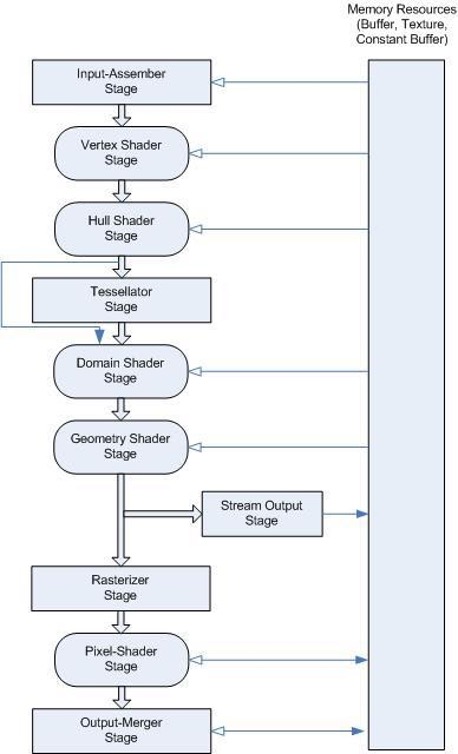

In real-time rendering for games, the technique relies on converting triangles into pixels, a process known as triangle rasterization. To produce a recognizable scene, the computer must determine the color of each individual pixel, each represented as a square in the 2D grid of Figure 4. Rasterization is object-centric: it starts by sending all the objects and their triangles through a transformation pipeline that converts them from 3D space to 2D screen space [6]. It then determines which pixels each triangle covers. DirectX 11 is a popular graphics API that interfaces with the GPU, and Figure 5 shows the graphics pipeline it uses to produce the final image. The vertex shader stage is where each vertex is transformed and projected toward 2D screen space. The rasterization stage is where the projected triangles are ‘converted’ to pixels. At the pixel shader stage, the pixels are assigned colors based on data from the scene and a variety of lighting algorithms [7]. One of the most basic lighting models is the Phong Reflection Model. To calculate the final look of an object, this model only needs data from the object, the direction of the camera, and information about the light source [8]. The immediate problem is that, in the real world, light does not simply travel in a single direction from its source to a surface and on to the viewer. It can be reflected, refracted, absorbed, and more [9]. Light rays can bounce many times, and rasterization has trouble handling the number of calculations needed to follow all of them. There are ways around this that simulate realistic lighting and shadows, such as using static lighting or pre-calculating the lighting so that the most expensive parts are not done in real time. As a result, games can still look somewhat photorealistic while saving on computational cost. Rasterization’s main advantage, therefore, is the speed at which it approximates lighting from object information.
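The two middle steps described above can be sketched on the CPU in a few lines of Python (3.9+): projecting vertices to the screen, then finding which pixels a triangle covers. This is an illustrative stand-in for work the GPU does in hardware, not DirectX 11 code. It assumes a pinhole camera looking down the -z axis, uses a `focal` scale that maps the visible view to the [-1, 1] range, and tests coverage with "edge functions" at pixel centers. Real rasterizers also perform clipping, depth testing, culling of zero-area triangles, and tie-breaking for pixels exactly on an edge, all of which are omitted here. The names `project`, `edge`, and `rasterize` are hypothetical helpers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Point2:
    """A position in 2D screen space, measured in pixels."""
    x: float
    y: float


def project(x: float, y: float, z: float, focal: float, width: int, height: int) -> Point2:
    """Perspective-project a camera-space point (camera looks down -z) to pixels."""
    # Perspective divide: points farther away (larger -z) move toward the center.
    ndc_x = focal * x / -z
    ndc_y = focal * y / -z
    # Map [-1, 1] to [0, width] and [0, height]; flip y because pixel rows grow downward.
    return Point2((ndc_x + 1) * 0.5 * width, (1 - ndc_y) * 0.5 * height)


def edge(a: Point2, b: Point2, p: Point2) -> float:
    """Signed-area test: positive on one side of edge a->b, negative on the other."""
    return (b.x - a.x) * (p.y - a.y) - (b.y - a.y) * (p.x - a.x)


def rasterize(v0: Point2, v1: Point2, v2: Point2, width: int, height: int) -> list[tuple[int, int]]:
    """Return the (column, row) of every pixel whose center lies inside the triangle."""
    covered = []
    for row in range(height):
        for col in range(width):
            p = Point2(col + 0.5, row + 0.5)  # sample at the pixel center
            w0, w1, w2 = edge(v1, v2, p), edge(v2, v0, p), edge(v0, v1, p)
            # Inside when p is on the same side of all three edges (either winding order).
            if (w0 >= 0 and w1 >= 0 and w2 >= 0) or (w0 <= 0 and w1 <= 0 and w2 <= 0):
                covered.append((col, row))
    return covered


# Project a triangle three units in front of the camera onto an 8x8 "screen".
W, H = 8, 8
tri = [project(-1, -1, -3, 1.0, W, H), project(1, -1, -3, 1.0, W, H), project(0, 1, -3, 1.0, W, H)]
inside = set(rasterize(*tri, W, H))
for row in range(H):
    print("".join("#" if (col, row) in inside else "." for col in range(W)))
```

Looping over every pixel keeps the sketch short. A practical rasterizer would restrict the loops to the triangle's bounding box and evaluate the edge functions incrementally.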

Figure 5: DirectX11’s graphics pipeline [7]
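The Phong Reflection Model mentioned above combines an ambient term, a diffuse term, and a specular term. The sketch below uses the commonly taught form of the model with a single white light and a grayscale surface. The coefficient names (`ka`, `kd`, `ks`, `shininess`) are conventional but chosen here for illustration, and a real pixel shader would evaluate this per color channel on the GPU. As the text notes, the model needs only the surface normal (object data), the direction to the camera, and the direction to the light.

```python
import math

Vec = tuple[float, float, float]


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec) -> Vec:
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def phong(normal: Vec, to_light: Vec, to_viewer: Vec,
          ka: float, kd: float, ks: float, shininess: float,
          ambient: float = 1.0, light: float = 1.0) -> float:
    """Brightness of one surface point: ka*ambient + light*(kd*(L.N) + ks*(R.V)^shininess)."""
    n, l, v = normalize(normal), normalize(to_light), normalize(to_viewer)
    l_dot_n = dot(l, n)
    diffuse = max(l_dot_n, 0.0)  # surfaces facing away from the light get no diffuse light
    specular = 0.0
    if l_dot_n > 0:
        # Mirror the light direction about the normal: R = 2(L.N)N - L.
        r = tuple(2 * l_dot_n * nc - lc for nc, lc in zip(n, l))
        specular = max(dot(r, v), 0.0) ** shininess
    return ka * ambient + light * (kd * diffuse + ks * specular)


# Light and camera both straight above the surface: full diffuse and specular.
print(phong((0, 0, 1), (0, 0, 1), (0, 0, 1), ka=0.1, kd=0.6, ks=0.3, shininess=32))
# Light behind the surface: only the ambient term remains.
print(phong((0, 0, 1), (0, 0, -1), (0, 0, 1), ka=0.1, kd=0.6, ks=0.3, shininess=32))
```

Each point is shaded from local information only. No other object in the scene is consulted, which is why the model is fast and also why it cannot capture light bouncing between surfaces.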

Offline Rendering

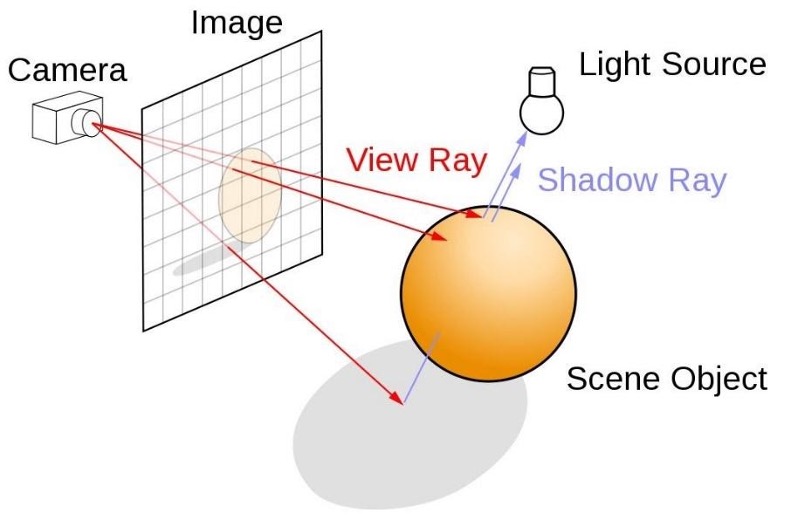

Figure 6: Representation of the ray tracing process. Available: https://developer.nvidia.com/discover/ray-tracing. [Accessed: 05-Jun-2020].

In offline rendering, the primary technique is known as ray tracing. As the name implies, this method follows rays of light through the scene to determine what to display. In the real world, light sources emit light rays, so it might seem logical to follow these rays from the source to the viewer, in this case the camera. However, many of the rays emitted will never reach the camera, so it is much more efficient to work the other way around: rays are cast from the camera through each pixel on the screen, and objects are checked against each ray for an intersection [10]. If there is a hit, information at the impact point in the 3D world contributes to the final color of the pixel [11]. Ray tracing is considered image-centric because it iterates through each pixel to find object intersections, rather than iterating through objects to determine which pixels they correspond to [3]. This gives ray tracing a distinct advantage in shading, because light does not simply stop once it reaches a surface. Different materials interact differently with light, and with ray tracing a ray can be followed through multiple bounces off different objects, all of which contribute to the final color and produce more photorealistic scenes [11]. However, this comes at a higher computational cost than rasterization. Each bounce of a ray can spawn more rays that have to be tracked, stacking up the cost of rendering.
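The backward approach described above can be sketched as a tiny recursive ray tracer in Python (3.9+), loosely in the spirit of Whitted [11]: cast a ray from the camera through each pixel, find the nearest intersection, and follow reflections. This is a simplified illustration under several assumptions. The scene contains only spheres, surfaces are grayscale, shading at each hit is a simple facing ratio rather than a full lighting model, and each hit spawns at most one reflected ray. The hypothetical `RayCounter` exists only to show how the work grows with `max_depth`. Real ray tracers also spawn shadow rays, refraction rays, and multiple samples per pixel, which multiplies the cost further. All names here are illustrative.

```python
import math
from dataclasses import dataclass
from typing import Optional

Vec = tuple[float, float, float]

def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def normalize(a: Vec) -> Vec:
    return scale(a, 1.0 / math.sqrt(dot(a, a)))

@dataclass(frozen=True)
class Sphere:
    center: Vec
    radius: float
    color: float         # grayscale surface brightness in [0, 1]
    reflectivity: float  # share of the final color taken from the reflected ray

@dataclass
class RayCounter:
    count: int = 0

def intersect(origin: Vec, direction: Vec, sphere: Sphere) -> Optional[float]:
    """Distance along a unit-length ray to the nearest hit in front of its origin."""
    oc = sub(origin, sphere.center)
    b = dot(oc, direction)
    disc = b * b - (dot(oc, oc) - sphere.radius ** 2)
    if disc < 0:
        return None  # the ray misses the sphere entirely
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:  # ignore hits behind the origin or at the origin itself
            return t
    return None

def trace(origin: Vec, direction: Vec, scene: list[Sphere], depth: int, counter: RayCounter) -> float:
    counter.count += 1  # every call follows exactly one ray
    nearest: Optional[tuple[float, Sphere]] = None
    for sphere in scene:
        t = intersect(origin, direction, sphere)
        if t is not None and (nearest is None or t < nearest[0]):
            nearest = (t, sphere)
    if nearest is None:
        return 0.0  # background is black
    t, sphere = nearest
    point = add(origin, scale(direction, t))
    normal = normalize(sub(point, sphere.center))
    local = sphere.color * max(-dot(normal, direction), 0.0)  # brighter where the surface faces the ray
    if depth == 0 or sphere.reflectivity == 0:
        return local
    # Follow one mirror bounce: R = D - 2(D.N)N.
    reflected = sub(direction, scale(normal, 2 * dot(direction, normal)))
    bounced = trace(point, reflected, scene, depth - 1, counter)
    return (1 - sphere.reflectivity) * local + sphere.reflectivity * bounced

def render(width: int, height: int, scene: list[Sphere], max_depth: int) -> tuple[list[list[float]], int]:
    """Cast one ray per pixel from a camera at the origin looking down -z."""
    counter = RayCounter()
    image = []
    for row in range(height):
        line = []
        for col in range(width):
            # Direction through the pixel center on an image plane at z = -1.
            x = (col + 0.5) / width * 2 - 1
            y = 1 - (row + 0.5) / height * 2
            line.append(trace((0.0, 0.0, 0.0), normalize((x, y, -1.0)), scene, max_depth, counter))
        image.append(line)
    return image, counter.count

# A small sphere resting on a very large "ground" sphere; deeper bounces cost more rays.
scene = [Sphere((0, 0, -3), 1.0, 0.9, 0.5), Sphere((0, -101, -3), 100.0, 0.6, 0.3)]
for depth in (0, 1, 3):
    _, rays = render(32, 32, scene, depth)
    print(f"max_depth={depth}: {rays} rays")
```

With `max_depth=0` the tracer casts exactly one ray per pixel. Each extra level of bounces adds rays wherever a reflective surface is hit, which is the cost growth the text describes.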

Conclusion

While mass adoption of ray tracing, or even of hybrid rendering that combines rasterization with ray-traced effects, has yet to be achieved, it becomes more accessible every year. Video games might eventually match the graphical fidelity found in animated films; perhaps even Mario himself will one day look like he just jumped out of a Pixar film. The implications of real-time ray tracing reach farther than just games. Medical facilities will be able to produce real-time photorealistic organ visualizations and X-rays for better treatment and diagnosis. Even movies can benefit, with production designers using AR to visualize set designs before production takes place [16]. The future looks bright for video games and other 3D-related fields.

Figure 7: Image from Epic Games’ Unreal Engine 5 tech demo [14]

References

[1] J. Boor, “Final Fantasy VII,” IGN, 03-Sep-1997. [Online]. Available:. [Accessed: 04-Jun-2020].

[2] “1998 Interactive Achievement Awards,” 1998 1st Annual Interactive Achievement Awards. [Online]. Available: https://www.interactive.org/awards/1998_1st_awards.asp. [Accessed: 04-Jun-2020].

[3] Scratchapixel, “Rendering an Image of a 3D Scene: an Overview,” Scratch-A-Pixel, 12-Nov-2014. [Online]. Available: https://www.scratchapixel.com/lessons/3d-basic-rendering/rendering-3d-scene-overview/rendering-3d-scene. [Accessed: 05-Jun-2020].

[4] D. Hemmendinger, “Shading and texturing,” Encyclopædia Britannica, 01-Aug-2014. [Online]. Available: https://www.britannica.com/topic/computer-graphics/Shading-and-texturing. [Accessed: 05-Jun-2020].

[5] P. Collingridge, “Rendering,” The Science Behind Pixar. [Online]. Available: https://sciencebehindpixar.org/pipeline/rendering. [Accessed: 05-Jun-2020].

[6] Scratchapixel, “Rasterization: a Practical Implementation,” Scratchapixel, 25-Jan-2015. [Online]. Available: https://www.scratchapixel.com/lessons/3d-basic-rendering/rasterization-practical-implementation/overview-rasterization-algorithm. [Accessed: 05-Jun-2020].

[7] M. Jacobs, “Graphics Pipeline,” DirectX11 Documentation, 31-May-2018. [Online]. Available: https://docs.microsoft.com/en-us/windows/win32/direct3d11/overviews-direct3d-11-graphics-pipeline. [Accessed: 06-Jun-2020].

[8] B. T. Phong, “Illumination for computer generated pictures,” Communications of the ACM, vol. 18, no. 6, pp. 311–317, Jun. 1975.

[9] The Editors of Encyclopaedia Britannica, “Wave,” Encyclopædia Britannica, 22-Dec-2016. [Online]. Available: https://www.britannica.com/science/wave-physics. [Accessed: 05-Jun-2020].

[10] A. Appel, “Some techniques for shading machine renderings of solids,” Proceedings of the April 30–May 2, 1968, spring joint computer conference on – AFIPS ’68 (Spring), pp. 37–45, Apr. 1968.

[11] T. Whitted, “An improved illumination model for shaded display,” Communications of the ACM, vol. 23, no. 6, pp. 343–349, Jun. 1980.

[12] Nvidia, “10 Years in the Making: NVIDIA Brings Real-Time Ray Tracing to Gamers with GeForce RTX,” NVIDIA Newsroom, 26-May-2020. [Online]. Available: https://nvidianews.nvidia.com/news/10-years-in-the-making-nvidia-brings-real-time-ray-tracing-to-gamers-with-geforce-rtx. [Accessed: 05-Jun-2020].

[13] N. Wasson and E. Frederiksen, “Nvidia lists games that will support Turing cards’ hardware capabilities,” The Tech Report, 21-Aug-2018. [Online]. Available: https://techreport.com/news/34019/nvidia-lists-games-that-will-support-turing-cards-hardware-capabilities/. [Accessed: 05-Jun-2020].

[14] A. Battaglia, “Inside Unreal Engine 5: how Epic delivers its generational leap,” Eurogamer.net, 15-May-2020. [Online]. Available: https://www.eurogamer.net/articles/digitalfoundry-2020-unreal-engine-5-playstation-5-tech-demo-analysis. [Accessed: 05-Jun-2020].

[15] D. Light, Cloud Strife. 2019.

[16] B. Kubala, “Ray Tracing Goes Beyond Gaming,” Computer Rental Blog, 13-Feb-2020. [Online]. Available: https://blog.rentacomputer.com/2019/08/23/ray-tracing-goes-beyond-gaming/. [Accessed: 03-Aug-2020].