Abstract

Recent advances in artificial intelligence have given birth to a new kind of fraud: deepfakes, media manipulated to portray a person as performing an action they did not actually perform. Their use has been primarily malicious, including political misinformation and the creation of fake celebrity porn. Deepfakes are becoming easier to generate as creation methods become freely available online. Detection methods are failing to keep up with the virus-like evolution of creation methods, making deepfakes the ultimate fraud of artificial intelligence.

Deepfakes and Harms to Society

Imagine a world where you could copy and paste the face of your favorite celebrity onto the face of another with just two clicks of your mouse. In the best case, we would end up with Jeff Bezos and Elon Musk starring in Star Trek as in Fig. 1.

However, in this world, your face would not be your own. People could just as easily paste your face onto a video of a person speaking of an ideology you fundamentally disagree with. Similarly, they could create fake media fooling you into thinking a political candidate said something they did not. This would be a world of constant deceit and suspicion, where fake news is the norm and the truth is scarce. Unfortunately, with the advent of deepfakes, this may soon become our reality.

Figure 1: Billionaires in Star Trek. Deepfake of Jeff Bezos and Elon Musk in Star Trek [1].

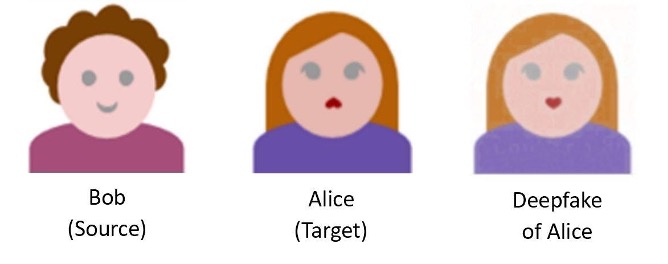

Deepfakes are media altered with deep learning to depict a person performing an action they never actually performed. In particular, most deepfakes take a “source” individual in an image or video and replace them with a victim, or “target”, individual [2]. Fig. 2 shows an example of a deepfake created from the faces of source and target individuals.

Figure 2: Deepfake Example. Sample deepfake created from source and target faces. The deepfake keeps the style and background of the source individual’s picture but replaces her face with that of the target individual.

Deepfakes have been used primarily for malicious purposes. One of the earliest deepfake videos, from 2017, swapped the face of a porn actor with that of a celebrity [3]. More recently, an app called DeepNude, released online last year, could take an image of a clothed woman and generate a nude image of her in mere seconds [4]. AI startups are also now selling deepfake images to dating apps and advertising firms that need diversity on their sites [5]. BuzzFeed also released a now-infamous fake video of former President Barack Obama making derogatory, profanity-ridden statements [6].

On the other hand, social media giants are pushing back against deepfakes. In December of last year, Facebook removed 610 accounts with fake profile pictures that posted content in support of President Trump and against the Chinese government [7]. Furthermore, Facebook recently invested over $10 million in efforts to create a dataset for a deepfake detection challenge and offered $1 million in prizes [8]. In March, Twitter also began warning users before they share or like Tweets that were identified as containing synthetic or manipulated media [9].

Deepfake Creation

Today, creating a deepfake has become incredibly easy. Searching for the phrase “face swap” gives at least ten results on the Apple App Store and many more on Android’s Google Play app store. A deepfake can be created by simply uploading an image of a source and target individual to one of these applications. Under the hood, these applications use a form of artificial intelligence called deep learning to create deepfakes. In this section, we will explore how deep learning can be used for deepfake generation with the help of two cartoon faces that we will name Bob and Alice (Fig. 3). We will use Bob’s face as the source and Alice’s face as the target. The goal is to create a deepfake that looks like Alice but has the same facial expression as Bob.

Figure 3: Bob and Alice. Bob (source) and Alice (target) result in the deepfake on the right.

Two Types of Information in a Face

To better understand the process of creating a deepfake, we can divide the information in a picture of a face into two types: local information and global information. Local information refers to characteristics of a particular person. This is information that defines the style of a person and can be used to clearly distinguish between individuals. For example, imagine that you knew Bob and Alice personally. Then, if you were shown the pictures of Bob and Alice in Fig. 3 with the names hidden, you would still be able to distinguish between Bob and Alice based on Bob’s short curly hair and Alice’s long straight hair. Thus, the hairstyles of Bob and Alice are part of their faces’ respective local information.

On the other hand, global information is not specific to any one individual. This generally includes information such as facial expressions, emotions or actions being performed by an individual in an image. For example, again in Fig. 3, Bob is smiling and Alice is frowning, but it would not be unnatural if in an alternate pair of images, the facial expressions were reversed (i.e., a frowning image of Bob and a smiling image of Alice). This is the key difference between global and local information. Global information can apply to anyone while local information is specific to an individual.

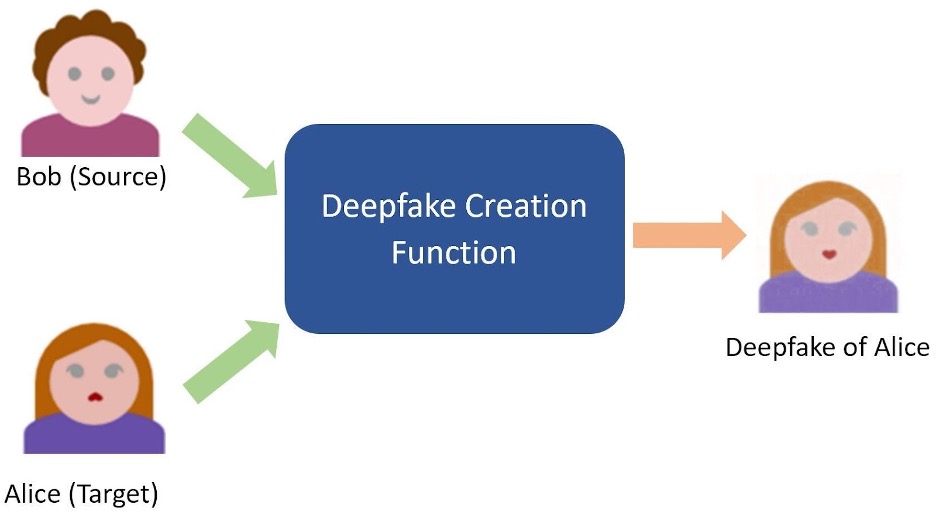

An ideal deepfake has the same style as the target but has the facial expressions and actions of the source. This is depicted in Fig. 3; the deepfake looks like the target (Alice), but has the facial expression (smile) of the source (Bob). Using our classification of information types, we can rephrase the deepfake creation problem: We want a process that takes in a source and a target image and produces a deepfake that has the local information of the target but preserves the global information of the source. Such a process is naturally represented in mathematics using a function – a process that takes in inputs and transforms them into an output. Fig. 4 shows our deepfake creation pipeline using this function representation. As we will see next, viewing the deepfake creation process as a function allows us to use tools from deep learning.

Figure 4: Function Representation of Deepfake Creation. We can represent deepfake creation as a function which transforms input source and target images into an output deepfake.
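One compact way to write this function view down is sketched here. The notation is our own assumption and does not come from the cited sources: S is the source image, T is the target image, D is the deepfake, f is the deepfake creation function, and the hypothetical maps \ell(\cdot) and g(\cdot) extract the local and global information of an image.

```latex
D = f(S, T), \qquad \ell(D) = \ell(T), \qquad g(D) = g(S)
```

For Bob and Alice, the second condition says that the deepfake has Alice's hairstyle, and the third says that it keeps Bob's smile.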

Using Deep Learning to Model a Function

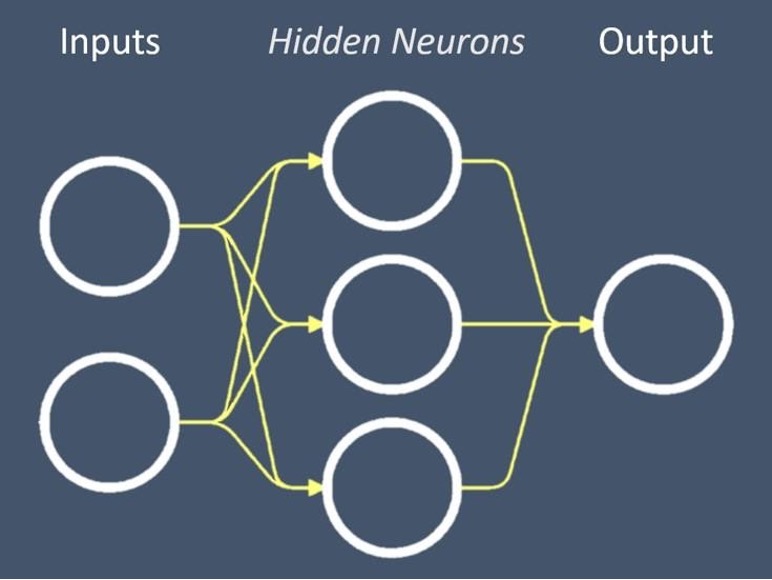

Deep learning is a subset of artificial intelligence which concerns itself with mathematical structures called artificial neural networks (or neural networks for short). While inspired by how neurons are wired together in the brain, artificial neural networks are only a crude approximation of their biological counterpart. Still, neural networks have powerful mathematical properties which have made them a very popular topic of research in the past few years. In particular, neural networks can be used to approximate the transformation that a function performs (e.g., the deepfake creation function’s transformation from source and target images to a deepfake). This process of using a neural network to represent the transformation of a function is called modeling a function. Fig. 5 shows a diagram of a neural network.

Figure 5: Diagram of a Neural Network. A neural network has inputs, hidden neurons and outputs. The hidden neurons can be tuned to represent the desired function.

Each of the circles in the diagram represents an artificial neuron (or neuron for short). There are three different types of neurons in a neural network: input, hidden and output neurons. The input neurons are where we place the input of our function (e.g., the deepfake source and target images). The output neuron is where the output of the function is produced. For us, the output is the deepfake image. The true magic of a neural network lies within its hidden neurons. These neurons are called hidden because they lie between the input and output neurons. We can think of the hidden neurons as small units with parameters (or configurations) that can be tuned. Changing the parameters of these neurons alters the function that the neural network represents. A useful analogy for these units is the set of tuning knobs on a stringed instrument like a violin or guitar. We can tune the note a string produces by rotating its tuning knob. Similarly, we can control the output of a neural network by tweaking the parameters of its hidden units. In fact, it turns out that if we have enough hidden neurons in a neural network, we can tune its hidden neurons to approximate almost any function [10]. It is this universal function approximator property of neural networks that we will exploit to create a deepfake. We will model the deepfake creation function with a neural network called the generator.
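To make the tuning-knob idea concrete, here is a minimal sketch of a network with one input neuron, two hidden neurons and one output neuron. The function names, the ReLU activation and the hand-picked weights are all illustrative assumptions; a real deepfake network has millions of parameters that are learned rather than set by hand.

```python
def relu(x: float) -> float:
    """Activation of each hidden neuron: pass positives, zero out negatives."""
    return x if x > 0.0 else 0.0


def tiny_network(x: float, hidden_weights: list, output_weights: list) -> float:
    """One input neuron, a layer of hidden neurons, and one output neuron."""
    hidden = [relu(w * x) for w in hidden_weights]
    return sum(v * h for v, h in zip(output_weights, hidden))


# Tuning A (hypothetical knob settings): the network computes |x|.
abs_hidden, abs_output = [1.0, -1.0], [1.0, 1.0]
# Tuning B: same architecture, different knob settings, now computes 2 * x.
dbl_hidden, dbl_output = [1.0, -1.0], [2.0, -2.0]

print(tiny_network(-3.0, abs_hidden, abs_output))  # 3.0
print(tiny_network(-3.0, dbl_hidden, dbl_output))  # -6.0
```

The structure of the network never changes between the two calls; only the parameters do, and with them the function that the network represents.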

The Generator

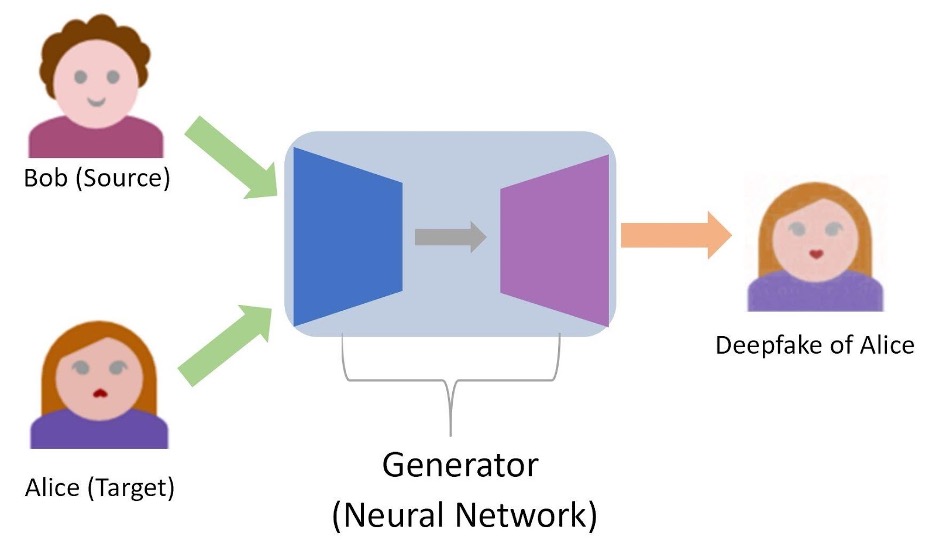

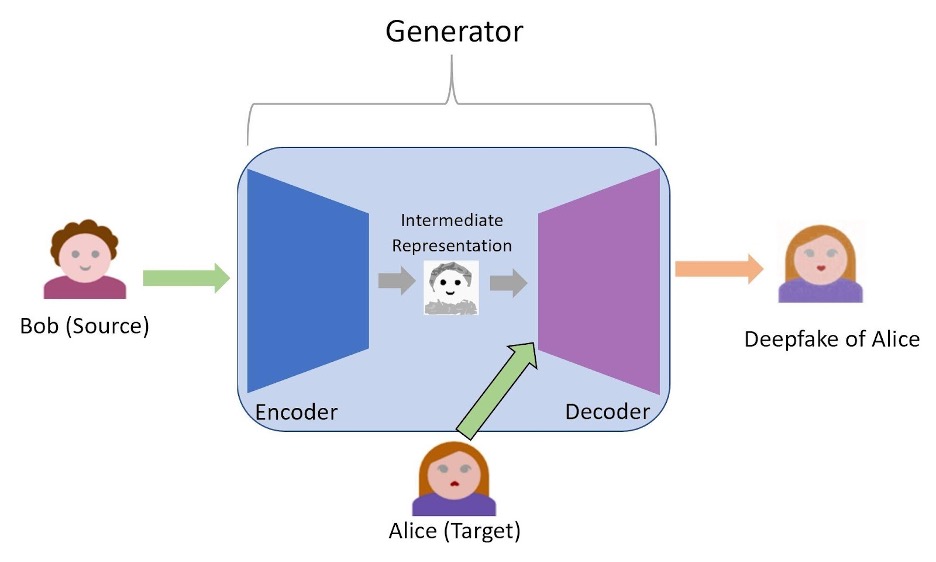

The generator is a neural network that maps the source and target images to the desired deepfake. Fig. 6 modifies the pipeline from Fig. 4 by replacing the function with the generator. You will notice that the generator looks like a horizontal hourglass. This is because the generator itself is composed of two smaller neural networks called the encoder and the decoder.

Figure 6: Neural Network Representation of Deepfake Creation. We model the function in Fig. 4 with a neural network called the generator.

The goal of the encoder is to take the source image, remove its local information and output an intermediate representation that contains only the global information of the source image. In our example, the encoder would take Bob’s image and try to output some intermediate image that has Bob’s smile but with most of Bob’s particular characteristics like his hairstyle and skin color removed. The decoder takes this intermediate output from the encoder and applies the local information of the target to produce the deepfake. So, in our example, the decoder will add the characteristics of Alice, such as her hairstyle, hair color, and lipstick, to the smile that the encoder extracted from Bob’s image. In this way, we obtain the deepfake that we sought: one that preserves the global information of the source but has the local information of the target. Fig. 7 illustrates how the encoder and decoder work together within the generator to create a deepfake.
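The division of labor between encoder and decoder can be sketched with a toy example. Everything here is a simplifying assumption: the hypothetical `Face` class describes a face with a few named attributes instead of pixels, and the encoder and decoder are hand-written rules, whereas real ones are neural networks whose behavior is learned.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Face:
    """Toy stand-in for an image: named attributes instead of pixels."""
    hairstyle: str    # local information (identifies the person)
    lipstick: bool    # local information
    expression: str   # global information (could belong to anyone)


def encoder(source: Face) -> dict:
    """Drop the source's local information; keep only the global part."""
    return {"expression": source.expression}


def decoder(code: dict, target: Face) -> Face:
    """Dress the encoder's output in the target's local information."""
    return Face(hairstyle=target.hairstyle, lipstick=target.lipstick,
                expression=code["expression"])


def generator(source: Face, target: Face) -> Face:
    """Encoder followed by decoder."""
    return decoder(encoder(source), target)


bob = Face(hairstyle="short curly", lipstick=False, expression="smile")
alice = Face(hairstyle="long straight", lipstick=True, expression="frown")
print(generator(bob, alice))
# Face(hairstyle='long straight', lipstick=True, expression='smile')
```

The narrow dictionary passed from `encoder` to `decoder` plays the role of the hourglass's waist: only the global information fits through it.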

The encoder and decoder describe the structure of the generator, but we still need a way to tune the parameters of the generator to make sure it creates a realistic-looking deepfake. A mock exam provides an analogy for how we do this. Imagine that a student takes a mock exam. The teacher then grades the exam and finds mistakes. A good student would then update their knowledge to avoid making the same mistakes again. In this manner, the student learns. We can think of the generator as the student and the task of creating a realistic-looking deepfake as the exam. Then, what we are missing in our analogy is the teacher that evaluates the work of the generator. We use yet another neural network called the discriminator to act as the teacher.

Figure 7: Zoomed-in View of the Generator. The encoder and decoder work together within the generator to create a deepfake.

The Discriminator

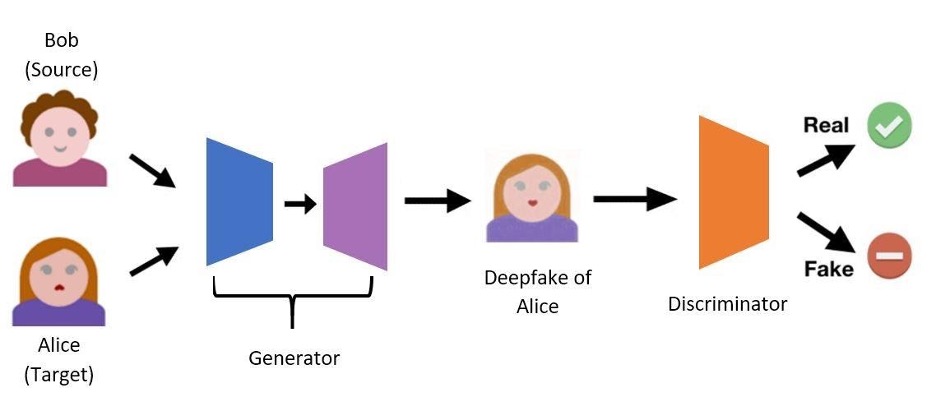

The discriminator is the final component required in the deepfake creation process and is used to improve the quality of the deepfakes created. The job of the discriminator is to take an input image and evaluate whether it looks real or fake. Fig. 8 shows how the discriminator fits in with the generator. If the generator excels at its task, the discriminator will think that the generated deepfake is a real image. If, on the other hand, the discriminator thinks that the created deepfake looks fake, the generator will try to learn from this and update its parameters. The discriminator is only needed while the generator is still learning. Once the generator has improved the quality of its deepfakes to the point where the discriminator always thinks they are real, we can get rid of the discriminator and simply use the generator to create the deepfakes.

Figure 8: The Discriminator. The discriminator’s task is to evaluate whether an input image looks real or fake.
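The feedback loop between teacher and student can be sketched in a few lines. This is a deliberately reduced illustration under stated assumptions: an "image" is a single number, the generator has one parameter, the discriminator is a fixed hand-written scoring rule (in practice it is a neural network that keeps learning too), and the update rule is plain gradient ascent. All names are hypothetical.

```python
REAL_VALUE = 3.0  # assumed: the single number that "real images" look like


def realism_score(x: float) -> float:
    """Fixed stand-in discriminator: the score peaks when x looks real."""
    return -(x - REAL_VALUE) ** 2


def realism_gradient(x: float) -> float:
    """Slope of the score: tells the generator which way to move."""
    return -2.0 * (x - REAL_VALUE)


def train_generator(theta: float, steps: int = 100, learning_rate: float = 0.1) -> float:
    """Nudge the generator's one parameter uphill on the realism score."""
    for _ in range(steps):
        fake = theta  # the toy generator's output is just its parameter
        theta += learning_rate * realism_gradient(fake)
    return theta


theta = train_generator(theta=0.0)
print(round(theta, 3))  # 3.0
```

After training, the discriminator is no longer called: the tuned parameter alone produces an output that scores as real.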

Deepfake Perfection through Neural Network Competition

Another view of the relation between the generator and discriminator is one of competition. In fact, the most successful framework for tuning the generator’s parameters pits the generator against the discriminator in a contest to get better. This framework is called generative adversarial networks (GANs) since the generator and discriminator act as adversaries or opponents in a competition that leads to the generation of a deepfake. The author of the GAN framework, Ian Goodfellow, gives an analogy:

“The generative model can be thought of as analogous to a team of counterfeiters, trying to produce fake currency and use it without detection, while the discriminative model is analogous to the police, trying to detect the counterfeit currency. Competition in this game drives both teams to improve their methods until the counterfeits are indistinguishable from the genuine articles.” [11]

For deepfake creation, then, the generator tries to make its deepfakes look as realistic as possible in order to fool the discriminator. The discriminator learns from the generated fake images and improves its ability to discriminate between the real and fake images. As the discriminator gets better, the generator is forced to improve the quality of its fakes even further in order to fool the improved discriminator. This cycle of competitive learning is repeated until the fakes are indistinguishable from the real images and the discriminator’s best strategy is random guessing, a state game theorists call a Nash equilibrium.
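For readers who want the formal version, the GAN paper [11] states this competition as a two-player minimax game. The symbols follow that paper rather than this article: G is the generator, D(x) is the discriminator's estimated probability that x is real, p_data is the distribution of real images, and z is the generator's input (random noise in the original paper; treating the source and target images as that input is our reading, not something the paper states).

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to make V large by scoring real images near 1 and fakes near 0, while the generator tries to make V small. When the generated images are distributed exactly like real ones, the best possible discriminator outputs D(x) = 1/2 for every image, which is the coin flip described above.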

Combatting Deepfakes: A Game of Cat and Mouse

The above competitive framework for creating deepfakes mirrors a more general cat-and-mouse game between researchers trying to detect deepfakes and hackers trying to evade these detectors. A deepfake detector is similar to a discriminator in that it is a neural network that tries to distinguish between real images and deepfakes. However, there is an important difference in goal between the two. Only the hacker has access to the discriminator and uses it to teach the generator to create deepfakes that look more realistic. On the other hand, a detector is used as a security measure to identify deepfakes. For example, social media giants like Facebook and Twitter are actively trying to remove or flag deepfakes using detectors. Such detectors are able to achieve over 98% accuracy for detecting deepfakes created using the method we discussed in the previous section [12].

While this may seem great at first glance, hackers have since repeatedly modified this deepfake creation method to evade detectors. Just as an improved discriminator forces the generator to improve in the GAN framework, improvements in detection methods are leading to more sophisticated creation methods. This ever-evolving nature of deepfakes has earned the contempt of AI researchers: According to Bart Kosko, Professor of Electrical Engineering and Law at USC, “Deepfakes are the coronavirus of machine learning” [13].

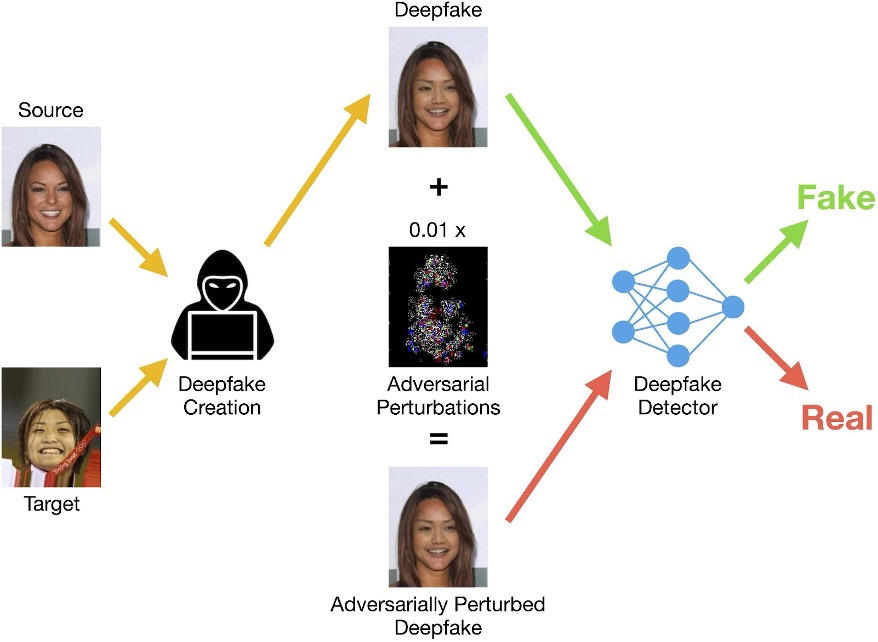

The most invasive form of deepfakes results from modifications called adversarial perturbations – tiny, strategically chosen changes to the pixels of a deepfake. A recent study showed that adversarial perturbations reduce the detection rate of state-of-the-art detectors from over 95% to less than 27% [12]! Fig. 9 illustrates how adversarial perturbations fool deepfake detectors. On the left of the figure, a hacker takes a source and target image and creates a deepfake from them by using a generator and discriminator as we previously discussed. The colored pixels on the black patch in the center represent the adversarial perturbations. In the figure, the perturbations are scaled up by a factor of 100 so that they are visible. The actual adversarial perturbations are so small that they are virtually imperceptible to the human eye. The hacker can create an adversarially perturbed deepfake (visualized at the bottom) by superimposing these perturbations on the original deepfake. The perturbations are so small that the original deepfake and the adversarially perturbed one look essentially the same. Fig. 10 shows additional deepfakes with and without adversarial perturbations. The perturbed and unperturbed deepfakes look identical, yet astonishingly a state-of-the-art detector correctly detects all the unperturbed deepfakes and fails to detect all the perturbed ones.

Figure 9: Deepfake Creation and Adversarial Perturbations. The figure shows an example of adding adversarial perturbations to a deepfake created from source and target faces. The perturbations in the center are scaled up by a factor of 100 since the actual adversarial perturbations are imperceptible to the human eye. A deepfake detector fails to detect the adversarially perturbed image even though it correctly detects the identical-looking unperturbed deepfake [12].

(a) Deepfakes without perturbations. A detector correctly classifies these as fake.

(b) Adversarially perturbed deepfakes. A detector incorrectly classifies these as real.

Figure 10: Deepfakes With and Without Adversarial Perturbations. Perturbed deepfakes look identical to the original unperturbed deepfakes but, unlike them, fool deepfake detectors [12].
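The following sketch shows why such small changes can flip a detector's verdict. It rests on strong assumptions: the detector is a hypothetical linear model with hand-picked weights rather than a real deep network, the "image" is a list of 1000 gray values, and the perturbation is a single sign-of-gradient step in the spirit of the fast gradient sign method. It is not a reproduction of the attack in the cited study.

```python
import math

NUM_PIXELS = 1000

# Hypothetical linear detector: one weight per pixel, positive logit means "fake".
weights = [0.1 if i % 2 == 0 else -0.1 for i in range(NUM_PIXELS)]


def fake_probability(pixels: list) -> float:
    """Detector's estimated probability that the image is a deepfake."""
    logit = sum(w * p for w, p in zip(weights, pixels))
    return 1.0 / (1.0 + math.exp(-logit))


def sign(value: float) -> float:
    return 1.0 if value > 0 else -1.0 if value < 0 else 0.0


def perturb(pixels: list, epsilon: float) -> list:
    """Shift every pixel by at most epsilon in the direction that lowers the logit."""
    # For a linear detector, the gradient of the logit w.r.t. a pixel is its weight.
    shifted = [p - epsilon * sign(w) for w, p in zip(weights, pixels)]
    return [min(1.0, max(0.0, p)) for p in shifted]  # stay in the valid pixel range


deepfake = [0.51 if i % 2 == 0 else 0.49 for i in range(NUM_PIXELS)]
adversarial = perturb(deepfake, epsilon=0.02)

print(fake_probability(deepfake) > 0.5)     # True: flagged as fake
print(fake_probability(adversarial) > 0.5)  # False: passes as real
print(max(abs(a - b) for a, b in zip(adversarial, deepfake)) <= 0.02 + 1e-12)  # True
```

No pixel moves by more than 0.02 on a 0-to-1 scale, yet because every pixel moves in the direction that hurts the detector most, the tiny changes add up across the whole image.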

Techniques such as adversarial perturbations have dire implications. The authors of the adversarial perturbation study provide an analogy: If we think of a deepfake as a virus and a detector as a vaccine, adversarial perturbations are a mutation [13]. Just as one tiny mutation of a virus might render a vaccine useless, tiny perturbations of an image can do the same to state-of-the-art deepfake detectors. Adversarial perturbations are enabling the spread of misinformation that fools not only humans but machines as well. This means that today, we can create deepfakes that are virtually impossible to detect. Unless detection methods catch up soon, our world could devolve into one of deception and doubt. For now, the next time you see an image or video online, it would be wise to be suspicious.

Additional Resources

Further Reading

- Facebook’s investment for detecting deepfakes: https://techcrunch.com/2019/09/05/facebook-is-making-its-own-deepfakes-and-offering-prizes-for-detecting-them/

- Twitter’s new policy of handling synthetic media: https://blog.twitter.com/en_us/topics/company/2019/synthetic_manipulated_media_policy_feedback.html

- The state of deepfake detectors: https://www.theverge.com/2019/6/27/18715235/deepfake-detection-ai-algorithms-accuracy-will-they-ever-work

- Story on the adversarial perturbations research: https://viterbischool.usc.edu/news/2020/05/fooling-deepfake-detectors/

Multimedia Applications

- Deepfake Video of Obama: https://www.buzzfeednews.com/article/davidmack/obama-fake-news-jordan-peele-psa-video-buzzfeed

- Deepfake Video of Elon Musk and Jeff Bezos in Star Trek: https://www.cnet.com/news/elon-musk-jeff-bezos-and-star-trek-collide-in-uncanny-deepfake/

- Online Website that Creates Deepfake Images for Free in Seconds: https://reflect.tech/faceswap/hot

Glossary

- Adversarial Perturbations: Small, strategically chosen modifications made to the pixel values of a deepfake in order to fool detectors.

- Decoder: A neural network in a generator that applies the local information of the target onto the output of the encoder to obtain a deepfake.

- Deepfakes: Media manipulated by deep learning that depict a person as performing an action they did not perform. Common formats of a deepfake include images and video.

- Deep Learning: A subset of artificial intelligence that studies neural networks.

- Discriminator: A neural network that evaluates the performance of a generator by classifying images as real or fake.

- Encoder: A neural network in a generator that preserves the global information of the source in the deepfake.

- Generative Adversarial Network (GAN): A competitive framework used to tune the parameters of a generator.

- Generator: A neural network that consists of an encoder and decoder which creates a deepfake from a source and a target image.

- Global Information: Information such as facial expressions and actions that can be applicable to any individual.

- Hidden Neuron: A unit in a neural network with parameters that can be tweaked in order to change the neural network’s output.

- Local Information: Information such as hairstyle that describes the characteristics of a particular individual.

- Modeling a Function: Using a mathematical structure such as a neural network to represent (often approximately) the transformation that a function performs.

- Nash Equilibrium: The state when a generator excels at its task and the best a discriminator can do to classify the generator’s output is to guess randomly.

- Neural Network: A mathematical structure inspired by the structure of the brain that is used to model mathematical functions. Known more formally as an artificial neural network.

- Source: The image whose global information we want to capture in a deepfake.

- Target: The image whose local information we want to apply to a deepfake.

- Universal Function Approximator: A property of neural networks that allows them to accurately model almost any function.

References

- [1] A. Kooser, “Elon Musk, Jeff Bezos and Star Trek collide in uncanny deepfake,” February 2020. [Online]. Available: https://www.cnet.com/news/elon-musk-jeff-bezos-and-star-trek-collide-in-uncanny-deepfake/. [Accessed Feb. 27, 2020].

- [2] T. T. Nguyen, C. M. Nguyen, D. T. Nguyen, D. T. Nguyen, and S. Nahavandi, “Deep learning for deepfakes creation and detection,” 2019. [Online]. Available: https://arxiv.org/abs/1909.11573. [Accessed Jan. 25, 2020].

- [3] R. Metz, “The number of deepfake videos online is spiking. Most are porn,” October 2019. [Online]. Available: https://www.cnn.ph/business/2019/10/8/Deepfake-videos-porn.html. [Accessed Jan. 25, 2020].

- [4] J. Vincent, “New AI deepfake app creates nude images of women in seconds,” June 2019. [Online]. Available: https://www.theverge.com/2019/6/27/18760896/deepfake-nude-ai-app-women-deepnude-non-consensual-pornography. [Accessed Jan. 25, 2020].

- [5] D. Harwell, “Dating apps need women. Advertisers need diversity. AI companies offer a solution: Fake people,” January 2020. [Online]. Available: https://www.washingtonpost.com/technology/2020/01/07/dating-apps-need-women-advertisers-need-diversity-ai-companies-offer-solution-fake-people/

- [6] D. Mack, “This PSA about fake news from Barack Obama is not what it appears,” April 2018. [Online]. Available: https://www.buzzfeednews.com/article/davidmack/obama-fake-news-jordan-peele-psa-video-buzzfeed. [Accessed Jan. 25, 2020].

- [7] D. O’Sullivan, “Now fake Facebook accounts are using fake faces,” December 2019. [Online]. Available: https://edition.cnn.com/2019/12/20/tech/facebook-fake-faces/index.html. [Accessed Jan. 25, 2020].

- [8] D. Coldewey, “Facebook is making its own deepfakes and offering prizes for detecting them,” September 2019. [Online]. Available: https://techcrunch.com/2019/09/05/facebook-is-making-its-own-deepfakes-and-offering-prizes-for-detecting-them/. [Accessed Jan. 25, 2020].

- [9] D. Harvey, “Twitter: Help us shape our approach to synthetic and manipulated media,” November 2019. [Online]. Available: https://blog.twitter.com/en_us/topics/company/2019/synthetic_manipulated_media_policy_feedback.html. [Accessed Jan. 25, 2020].

- [10] K. Hornik, M. Stinchcombe, and H. White, “Multilayer feedforward networks are universal approximators,” Neural Networks, vol. 2, no. 5, pp. 359–366, 1989.

- [11] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, “Generative adversarial nets,” in Advances in Neural Information Processing Systems, 2014, pp. 2672–2680.

- [12] A. Gandhi and S. Jain, “Adversarial perturbations fool deepfake detectors,” arXiv:2003.10596 [cs], Mar. 2020.

- [13] B. Paul, “Fooling deepfake detectors,” May 2020. [Online]. Available: https://viterbischool.usc.edu/news/2020/05/fooling-deepfake-detectors/. [Accessed May 8, 2020].