Abstract:

The world is in a mental health crisis in which the number of people who need mental health services far exceeds the number of people who are able to provide them. One way to address this issue is through the use of therapy-based chatbots. Modern therapy-based chatbots utilize a rule-based model, which is good for structured therapies such as cognitive behavioral therapy. Generative chatbots in the form of recurrent neural networks with long short-term memory are able to generate their own unique responses, which could lead to chatbots that handle more varied therapies such as psychoanalysis. Roadblocks such as a lack of data have slowed the creation of more advanced mental health chatbots. Despite issues with current models, therapy-based chatbots can still have a huge impact due to the sheer number of people they can help.

Introduction:

The world is in a mental health crisis. 29% of individuals are experiencing or will experience some sort of mental disorder within their lifetime [1]. The global supply of mental health workers is low with only 9 psychiatrists per 100,000 people in developed countries and 0.1 psychiatrists per 1,000,000 people in low income countries [2]. Mental disorders can be debilitating and the resources available to help are sparse. Many people are suffering from symptoms that can be mitigated with the right type of therapy. If there were a way to make these therapies more available and accessible, then millions of lives could be improved.

One way to help these people is through the use of chatbots. Chatbots are machine learning and/or AI-based technologies that use data, algorithms and models to produce human sounding text and audio [2]. Chatbots vary in complexity, spanning from simple customer service chatbots to advanced general language chatbots such as ChatGPT [3]. With their ability to generate appropriate responses and maintain human-like conversations, chatbots have started to make their way into fields such as therapy and psychiatry. The use of chatbots as therapists is exciting for the field of mental health as it helps deal with the mismatch between supply and demand for mental health workers. Obstacles such as cost, accessibility, and judgment, as seen in traditional therapy, are significantly reduced with the use of chatbots [4][5].

Although chatbots are not at the level of human therapists yet, they have made significant progress in therapies that have a structured format with predictable responses [2]. One such therapy is Cognitive Behavioral Therapy (CBT). There are many aspects to CBT, but the core idea is that psychological problems are, in part, based on unhelpful ways of thinking which can be replaced with better patterns of thought to improve one’s mental health [6]. For example, if a patient were to say “I have no friends and will never make any,” a cognitive behavioral therapist might respond with “You might have no friends yet, but it is very possible for you to make friends in the future.”

The structure of CBT makes it a suitable candidate for today’s chatbots, especially when compared to more variable therapies such as psychoanalysis, which requires a deep understanding of the patient’s unconscious motivations [7]. To understand why this is the case, one must first understand how a therapy-based chatbot works.

Technical Overview:

The engineering behind therapy-based chatbots relies on the general structure of Natural Language Processing (NLP): the ability of computers to understand and use human language. The first step is data processing, which converts input from a human into a format that can be understood by the computer [8].

Once the input is formatted properly, it can be sent through a trained model. Models used for therapy-based chatbots are either rule-based or generative. Rule-based models are trained to pick the best response out of a predetermined set of responses, while generative models can create their own responses based on the meaning of the patient’s input [2].

Data Processing:

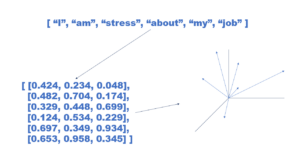

As previously mentioned, in order for a chatbot to understand what a patient is saying, the patient’s statement must first be converted into a format that a computer can understand. At the start of an interaction with a therapy-based chatbot, the patient will input a statement through text. This text must be broken up into smaller pieces through a process called tokenization [11]. Using spaces in the sentence, word tokenization breaks up a text input into its individual words [11]. For example, a patient can enter the sentence “I am worrying about my job,” and the chatbot will divide it up into a list like this: [“I”, “am”, “worrying”, “about”, “my”, “job”]. Each word in the list is called a token.
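The word tokenization step described above can be sketched in a few lines of Python. This is a minimal illustration rather than the tokenizer of any particular chatbot; the function name `tokenize` and the choice to strip punctuation from each word are assumptions made for this example.

```python
def tokenize(text):
    """Split a sentence into word tokens using the spaces between words."""
    # Strip punctuation attached to a word so that "job," becomes "job".
    tokens = [word.strip(".,!?;:\"'") for word in text.split()]
    # Drop anything that consisted only of punctuation.
    return [token for token in tokens if token]


tokens = tokenize("I am worrying about my job,")
print(tokens)
```

Splitting on spaces works for simple English sentences; contractions and languages without spaces between words would need a more careful approach.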

After the patient’s text is tokenized, each token is altered to make it more familiar to the chatbot. Stemming is an alteration where the prefixes and suffixes of a word are deleted leaving just the root [6]. So the word “worrying” becomes “worry.” Lemmatization is an alteration where words from the input are changed to similar words that the chatbot is more familiar with [6].

The training of the chatbot will be discussed in the next section, but one thing to note right now is that a lot of data is required for training. This data acts as a dictionary of words that the chatbot understands. Lemmatization takes an unfamiliar word given by the patient and switches it out with a similar word in the chatbot’s dictionary [6]. So if the chatbot does not understand the word “worry,” then it might switch it out with the word “stress.” Now the list looks like this:

[“I”, “am”, “stress”, “about”, “my”, “job”].
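The two alterations described above can be sketched as follows. Everything here is hypothetical and invented for illustration: the suffix list, the set of known words, and the synonym table. The suffix stripping is far cruder than an established stemmer such as the Porter stemmer, and the synonym table simply stands in for the substitution step as this article describes it.

```python
# Hypothetical suffix list, vocabulary and synonym table for this example.
SUFFIXES = ("ing", "ed", "s")
KNOWN_WORDS = {"I", "am", "stress", "about", "my", "job"}
SYNONYMS = {"worry": "stress"}


def stem(word):
    """Naive stemmer: remove the first matching suffix from longer words."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) > len(suffix) + 2:
            return word[: -len(suffix)]
    return word


def normalize(tokens):
    """Stem each token, then swap unknown roots for a known similar word."""
    result = []
    for token in tokens:
        root = stem(token)
        if root not in KNOWN_WORDS:
            # Fall back to the root itself when no substitute is listed.
            root = SYNONYMS.get(root, root)
        result.append(root)
    return result


print(normalize(["I", "am", "worrying", "about", "my", "job"]))
```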

After the text input is separated and put into its simplest form, it must be converted into a language that the chatbot can understand: numbers [8]. Computers are much better at working with numerical data than with language, so it is important to convert the patient’s input into numbers using a process called vectorization [8]. A vector is simply a line with a length and a direction. Vectors are represented as a list of numbers where each number corresponds to the length of one of the vector’s dimensions. For example, a vector with 3 dimensions consists of 3 numbers [8]. Graphing this vector onto a 3D coordinate system with x, y and z coordinates, the first number in the vector can represent its length in the x-direction, the second number can represent its length in the y-direction and the third number can represent its length in the z-direction.

Vectors can have many more than 3 dimensions. During vectorization for NLP, individual words are converted into vectors with 50-300 dimensions on average [12]. The number of dimensions depends on factors such as how many words the chatbot is likely to work with and how different the words are from each other [12]. The numerical values making up a word’s vector are determined by how similar or different the word is to the other words it is being compared to.

In 3 dimensions, two vectors are closer together when they have similar values for their corresponding components. The same can be said for word vectors in higher dimensions. The closer the vector component values are to each other, the closer the words are in meaning.
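To make the idea of closeness concrete, the sketch below measures the straight-line (Euclidean) distance between word vectors. The three vectors are made-up 3-dimensional values chosen only for illustration; in a real system they would be learned during training. Euclidean distance is used here because it matches the description above, although other measures, such as cosine similarity, are also common for word vectors.

```python
import math

# Made-up 3-dimensional word vectors, chosen only for illustration.
word_vectors = {
    "stress": (0.9, 0.8, 0.1),
    "worry": (0.8, 0.9, 0.2),
    "job": (0.1, 0.2, 0.9),
}


def distance(word_a, word_b):
    """Euclidean distance between the vectors of two words."""
    return math.dist(word_vectors[word_a], word_vectors[word_b])


# Words with similar component values end up closer together.
print(distance("stress", "worry"))
print(distance("stress", "job"))
```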

The specifications for this comparison of word meanings are determined during the training process, which is discussed in the next few sections. After vectorization, the patient’s text input will look like the list of vectors in Figure 1. While this looks very different from the original sentence the patient started out with, this sentence is now in a suitable format for the chatbot to work with.

Figure 1: A patient’s input tokenized, stemmed, lemmatized and vectorized and mapped onto a graph
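Once word vectors exist, vectorizing a processed sentence amounts to looking up each token. The sketch below assumes a small hand-written lookup table with made-up 3-dimensional values; a trained chatbot would use learned vectors with many more dimensions. The all-zero fallback for unknown words is also an assumption of this example.

```python
# Made-up embeddings; real ones are learned and typically have 50-300 dimensions.
EMBEDDINGS = {
    "I": [0.1, 0.0, 0.3],
    "am": [0.2, 0.1, 0.3],
    "stress": [0.9, 0.8, 0.1],
    "about": [0.3, 0.2, 0.4],
    "my": [0.1, 0.1, 0.4],
    "job": [0.1, 0.2, 0.9],
}
UNKNOWN = [0.0, 0.0, 0.0]  # placeholder vector for words not in the table


def vectorize(tokens):
    """Replace each token with its vector, giving a list of vectors."""
    return [EMBEDDINGS.get(token, UNKNOWN) for token in tokens]


vectors = vectorize(["I", "am", "stress", "about", "my", "job"])
print(vectors)
```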

Rule-Based:

Once the input from the patient has been processed, it can be used by the chatbot to create an appropriate response. A chatbot does this by running the input through a model that is trained using therapy-specific data [13]. Two categories of models used for therapy-based chatbots are rule-based and generative. Most current therapy-based chatbots use rule-based models due to their simple structure [2].

Rule-based models utilize what is called a decision tree [2]. Using therapy-related data such as transcripts from therapy sessions, potential inputs from the patient can be tied to potential responses from the chatbot. A patient sends a message to the chatbot via text, and the message is compared with the potential inputs stored by the chatbot [4]. Whichever stored input is most similar to the patient’s message gets triggered, and the corresponding response from the chatbot is output. The connections between all these potential inputs and outputs form a tree-like structure [2]. An example of how a therapy-based decision tree might be structured can be seen in the table below.

The similarity between the patient’s input and the chatbot’s stored potential inputs is determined by the number of keywords the two inputs share [4]. Figure 2 shows how a patient’s input might be compared against the chatbot’s stored inputs.

Figure 2: A patient’s input being compared to the chatbot’s stored inputs with the most similar one being selected along with its corresponding output
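The keyword comparison can be sketched as a small lookup. The stored inputs and responses below are hypothetical and written for this example; they are not taken from Woebot or any other product. The sketch counts the words shared between the patient’s message and each stored input, then returns the response tied to the best match.

```python
# Hypothetical stored inputs and their responses, invented for this example.
RULES = {
    "I am stressed about my job": "What about your job is causing you stress?",
    "I have no friends": "It is very possible for you to make friends in the future.",
    "I cannot sleep at night": "What thoughts come up when you try to fall asleep?",
}


def keywords(text):
    """Lowercased set of the words in a sentence, without punctuation."""
    return {word.strip(".,!?").lower() for word in text.split()}


def respond(patient_input):
    """Return the response whose stored input shares the most keywords."""
    patient_words = keywords(patient_input)
    best_match = max(RULES, key=lambda stored: len(patient_words & keywords(stored)))
    return RULES[best_match]


print(respond("My job makes me so stressed"))
```

When no stored input shares any keyword with the message, this sketch still returns some response, which mirrors the unrelated replies that rule-based models can produce.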

Woebot is a good example of a rule-based therapy chatbot [14]. Woebot specializes in CBT and has been shown to decrease symptoms of depression and anxiety in its users [14][15]. The product works by prompting the user to talk about how they feel. The user inputs any negative thoughts they are having and Woebot responds with better ways to frame the thoughts so that the user can develop better thinking patterns.

The simplicity of rule-based models makes them easy to implement, but it also creates limitations in the depth and flexibility of the responses they can produce [16]. If a patient types a response that is very different from all the potential inputs stored in the model, then the model’s response is likely to seem unrelated and awkward to the patient. To do therapies such as psychoanalysis, a chatbot will need to be able to understand the context of what the patient is saying and be able to provide specific responses that directly relate to the patient’s problems. A more advanced model is needed to achieve this, and the understanding of these models starts with a basic understanding of neural networks.

Neural Networks:

The fundamental component of a neural network is the perceptron. A perceptron can be thought of as a set of functions that take in inputs and spit out an output [17]. For example, Figure 3 shows a “negativity-detector” perceptron. This perceptron takes in a word as input and outputs whether the word is negative or not negative. In this case the input is “stress” and the output is “negative.” It’s important to note that the actual input is the vector representation of the word “stress,” but for the purpose of visualizing how neural networks work, word representations will be used for the following examples.

Figure 3: A Negativity Detector based perceptron that outputs “Negative” when a negative word like “Stress” is input

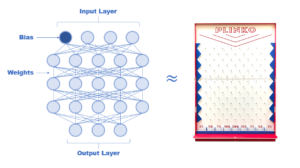
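A “negativity-detector” perceptron can be sketched as a weighted sum followed by a threshold. The word vectors, weights and bias below are made up for illustration; a trained model would learn its weights and bias from data. The step function used here is one simple choice of output rule.

```python
def perceptron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through a step function."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return "negative" if total > 0 else "not negative"


# Made-up word vectors, weights and bias, chosen only for this example.
stress_vector = [0.9, 0.8, 0.1]
calm_vector = [0.1, 0.2, 0.9]
weights = [1.0, 1.0, -1.0]
bias = -0.5

print(perceptron(stress_vector, weights, bias))
print(perceptron(calm_vector, weights, bias))
```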

Neural networks are a network of perceptrons connected together in layers [17]. The first layer is called the input layer and this is where the patient’s text starts. The last layer is called the output layer and this is where the final response is generated and output to the user. All the layers between these two layers are called hidden layers. The goal of a neural network is to provide a value for the input layer, send it through the hidden layers and have the model generate a proper response from the output layer.

Training is done by altering the weights and the bias of the network [18]. The weights are the strengths of the connections between the perceptrons in the network, and a bias is a constant added to the weighted inputs of a perceptron.

One can think of the network as a “Plinko board” with a disc falling from the top. The disc represents the input given by the patient. The poles in the board represent the perceptrons and the pockets at the bottom represent the output layer. Changing the sizes of the poles represents changing the weights of the network while changing the size of the disc represents changing the bias of the network. The goal is to change the sizes of the poles and disc so that the disc lands in the desired pocket at the bottom of the Plinko board. The comparison can be seen in Figure 4.

Figure 4: A neural network with an input layer, output layer, weights and bias being compared to a Plinko board

When the model is being trained, data is used to figure out which weights and biases most consistently result in the correct output [18]. The process starts by testing a network with an arbitrary set of weight and bias values. The data is fed through the model and the outputs of each input are compared with the correct outputs for each input to create a cost function [19]. This function represents how inaccurate the model was with the current weights and bias.

For example, a more advanced version of the “negativity-detector” is shown in Figure 5. This model takes in a group of words and determines the negativity level of the group. Each guess by the model is compared with the correct answer with the difference being the result of the cost function for that input. All the values are then added together to get the total cost of the model.

Figure 5: A more advanced negativity detector going through a training iteration and having its cost function evaluated
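The cost calculation can be sketched as below. The predictions and correct answers are made-up numbers, and squaring each difference before adding is an assumption of this example (it is a common choice because it keeps positive and negative errors from cancelling out).

```python
def total_cost(predictions, targets):
    """Sum of squared differences between each guess and the correct answer."""
    return sum((guess - correct) ** 2 for guess, correct in zip(predictions, targets))


# Made-up negativity levels guessed by the model, and the correct levels.
predictions = [0.9, 0.2, 0.6]
targets = [1.0, 0.0, 1.0]

print(total_cost(predictions, targets))
```

A perfect model would have a total cost of zero; the further the guesses drift from the correct answers, the larger the cost becomes.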

The goal of a neural network is to decrease this cost function as much as possible [19]. This is done through a method called backpropagation [19]. The math behind backpropagation is beyond the scope of this article but resources on the topic can be found in further readings. For the purposes of this article, backpropagation can be understood as a process that sends the result of the cost function backwards through the neural network.

Backpropagation shows how much each weight in the network affected the outcome of the model. Specifically, it calculates how much the cost function changes when each weight or the bias changes by a small amount [19]. In other words, backpropagation measures how sensitive the outcome was to each weight and the bias, and then changes the weights and bias accordingly to try to lower the cost function.

With each iteration of training, the weights and the bias are altered [19]. Over time, patterns start to form as to which set of weights and biases correlate to higher accuracy from the model until an optimal combination is found. This is when the model is fully trained and ready to be used.
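The training loop can be illustrated on the smallest possible model: one weight and one bias. This is plain gradient descent with hand-derived gradients, not backpropagation through a multi-layer network, and the toy data, learning rate and step count are assumptions chosen for the example.

```python
# Toy data that follows the rule target = 2 * x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]


def cost(weight, bias):
    """Sum of squared errors of the model weight * x + bias on the toy data."""
    return sum((weight * x + bias - target) ** 2 for x, target in data)


def train(steps=2000, learning_rate=0.01):
    weight, bias = 0.0, 0.0  # arbitrary starting values
    for _ in range(steps):
        # How sensitive the cost is to the weight and to the bias.
        grad_weight = sum(2 * (weight * x + bias - target) * x for x, target in data)
        grad_bias = sum(2 * (weight * x + bias - target) for x, target in data)
        # Nudge both values in the direction that lowers the cost.
        weight -= learning_rate * grad_weight
        bias -= learning_rate * grad_bias
    return weight, bias


weight, bias = train()
print(weight, bias, cost(weight, bias))
```

With these settings the weight and bias settle close to 2 and 1, the values that generated the data.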

A neural network like this can work well for tasks where the order of the inputs does not matter. For example, in the advanced “negativity-detector,” the order of the words in the group is irrelevant to the group’s negativity level. Language however is sequential in nature. The meaning of a sentence is dependent on the order of its words [20].

For example, a patient could either say “I feel nervous around people and happy by myself” or “I feel happy around people and nervous by myself.” These two sentences contain the same words. However, the order of these words drastically changes the meaning of the sentence. In order to analyze the difference between these two sentences, a more advanced neural network is needed [20].
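A short check makes the point concrete: a model that only counts words cannot tell these two sentences apart. The sketch uses Python’s `collections.Counter` as a stand-in for any order-insensitive representation.

```python
from collections import Counter

sentence_a = "I feel nervous around people and happy by myself"
sentence_b = "I feel happy around people and nervous by myself"

# Counting words ignores their order.
counts_a = Counter(sentence_a.split())
counts_b = Counter(sentence_b.split())

same_word_counts = counts_a == counts_b
same_word_order = sentence_a.split() == sentence_b.split()

print(same_word_counts)  # the two sentences contain exactly the same words
print(same_word_order)   # but not in the same order
```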

Recurrent Neural Network:

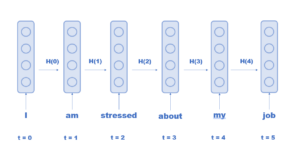

Recurrent neural networks (RNNs) are neural networks that account for the importance of sequence in human language by feeding the output of the model back into itself with each new input [9]. RNNs are often represented as snapshots of the same neural network as it changes over time, such as in Figure 6. Here, the patient’s text is fed into the neural network one word at a time. However, the result of the neural network, influenced by previous words, is input along with the current word. As a result, the output at t=3 is based on the sequence of words “I am stressed about” rather than just the word “about.”

Figure 6: A recurrent neural network analyzing a patient’s input one word at a time
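The feedback loop of an RNN can be sketched with single numbers standing in for word vectors. The weights and the input values are made up, and using `tanh` to squash the result is a common choice assumed here. The key line is the one that combines the current word with the hidden state left over from the previous words.

```python
import math


def rnn_step(word_value, hidden, w_input, w_hidden, bias):
    """Combine the current word with the memory of the previous words."""
    return math.tanh(w_input * word_value + w_hidden * hidden + bias)


def run_rnn(word_values, w_input=0.5, w_hidden=0.8, bias=0.0):
    """Feed the words in one at a time and record the hidden state after each."""
    hidden = 0.0  # nothing has been read yet
    states = []
    for value in word_values:
        hidden = rnn_step(value, hidden, w_input, w_hidden, bias)
        states.append(hidden)
    return states


# The same two made-up word values in a different order give a different result.
print(run_rnn([0.2, 1.0]))
print(run_rnn([1.0, 0.2]))
```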

With this feature, the model now has the potential to generate its own responses [9]. This is done through an encoder and decoder RNN. The encoder analyzes the patient’s text word by word to get an understanding of the text’s meaning [10]. Once the sentence is completely processed by the encoder, the output is fed through the decoder that generates a proper response, word by word [10]. Similar to the encoder, the words generated later on in the model’s response are reliant on the context of the words generated earlier in the model’s response.

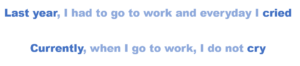

While RNNs allow for text generation, they are still limited in the length of inputs they can accurately respond to due to the “Vanishing Gradient Problem.” This problem arises from the mathematical methods of backpropagation. Conceptually, the vanishing gradient problem occurs when the patient’s input becomes too long [21]. Figure 7 shows how words at the beginning of a sentence can determine what words go at the end of a sentence. In this example, the first few words affect the tense of the word at the end.

Figure 7: The last word of each sentence depends on the first word or words of each sentence

As an RNN gets further down the sentence, the ability for the words at the beginning of the sentence to have an effect on the final output decreases since their influence gets diluted by the increasing calculations done with each iteration of the model [21]. Because of this, a more advanced version of an RNN is needed.
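The dilution can be illustrated with simple arithmetic. The sketch below assumes, purely for illustration, that the influence of the first word is multiplied by the same factor smaller than 1 at every step; real networks apply different factors at each step, but the shrinking behaves in a similar way.

```python
def influence_after(steps, factor=0.5):
    """Influence of the first word after being scaled by `factor` at every step."""
    influence = 1.0
    for _ in range(steps):
        influence *= factor
    return influence


# The longer the sentence, the less the first word still matters.
for steps in (1, 5, 10, 20):
    print(steps, influence_after(steps))
```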

Long Short Term Memory:

Long Short Term Memory (LSTM) is an RNN that has a short term and long term memory [22]. The short term memory acts as a normal RNN, taking note of the influence the last few words have had on the model. The long term memory can remember the influence of words all the way to the beginning of the sentence [22]. The short term memory is called the “hidden state” of the RNN and the long term memory is called the “cell state.”

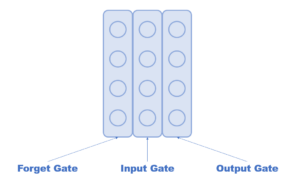

Figure 8 shows the three gates that make up an LSTM. There is the forget gate, the input gate, and the output gate [22]. At the start of each iteration, the current word, the hidden state and the cell state are input into the LSTM. First, the cell state passes through the forget gate, which tells the cell state what information it should forget [22]. This process removes words from the cell state that are not relevant to the understanding of the patient’s input or the generation of the chatbot’s response [23].

Figure 8: An LSTM neural network with a forget, input and output gate

After the proper information has been forgotten, the altered cell state goes through the input gate to determine if the current word should be added to the long term memory or not [22]. This process determines if the current word seems relevant for the understanding of the patient’s text or the generation of the chatbot’s response [23].

Finally the altered cell state and the hidden state are fed into the output gate through which an output is produced [22]. This output can be a new hidden state for the next iteration, the meaning of the text as a whole, or the response generated by the decoder [23].
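The three gates can be sketched with single numbers standing in for vectors. This follows the standard LSTM update, in which each gate is computed from the current word and the hidden state and then applied to the cell state. The parameter values are hypothetical and chosen only so the example runs; a trained LSTM learns them.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(word_value, hidden, cell, params):
    """One LSTM iteration on scalar values; returns the new hidden and cell state."""
    def gate(name, squash):
        w_in, w_hidden, bias = params[name]
        return squash(w_in * word_value + w_hidden * hidden + bias)

    forget = gate("forget", sigmoid)          # 0 forgets everything, 1 keeps everything
    write = gate("input", sigmoid)            # how much new information to store
    candidate = gate("candidate", math.tanh)  # new information from the current word
    output = gate("output", sigmoid)          # how much of the memory to reveal

    new_cell = forget * cell + write * candidate  # updated long term memory
    new_hidden = output * math.tanh(new_cell)     # updated short term memory
    return new_hidden, new_cell


# Hypothetical (input weight, hidden weight, bias) values for each gate.
params = {
    "forget": (0.5, 0.5, 1.0),
    "input": (0.5, 0.5, 0.0),
    "candidate": (1.0, 1.0, 0.0),
    "output": (0.5, 0.5, 0.0),
}

hidden, cell = 0.0, 0.0
for value in [0.3, -0.1, 0.8]:  # made-up word values
    hidden, cell = lstm_step(value, hidden, cell, params)
print(hidden, cell)
```

Because the forget gate multiplies the old cell state by a value between 0 and 1, a gate that stays near 1 lets early information survive across many steps.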

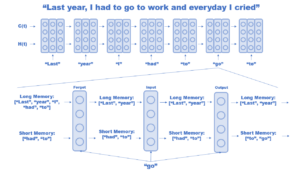

A simplified example of how these three gates work can be seen in Figure 9. Here the sentence “Last year, I had to go to work and every day I cried,” is input into the LSTM. Each word is input one at a time, along with the long term and short term memory. Once the model gets to the word “go,” all the words so far have been maintained within the long term memory while only the last two words have been maintained in the short term memory. The forget gate takes in all 3 inputs and decides which words in the long term memory are worth forgetting. After this, the long term memory only maintains the words “Last” and “year” because they are relevant to the time that the sentence refers to.

Then the input gate uses the inputs to decide if the word “go” is an important word to store in long term memory. Since the word “go” is not important for the meaning of the whole sentence, it is not added. Finally the output gate updates the short term memory with the word “go,” and sends both memories into the model again for the next iteration.

Figure 9: A simplified version of an LSTM in action on a patient’s input

An LSTM has the ability to create unique responses specific to a patient’s needs which makes it a suitable model for a therapy-based chatbot.

Roadblocks:

Even with the availability of methods to create advanced, therapy-based chatbots, there are lots of roadblocks to creating ones that work as well as human therapists. One roadblock is the concern for the amount of impact a therapy-based chatbot can have on a patient. While an incorrect customer service chatbot might annoy a customer, an incorrect therapy-based chatbot can cause serious harm to a patient [4].

Earlier implementations of Woebot have been shown to fail at catching patients in a time of crisis. For example, in a mock conversation where researchers told the chatbot they wanted to jump off a cliff, Woebot responded with “It’s so wonderful that you are taking care of both your mental and physical health” [24]. While Woebot has since fixed this problem, the example can show just how detrimental inappropriate responses can be to a patient. This ability to seriously affect a patient’s health makes the effort to create an accurate chatbot very important. In order to make these chatbots more accurate, another roadblock must be overcome: lack of data.

Because therapy deals with a lot of private information, a lot of the potential data that could be used to train these models is unavailable [4]. This makes a lack of data a unique problem for therapy-based chatbots. For example, the popular mental health chatbot “Evebot” has access to 3 million points of data while one of Google’s chatbots has access to 40 billion points [4]. This lack of data results in poorer responses from therapy chatbots, which can be seen in user surveys. Some users have claimed that the responses from bots were irrelevant and repetitive, which made the bot seem less like a real person [1]. More therapy-based data needs to be available for chatbots before they can substitute for humans.

Lack of data can also pose ethical issues such as machine bias. It has been very common to find bias in the forms of racism, sexism, and false information in current AI-based text systems [3]. This bias is often not intentional but rather a result of having data sets that are unrepresentative of the actual population. If training data sets fail to have an accurate representation of minority groups, then chatbots trained with this data might fail to help these groups effectively [5]. While more data is needed overall, it is also important to ensure the quality of the data to make sure no demographic gets underrepresented.

Another potential roadblock to therapy-based chatbots is the concern of addiction. Increased accessibility to therapy through these chatbots can become a problem if people become too attached [5]. These chatbots are optimized to best serve their patients. Having access to a chatbot that perfectly caters to one’s needs might decrease the patient’s motivation to seek support from friends and family and result in further isolation [5]. As chatbots become better therapists, restrictions might have to be placed on how intertwined the bot and the patient can become in order to avoid chronic use.

Conclusion:

Although therapy-based chatbots have hurdles to overcome, it is important to ask, “Is some therapy better than none?” The current chatbots are not perfect and are in no shape to replace traditional therapists. Despite all these problems, chatbots can still provide support to millions of people who do not have any access to therapy. Woebot is not the same as a professional cognitive behavioral therapist, but it can still provide a net positive effect on its users’ mental health. As more data is made available, therapy-based chatbots are bound to get better. With each improvement, one can be excited for a potential world where the ratio of therapists to patients reaches 1:1.

Further Readings:

- On Neural Networks and how the Algorithms Work:

3Blue1Brown, “But what is a neural network? | Chapter 1, Deep learning” Oct. 5th, 2017, Available: https://www.youtube.com/watch?v=aircAruvnKk&list=PLZHQObOWTQDNU6R1_67000Dx_ZCJB-3pi [Accessed Sept 29th, 2023]

- On Cognitive Behavioral Therapy:

“What is Cognitive Behavioral Therapy?” apa.org, 2017 [Online] Available: https://www.apa.org/ptsd-guideline/patients-and-families/cognitive-behavioral#:~:text=CBT%20treatment%20usually%20involves%20efforts,behavior%20and%20motivation%20of%20others. [Accessed Sept 28th, 2023]

- Review of Woebot:

- Bradley. “I Tried the Woebot AI Therapy App to See if It Would Help My Anxiety and OCD” verywellmind.com, Aug. 24th, 2023, [Online] Available: https://www.verywellmind.com/i-tried-woebot-ai-therapy-app-review-7569025#:~:text=I%20liked%20that%20Woebot%20gave,I%20felt%20like%20in%20the [Accessed: Sept 28th, 2023]

- M. A. Nielsen, Neural networks and deep learning,

http://neuralnetworksanddeeplearning.com/chap2.html (accessed Dec. 12, 2023).

- Youtube, 2021. codebasics, “Vanishing and exploding gradients | Deep Learning Tutorial 35 (Tensorflow, Keras & Python)” Youtube, January 23, 2021

Bibliography:

[1] AA. Abd-alraza, “Perceptions and Opinions of Patients About Mental Health Chatbots: Scoping Review,” JMIR, Volume 23, Issue 1, Jan 2020.

[2] AA. Abd-alraza, “An overview of the features of chatbots in mental health: A scoping review,” IJMEDINF, Volume 132, Dec 2019.

[3] KD. Darling. (2023, March. 12). “Rise of the therapy chatbots: Should you trust an AI with your mental health?” https://www.sciencefocus.com/news/therapy-chatbots-ai-mental-health

[4] BX. Xu, “Survey on psychotherapy chatbots,” CPE, Volume 34, Issue 7, Dec 2020.

[5] KD. Denecke, “Artificial Intelligence for Chatbots in Mental Health: Opportunities and Challenges,” in Multiple Perspectives on Artificial Intelligence in Healthcare, MH. Househ, Switzerland: Springer Nature, 2021, pp.115-128.

[6] VG. Gupta, “Chatbot for Mental health support using NLP,” in International Conference for Emerging Technology (INCET), Belgaum, India, 2023.

[7] “Different approaches to psychotherapy,” American Psychological Association, https://www.apa.org/topics/psychotherapy/approaches (accessed Dec. 12, 2023).

[8] “Word Embeddings” codecademy.com, [Online] Available: https://www.codecademy.com/learn/retrieval-based-chatbots/modules/nlp-word-embeddings/cheatsheet [Accessed Sept 19th, 2023]

[9] Youtube, 2021. codebasics, “What is Recurrent Neural Network (RNN)? Deep Learning Tutorial 33 (Tensorflow, Keras & Python)” Youtube, January 12, 2021

[10] “SEQ2SEQ model in machine learning,” GeeksforGeeks, https://www.geeksforgeeks.org/seq2seq-model-in-machine-learning/ (accessed Dec. 12, 2023).

[11] A. Menzli, “Tokenization in NLP: Types, Challenges, Examples, Tools” neptune.ai, Aug. 11th, 2023. [Online]. Available: https://neptune.ai/blog/tokenization-in-nlp [Accessed Sept 19th, 2023]

[12] baeldung, “Dimensionality of word embeddings,” Baeldung on Computer Science, https://www.baeldung.com/cs/dimensionality-word-embeddings#:~:text=Most%20commonly%2C%20word%20embeddings%20have,lower%20dimensions%20are%20also%20possible. (accessed Dec. 12, 2023).

[13] K. Kalinin “Founder-Friendly Guide to Building a Mental Health Chatbot: Analyzing Alternative Approaches” topflightapps.com, Aug. 31st, 2022 [Online] Available: https://topflightapps.com/ideas/build-mental-health-chatbot/ [Accessed Sept 19th, 2023]

[14] SR. Reardon. (2023, June. 14). “AI Chatbots Could Help Provide Therapy, but Caution Is Needed” https://www.scientificamerican.com/article/ai-chatbots-could-help-provide-therapy-but-caution-is-needed/#:~:text=People%20seeking%20psychological%20help%20often,can’t%20ultimately%20replace%20therapists.

[15] Woebot Health. 2017 [Online] Available: https://woebothealth.com/ [Accessed Sept 28th, 2023]

[16] KR. Rani, “A Mental Health Chatbot Delivering Cognitive Behavior Therapy and Remote Health Monitoring Using NLP And AI” in International Conference on Disruptive Technologies (ICDT), India, 2023.

[17] C. Woodford, “How neural networks work – a simple introduction,” Explain that Stuff, https://www.explainthatstuff.com/introduction-to-neural-networks.html (accessed Dec. 12, 2023).

[18] M. A. Nielsen, Neural networks and deep learning, http://neuralnetworksanddeeplearning.com/chap1.html (accessed Dec. 12, 2023).

[19] M. A. Nielsen, Neural networks and deep learning, http://neuralnetworksanddeeplearning.com/chap2.html (accessed Dec. 12, 2023).

[20] A. Biswal, “Power of recurrent neural networks (RNN): Revolutionizing ai,” Simplilearn.com, https://www.simplilearn.com/tutorials/deep-learning-tutorial/rnn (accessed Dec. 12, 2023).

[21] Youtube, 2021. codebasics, “Vanishing and exploding gradients | Deep Learning Tutorial 35 (Tensorflow, Keras & Python)” Youtube, January 23, 2021

[22] S. Saxena, “What is LSTM? introduction to long short-term memory,” Analytics Vidhya, https://www.analyticsvidhya.com/blog/2021/03/introduction-to-long-short-term-memory-lstm/#:~:text=Bidirectional%20LSTMs%20 (accessed Dec. 12, 2023).

[23] Youtube, 2021. codebasics, “Simple Explanation of LSTM | Deep Learning Tutorial 36 (Tensorflow, Keras & Python)” Youtube, February 6, 2021

[24] G. Browne. “The Problem With Mental Health Bots,” WIRED Oct. 1st, 2022 [Online], Available: https://www.wired.com/story/mental-health-chatbots/#:~:text=In%202018%2C%20it%20was%20found,reports%20of%20child%20sexual%20abuse [Accessed: Sept 19th, 2023]