We go to the movie theater expecting to be tricked into believing a story set in another world, often one beyond our imagination. But we’ve all seen behind-the-scenes footage from our favorite films: the actor we love standing in front of a green screen in a bodysuit with little spheres taped to it. Even during the film itself, you can often tell that the actor is trying their best to interact with another character or environment that simply isn’t there, and that can ruin the film. We want every scene to feel real, as if the actors were facing real danger, real environments, and real heartbreak.

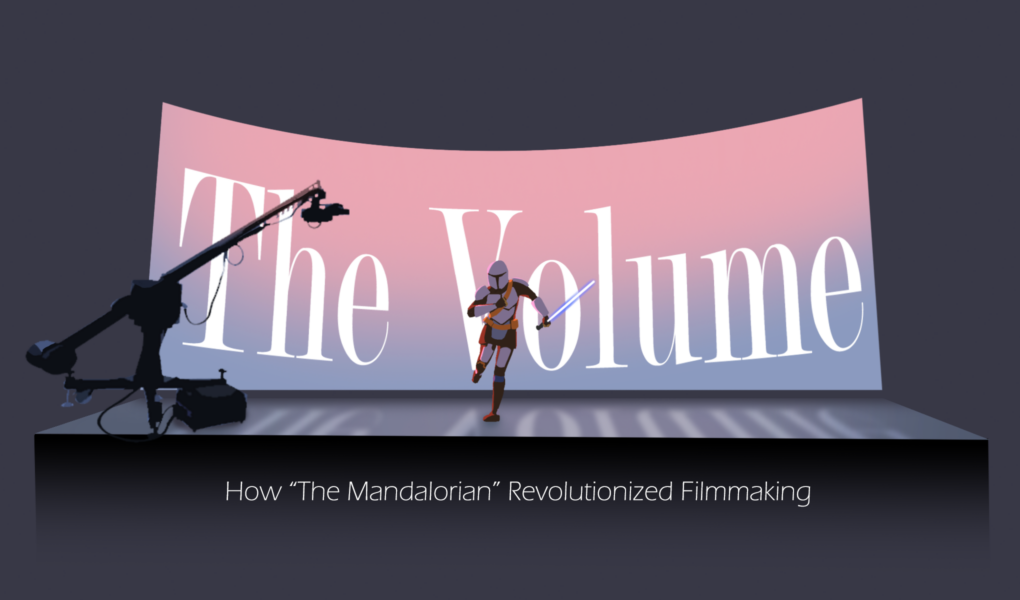

Relying entirely on CGI (computer-generated imagery) added in post-production, in place of a real environment, can break the “magic” of filmmaking unless it is done with extreme care and millions of dollars. However, the creators of the hit show “The Mandalorian” took a different route to solve this issue [1]. They leaned into new technology and built a cylindrical wall made entirely of digital screens, capable of displaying any desired environment around the actors during, or even before, filming rather than after it.

Fig. 1. Behind-the-scenes view of a close-up being filmed in “The Volume,” with props and a fully built set in front of a generated backdrop. Source: [5]

As new as this technology may sound, it is actually a play on a much older form of Hollywood trickery. One could argue that it is a step back toward fake backdrops: mural-sized walls with painted clouds, like those you might have seen at Universal Studios or in your grandfather’s favorite Western. However, this 270-degree wall of LED screens can create an environment around an actor that would make your grandfather’s dentures fall into his lap. They call it “The Volume,” a technology developed and owned by Industrial Light & Magic (ILM), a Disney company [2].

The creatives and film producers at Disney needed a way to create the distinctive settings of Star Wars on a budget manageable for a TV show rather than a typical blockbuster movie. Their solution actually stemmed from trying to solve a much simpler problem. If you’re a fan of the show, or even just familiar with it, you know that the main character wears a stunning suit of reflective armor for nearly the entire show and takes his helmet off only once or twice. That armor is practically a mirror: it could show the crew standing behind the camera, and it would certainly reflect the green screens needed if the show’s environments were created with CGI. The reflective suit alone was therefore going to cause “costly problems in post-production” [2]. As the technology was being developed to solve this issue, the engineers at ILM saw the potential for something more, and “The Volume” was built, revolutionizing the way projects like this could be filmed.

The first version of “The Volume,” created for season 1 of “The Mandalorian,” was a curved set of connected screens standing 20 feet tall and spanning 180 feet around its curve [2]. Measured from the inside, the cylinder was 75 feet across, and it wrapped 270 degrees around the camera and actors. That’s large enough to fit two school buses lengthwise. In case you were worried about the remaining 90 degrees left open, there were also two 18-by-20-foot screens on a motorized track that could move in to fill the gap when more backdrop was needed. And to literally top it all off, an LED video ceiling sat atop the 75-foot cylinder to provide the perfect environment from every viewpoint.
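As a quick sanity check on these dimensions, the length of a 270-degree arc on a circle 75 feet across should land close to the 180-foot figure quoted above. The sketch below assumes the wall follows a perfect circular arc, which is a simplification of the real structure; the constant and function names are hypothetical, chosen only for illustration.

```python
import math

# Figures quoted in the text (season 1 of "The Mandalorian").
DIAMETER_FT = 75.0   # distance across the cylinder
ARC_DEGREES = 270.0  # how far the LED wall wraps around the stage

def arc_length(diameter: float, degrees: float) -> float:
    """Length of a circular arc: (fraction of a full turn) x (pi x diameter)."""
    return (degrees / 360.0) * math.pi * diameter

full_circle = arc_length(DIAMETER_FT, 360.0)        # if the wall closed fully
wall_length = arc_length(DIAMETER_FT, ARC_DEGREES)  # the 270-degree LED wall
gap_length = full_circle - wall_length              # the 90-degree opening

print(f"270-degree wall: {wall_length:.1f} ft")
print(f"90-degree gap:   {gap_length:.1f} ft")
```

This prints about 176.7 feet for the wall, consistent with the roughly 180-foot span reported, and about 58.9 feet for the open section.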

The physical nature of the cylinder most directly solves the problem of lighting. The video wall consists of 1,326 individual LED panels joined so that they appear seamless, with a 2.84 mm pixel pitch, meaning the distance between the centers of adjacent pixels [2]. A smaller pixel pitch means a sharper picture; for reference, the recommended viewing distance for a display with that pitch is just 16 feet [3]. This creates a remarkably clear image with plenty of wiggle room for the camera to move throughout the set. The LED wall produces little to no glare and can replace much of the lighting that each scene and its actors would otherwise require.
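To make the pixel-pitch figure concrete, the sketch below estimates how many pixels fit along the wall’s 20-foot height and its 180-foot curve. It assumes the 2.84 mm pitch is uniform across every panel and ignores seams, bezels, and the LED ceiling, so the totals are rough estimates, not published specifications; `pixels_across` is a hypothetical helper.

```python
MM_PER_FOOT = 304.8
PIXEL_PITCH_MM = 2.84  # center-to-center spacing of adjacent pixels, from the text

def pixels_across(length_ft: float, pitch_mm: float = PIXEL_PITCH_MM) -> int:
    """Whole pixels that fit along a run of wall (assumes no gaps between panels)."""
    return int(length_ft * MM_PER_FOOT // pitch_mm)

tall = pixels_across(20)     # wall height quoted in the text
around = pixels_across(180)  # length of the curved wall quoted in the text

print(f"{MM_PER_FOOT / PIXEL_PITCH_MM:.0f} pixels per foot")
print(f"{tall} x {around} pixels, about {tall * around / 1e6:.1f} million")
```

Under these assumptions the wall holds about 107 pixels per foot, or roughly 2,146 by 19,318 pixels (about 41.5 million in total), which helps explain why the image holds up even as the camera moves close to the screens.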

The sheer size and beauty of the cylinder are hardly the most impressive elements of “The Volume.” Even a big, beautiful screen still needs generated images to display during filming. These images also have to carry depth so that the backdrop of each shot looks like more than a two-dimensional wall. To accomplish this, ILM’s engineers partnered with Epic Games and its “Unreal Engine” to create adjustable imagery that appears three-dimensional [4].

“Unreal Engine” is a game engine: software used to build and design three-dimensional, strikingly realistic video games. Originally created for first-person shooter games, which required “displaying graphics in real time,” the engine draws on the computing power of modern computer hardware. It can calculate the same kind of 3D-rendered imagery and “photorealistic lighting solutions that were previously only available on traditional CPU path-traced solutions found in film and TV” [4].
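“Real time” has a concrete meaning here: each frame must finish rendering before the next one is due. The short sketch below computes that per-frame time budget. The frame rates are illustrative assumptions (24 frames per second is a common cinema rate and 60 a common game target), not figures reported for “The Volume,” and `frame_budget_ms` is a hypothetical name.

```python
def frame_budget_ms(frames_per_second: float) -> float:
    """Milliseconds available to render one frame before the next is due."""
    return 1000.0 / frames_per_second

# Illustrative frame rates (assumptions, not production figures).
for label, fps in [("24 fps, common cinema rate", 24), ("60 fps, common game target", 60)]:
    print(f"{label}: {frame_budget_ms(fps):.1f} ms per frame")
```

This prints budgets of about 41.7 ms and 16.7 ms. An offline path-traced film frame has no such deadline; a real-time engine must meet it on every frame, which is what lets the imagery update while the camera is rolling.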

In layman’s terms, engineers at ILM and Epic Games used the “Unreal Engine” software, paired with the hardware of “The Volume,” to compute and display a 3D environment that moves and reacts much like the world around a player’s character in today’s hottest video games. “Eleven interlinked computers produce the images on the wall of The Volume,” and the engine continuously renders the lighting, depth, and perspective that someone would see if that world were real [5]. For readers less familiar with these games, the software creates a world that looks the way it would through a virtual reality headset, or through the human eye. As the viewpoint moves through a room the way a person walks, the environment responds by correcting the perspective and depth of the surrounding objects and walls, creating a realistic experience.

When this technology was used to shoot “The Mandalorian,” the camera filming each scene took the place of that character’s viewpoint. For this to work, the “Unreal Engine” needed to know where the camera was, so its position was tracked by an infrared camera system from Profile Studios mounted on top of the LED screens. The infrared cameras tracked the filming camera’s position in three-dimensional (XYZ) space, and that information was sent back to the “Unreal Engine” and the ILM “Volume” to be rendered in 3D, in real time [2]. Engineers and filmmakers could then move the camera anywhere inside “The Volume” while rolling, and the screens would adapt so that the backdrop looked three-dimensional from the camera’s point of view.

Fig. 2. A generated visual showing how the infrared cameras track the filming camera in order to render the 3D backdrop from that camera’s viewpoint. Source: [5]
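The idea of rendering the backdrop “from the view of the camera” can be illustrated with a simplified geometric sketch. It assumes a flat wall at z = 0 (the real wall is curved), a tracked camera in front of it, and a virtual object behind it; the object must be drawn where the camera’s line of sight to it crosses the wall. This is not ILM’s or Epic’s actual implementation, and `Vec3` and `project_onto_wall` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float

def project_onto_wall(camera: Vec3, point: Vec3) -> tuple[float, float]:
    """Where on a flat wall at z = 0 a virtual point must be drawn so that,
    seen from `camera`, it lines up with its intended 3D position.
    Assumes the camera is in front of the wall (z < 0), the point behind it (z > 0)."""
    if not (camera.z < 0 < point.z):
        raise ValueError("camera must be in front of the wall and point behind it")
    # The sight line is camera + t * (point - camera); it crosses z = 0 at this t.
    t = -camera.z / (point.z - camera.z)
    return (camera.x + t * (point.x - camera.x),
            camera.y + t * (point.y - camera.y))

far_mountain = Vec3(0.0, 5.0, 100.0)  # meant to appear 100 ft "behind" the wall
near_crate = Vec3(0.0, 5.0, 1.0)      # meant to appear 1 ft behind the wall

for cam_x in (0.0, 10.0):             # tracking reports the camera slid 10 ft sideways
    cam = Vec3(cam_x, 5.0, -20.0)     # camera 20 ft in front of the wall
    mx, _ = project_onto_wall(cam, far_mountain)
    cx, _ = project_onto_wall(cam, near_crate)
    print(f"camera x={cam_x:4.1f}: mountain drawn at x={mx:.2f}, crate at x={cx:.2f}")
```

Sliding the camera 10 feet moves the distant mountain’s image about 8.3 feet along with it, while the nearby crate’s image moves less than half a foot. That difference in motion (parallax) is what makes a flat wall read as deep space to the lens, and it is only correct from the tracked camera’s position, which is why the camera has to be tracked at all.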

The engineers and creative filmmakers behind “The Volume” were able to solve some of the biggest problems in filmmaking, making the process more efficient. The first and most obvious benefit “The Volume” provides is control over lighting. Movies and TV shows are often very limited during production when a scene needs to be shot at a specific time of day. When a scene is set at sunset, for example, the shooting window is very small; with “The Volume,” that same sunset can be held perfectly for an entire day, or even weeks, of filming.

The need for extensive set design is also reduced because 3D objects can be rendered on the set’s own screens. In “The Mandalorian,” a scene often calls for something like a spaceship, but only the section the actor interacts with needs to be built. The rest of the ship, along with any other extravagant world a scene requires, can be rendered on the screens behind it. Because of the dimensions of “The Volume,” no physical set ever needs to span more than 75 feet.

Along with the background itself, special effects and unusual changes requested by the director can be implemented on the fly. Everything from small touches like sparks flying off machinery to a complete change of background can be added by the directors with a few pokes and drags on an iPad [6]. To further reduce the need for CGI when a scene calls for a real location, “teams of photographers photographically ‘scanned’ various locations, such as Iceland and Utah” [5]. Virtually any environment, new or existing, can therefore be filmed in one place and edited at the director’s request, saving countless dollars on travel and post-production.

Fig. 3. A moving camera captures the actors moving through the set as live effects such as sparks are played on the LED backdrop. Source: [6]

As for the filmmaking experience, “The Volume” also benefits the actors and crew by letting everyone fully understand the environment of a scene. The actors can lean into the world around them, and it keeps them from each having “a different concept of what is occurring around them” [5]. This is a substantial upgrade from actors staring at a green screen and simply imagining whatever monsters or worlds may be around them. How can anyone misunderstand the environment if they are practically standing in it? And if the actor believes the scene, the audience believes the scene.

Fig. 4. A generated wide-angle environment is displayed behind the actors as they film season 2 of “The Mandalorian.” Source: [7]

“The Volume” has since been upgraded and has proven invaluable to the production of “The Mandalorian.” According to Robert Rodriguez, one of the show’s directors, the production now films 30 to 50 percent faster than it would if green screens were still the primary method [7]. Beyond the show, “The Volume” has been used for blockbuster films such as “Thor: Love and Thunder” and “Ant-Man and the Wasp: Quantumania,” and now for films outside Disney’s scope, such as Warner Bros.’ “The Batman” [8]. The bottom line is that “The Volume” improved filmmaking by making it more versatile, more cost-effective, and, most importantly, more efficient. ILM, Epic Games, and Disney, along with a few other companies, have collaboratively reengineered the filmmaking process with “The Volume” in a beautiful and efficient way, and the film industry will likely never be the same.

References

[1] J. Favreau, The Mandalorian. Disney+, 2019.

[2] J. Holben, “This Is the Way,” American Cinematographer, pp. 15-18, 20, 22, 24, 26, 28, 30, 32. [Online]. Available: http://libproxy.usc.edu/login?url=https://www.proquest.com/magazines/this-is-way/docview/2350120747/se-2.

[3] “Recommended viewing distance & direct view LED – Planar.” [Online]. Available: https://www.planar.com/media/439462/understanding-viewing-distance.pdf. [Accessed: 07-Feb-2023].

[4] C. Kavanagh and B. Rossney, Reimagining Characters With Unreal Engine MetaHuman Creator: Elevate Your Films With Cinema Quality Character Designs and Animation. Packt Publishing, 2022.

[5] S. Researcher, “How AR and VR are changing film: A look at the revolutionary stagecraft, the volume,” AMT Lab @ CMU, 13-Jul-2021. [Online]. Available: https://amt-lab.org/blog/2021/7/how-ar-and-vr-are-changing-film-a-look-at-the-revolutionary-stagecraft-the-volume. [Accessed: 06-Feb-2023].

[6] K. Baver, “This is the way: How innovative technology immersed us in the world of the Mandalorian,” StarWars.com, 15-May-2020. [Online]. Available: https://www.starwars.com/news/the-mandalorian-stagecraft-feature. [Accessed: 06-Feb-2023].

[7] D. Coldewey, “How ‘The Mandalorian’ and ILM invisibly reinvented film and TV production,” TechCrunch, AOL Inc., New York, 2020.

[8] H. A. Lee, “Another upcoming Marvel movie is using the Mandalorian’s VFX technology,” Looper, 17-May-2021. [Online]. Available: https://www.looper.com/412918/another-upcoming-marvel-movie-is-using-the-mandalorians-vfx-technology/. [Accessed: 06-Feb-2023].