Abstract:

The engineering side of computer-generated imagery (CGI) is a cross-disciplinary area involving computer science, physics, and mathematics. The verisimilitude of CGI that so often amazes us is achieved through a variety of techniques. I will introduce four commonly used techniques in CGI production: ray tracing, physics-based animation, randomized noise, and machine learning, and show their applications in CGI artworks. With some techniques, engineers try to simulate the details of the virtual scene as closely as possible to the real world, while with others they try to create an illusion of realism that tricks the human eye. Combined, these graphics techniques create an interesting blend of engineering and art.

Today we see computer-generated imagery (CGI) almost everywhere in our lives: in films, games, magazines, advertisements, and many more applications. Sometimes we may even stare at an image or video without noticing that it is actually CGI.

Although we often give credit to “computer technology” when we are amazed by modern CGI’s verisimilitude, the engineering behind computer graphics involves not only computer science, but also physics and mathematics. In this article, I will introduce four commonly used techniques for crafting CGI and explain how computer graphics engineers apply their engineering knowledge to produce amazing artistic effects.

Before we dive into the technology, let’s do a small quiz on how sharp your eyes are at spotting whether an image is CGI. Don’t be ashamed if you find it hard to make the right choice; you will learn why your eyes are tricked by the time you finish the article. The answers are attached at the end. Have fun!

Figure 1: Vicon Siren, a digital CGI character created by Unreal Engine. [1]

CG vs. Photo Challenge

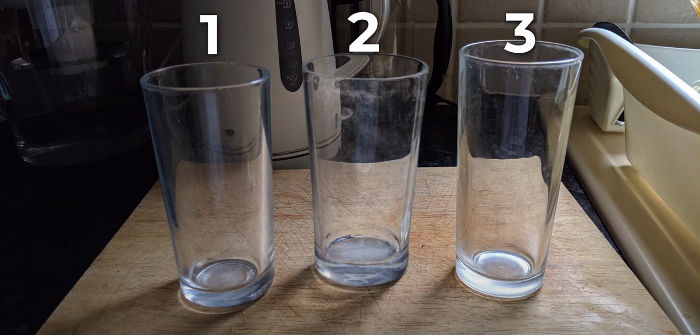

- There are two real glass cups and one CGI cup in the image below. Which one is fake?

Figure 2: Images of Three Glass Cups [2]

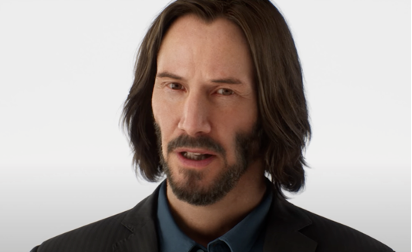

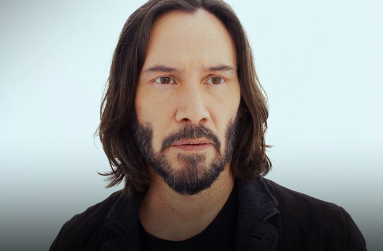

- Among the two images of Keanu Reeves, can you tell which one is the real Keanu?

Figures 3, 4: Keanu Reeves from a film footage piece and a video game trailer [3][4].

- Among the four faces below, can you tell which are real and which are fake?

Figures 5 – 8: Photos of real humans’ faces mixed with computer-generated images [5].

“Let there be light” – The Magic of Lights and Shadows

Everything in our physical world comes with lights and shadows. By looking at them, we are able to perceive the volume and dimensions of objects even in a flat picture. Back in the Renaissance, artists like Leonardo da Vinci had already discovered that proper use of highlights and shadows makes paintings more lifelike, and they developed the painting technique called chiaroscuro in an attempt to depict light and darkness accurately. However, capturing every single detail of lighting with an artist’s eyes and brushes is challenging. This is where computers take the stage and outperform humans: by strictly following the physical properties of light, machines can calculate a precise arrangement of lights and shadows and draw it on a digital screen within milliseconds.

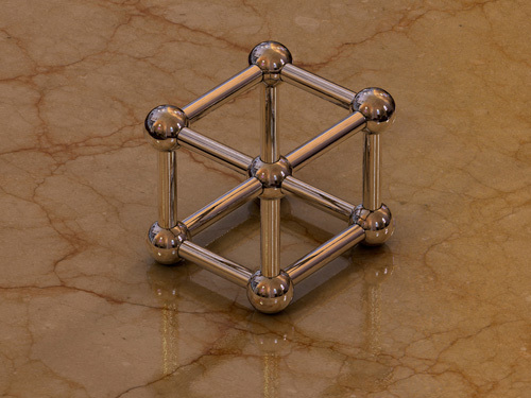

Figure 9: If you look carefully at this image, you will notice that this object could not exist in the physical world. However, because the highlights and shadows are drawn in a seemingly accurate way, this CGI creates an illusion that it is “real” [6].

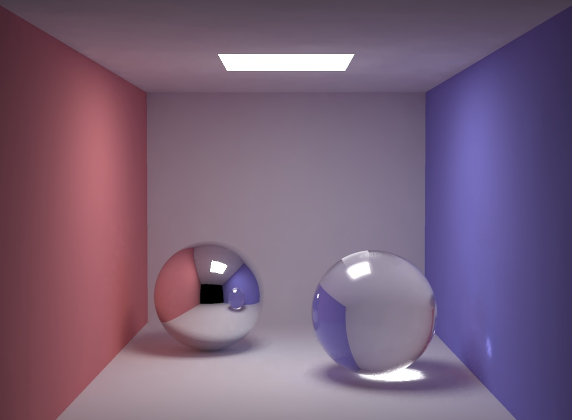

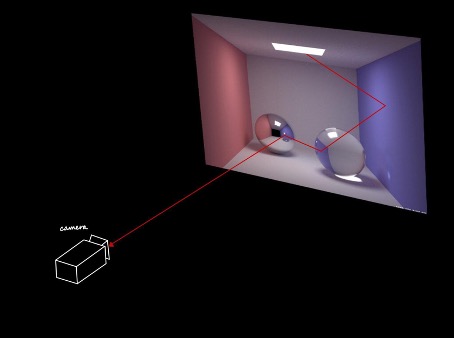

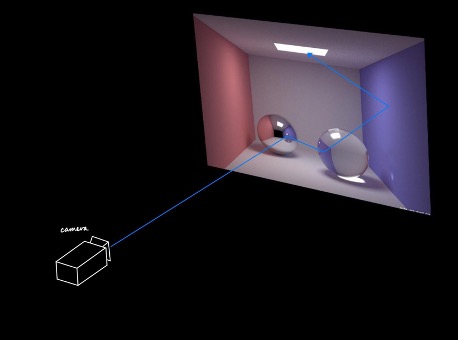

One of the primary illumination rendering techniques run by computers is called “ray tracing” [7]. Imagine that we have a virtual scene set up to look like the CGI in Figure 10, with a translucent glass ball, a reflective metal ball, walls of different colors, and a light source at the top. We also have a virtual camera that is going to “take the photo”. How does the camera capture the scene? The answer lies in the physics of light rays. The light source emits an unlimited number of light rays into the scene in all directions. Suppose we pick one of them, like the red trace shown in Figure 11. That ray leaves the light source as white light and hits the purple wall, where part of its intensity is absorbed and the rest is reflected towards the glass ball with a purple color. The ray is then refracted through the glass ball towards the metal ball, which finally reflects it towards the camera. Once you press the shutter, that light ray is captured and becomes a purple pixel in your image. This is called “forward ray tracing”, and it is also how a camera works in the physical world.

However, forward ray tracing cannot be easily implemented on a computer, because in theory any light source emits infinitely many light rays and a computer cannot carry out infinite computation. To make the computation finite, we apply another law of physics: optical paths are reversible. We can therefore reverse the process and trace each light ray’s path from the camera back to the light source, as shown in Figure 12. This technique is called “backward ray tracing”, and with it we only need to trace as many rays as there are pixels in the image. For each ray, the computer computes where it intersects the objects in the scene, the angle of the reflected or refracted ray, and whether it can reach a light source. If the ray reaches a light source, the resulting pixel will be a highlight; otherwise, it will be in shadow. By taking into account other factors such as the texture of the intersected objects and evaluating them in the rendering equation, we obtain the color of the pixel where that ray starts.
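The backward tracing loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production renderer: the scene (`SPHERES`, `LIGHT`, `CAMERA`), the helper names, and the ASCII output are all hypothetical, and reflection and refraction are left out so that only the "one ray per pixel, then check whether the light is reachable" idea remains.

```python
import math

# Hypothetical scene: spheres given as (center, radius) and one point light.
SPHERES = [((0.0, 0.0, -3.0), 1.0),       # a ball in front of the camera
           ((0.0, -101.0, -3.0), 100.0)]  # a huge sphere acting as the floor
LIGHT = (2.0, 5.0, 0.0)
CAMERA = (0.0, 0.0, 0.0)

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def scale(a, s):
    return (a[0] * s, a[1] * s, a[2] * s)

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def norm(a):
    return scale(a, 1.0 / math.sqrt(dot(a, a)))

def hit_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to the sphere, or None if it misses."""
    oc = sub(origin, center)
    b = 2.0 * dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - 4.0 * c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in ((-b - root) / 2.0, (-b + root) / 2.0):
        if t > 1e-4:  # small offset so a surface does not shadow itself
            return t
    return None

def nearest_hit(origin, direction):
    """Closest intersection as (distance, sphere center), or None."""
    best = None
    for center, radius in SPHERES:
        t = hit_sphere(origin, direction, center, radius)
        if t is not None and (best is None or t < best[0]):
            best = (t, center)
    return best

def trace(direction):
    """Brightness in [0, 1] seen along one ray leaving the camera."""
    hit = nearest_hit(CAMERA, direction)
    if hit is None:
        return 0.0  # the ray leaves the scene: dark background
    t, center = hit
    point = add(CAMERA, scale(direction, t))
    normal = norm(sub(point, center))
    to_light = sub(LIGHT, point)
    dist = math.sqrt(dot(to_light, to_light))
    light_dir = scale(to_light, 1.0 / dist)
    blocker = nearest_hit(point, light_dir)
    if blocker is not None and blocker[0] < dist:
        return 0.05  # something sits between the point and the light: shadow
    return max(0.0, dot(normal, light_dir))  # simple diffuse shading

def render(width=40, height=20):
    """Trace exactly one ray per pixel and return rows of ASCII shades."""
    rows = []
    for j in range(height):
        row = ""
        for i in range(width):
            x = (i + 0.5) / width * 2 - 1   # pixel position on an image
            y = 1 - (j + 0.5) / height * 2  # plane placed at z = -1
            brightness = trace(norm((x, y, -1.0)))
            row += " .:-=+*#%@"[min(9, int(brightness * 10))]
        rows.append(row)
    return rows

if __name__ == "__main__":
    print("\n".join(render()))
```

Running the script prints a small ASCII picture of a lit ball casting a shadow on the floor. The amount of work grows with the number of pixels rather than with the number of rays leaving the light, which is the point of tracing backward.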

This idea may not sound too complicated, but it is far too much work for a human: drawing a picture at 2560 × 1440 resolution requires tracing one ray for each of its roughly 3.7 million pixels. Even if you could finish one pixel every second, it would still take you about one and a half months to complete. This is no longer a burden for modern computers equipped with graphics processing units (GPUs), most of which can render more than 24 frames per second of 2K CGI for a complicated scene with millions of triangles [8]. Ray tracing not only tricks our eyes by producing physically accurate lights and shadows, but also saves artists from painstakingly drawing every single brushstroke of chiaroscuro.
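The back-of-the-envelope numbers above are easy to check. The short sketch below only redoes the arithmetic from the paragraph; the variable names are illustrative, and the one-pixel-per-second pace and the 24 frames per second are the figures quoted in the text.

```python
width, height = 2560, 1440
pixels = width * height                  # one ray per pixel
seconds_per_day = 24 * 60 * 60

# A human finishing one pixel every second, working around the clock.
days_by_hand = pixels / seconds_per_day

# A renderer producing 24 such frames every second.
rays_per_second = pixels * 24

print(f"{pixels:,} pixels per frame")
print(f"{days_by_hand:.1f} days by hand")
print(f"{rays_per_second:,} primary rays per second at 24 fps")
```

At one ray per pixel, 24 frames per second corresponds to tens of millions of primary rays every second, before counting any reflected, refracted, or shadow rays.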

Figure 10: An example of a ray-tracing scene [9].

Figures 11, 12: Forward (red) and backward (blue) tracing of a single light ray.

Newton’s Laws Are Never Old – Physics-Based Animation

The proper setup of illumination makes a static CGI look realistic to our eyes, but to make an animated CGI video appear lifelike, graphics engineers and artists also need to make sure it follows the laws of physics. Imagine you are watching a video of a ball falling to the ground. If the ball falls at a constant speed and stops as soon as it touches the ground, you can immediately tell it is fake; if instead the ball accelerates as it falls and bounces a couple of times after hitting the ground, you will be inclined to think it looks real. In the CGI industry, the laws of physics are more than validation rules that help artists check whether the graphics look right; they are a driving force behind how the animation is created, an approach known as “physics-based animation”. Unlike traditional animators, who have to draw by hand how objects move in their films, CGI artists let the virtual objects interact with each other in their rendering software and record their motions with the virtual camera.

As an example, let’s say we want to reproduce the effect of Agent Smith being punched in the face in the film The Matrix Revolutions. Using histological knowledge of skin and muscle, graphics engineers can model the face as a mass-spring system. They treat pieces of skin tissue as vertices with physical properties such as mass, and the muscles as edges with spring coefficients that connect those vertices. Around 600 such skin and muscle elements are enough for a mass-spring system that produces satisfactory facial animation [10]. The animators set up Agent Smith’s idle facial expression so that the forces between skin vertices are in equilibrium. To generate the punch, we add a 3D fist model to the scene and give it a certain velocity and force directed towards Smith’s face. Once the two collide, some of the skin vertices on Smith’s face are displaced, breaking the equilibrium of the mass-spring system. The physics engine then handles all the calculations needed to update the position of each skin vertex and the forces between them over time, which, at the most basic level, means solving a set of equations built from Newton’s Second Law (F = ma) and Hooke’s Law (F = -kx). By capturing the simulated skin vertices at each time frame, we get a realistic animation of Agent Smith’s facial muscles distorting right after the punch.

Figure 13: An image of Agent Smith getting punched in The Matrix Revolutions. This effect is created by CGI [11].
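To make the combination of F = ma and F = -kx concrete, here is a heavily simplified sketch: a one-dimensional chain of five point masses joined by springs stands in for the roughly 600-element facial mesh. The function name `simulate`, the parameter values, the damping term, and the choice of a semi-implicit Euler time step are all assumptions made for illustration, not details taken from the film's production or from [10].

```python
def simulate(positions, rest_length, k=50.0, mass=1.0, damping=2.0,
             dt=0.01, steps=2000):
    """Simulate a chain of point masses on a line; both end points are pinned.

    Returns a list of frames, each frame being the list of positions.
    """
    x = list(positions)
    v = [0.0] * len(x)
    frames = [list(x)]
    for _ in range(steps):
        force = [0.0] * len(x)
        for i in range(len(x) - 1):
            # Hooke's law: the spring pulls or pushes in proportion to
            # how far it is stretched beyond its rest length.
            stretch = (x[i + 1] - x[i]) - rest_length
            force[i] += k * stretch
            force[i + 1] -= k * stretch
        for i in range(1, len(x) - 1):    # the two end points never move
            force[i] -= damping * v[i]    # friction so the motion dies out
            a = force[i] / mass           # Newton's second law: a = F / m
            v[i] += a * dt                # semi-implicit Euler integration
            x[i] += v[i] * dt
        frames.append(list(x))
    return frames

if __name__ == "__main__":
    # Equilibrium would be [0, 1, 2, 3, 4]; the "punch" displaces the
    # middle vertex to 2.5 before the simulation starts.
    frames = simulate([0.0, 1.0, 2.5, 3.0, 4.0], rest_length=1.0)
    for step in (0, 10, 50, 200, 2000):
        print(step, [round(p, 3) for p in frames[step]])
```

Each entry of `frames` plays the role of one captured time frame: the displaced vertex drags its neighbors along, the chain wobbles, and the damping brings it back to its resting shape.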

Make it Imperfect – The Seasoning of Random Noise

People can often recognize that an image is CGI not because it is poorly made, but because it looks too perfect: symmetric, unwrinkled, uniform, and spotless. Our brains tend not to believe that perfect objects could exist in the real world. To counter this, CGI artists and engineers intentionally make textures imperfect, for example by adding pores or freckles to skin, grains to soil, and cracks to rocks. Creating every tiny detail by hand would be a painstaking job for a human artist, but random noise algorithms can be a big help by generating millions of such little imperfections within milliseconds.

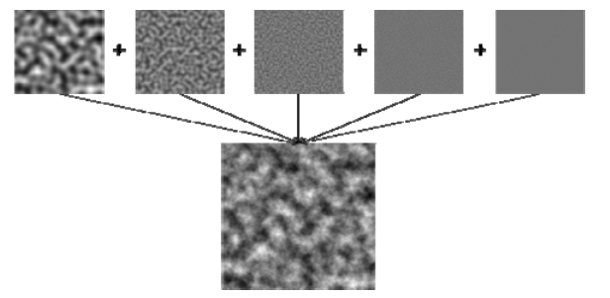

Perlin noise is one of the most commonly used noise algorithms for realistic CGI textures. To understand how it works, we must first learn the objectives of a noise algorithm. First, create random data: for example, an image in which we cannot observe any obvious repetition. Second, make sure the transition between data points is smooth: it is easy to give a random color to every single pixel of a drawing, but the resulting image will be a mess. Instead, we want the transition from pixel to pixel to be smooth.

To achieve these objectives, the Perlin noise algorithm first generates multiple random datasets at different scales; an analogy is drawing several completely random images on sheets of paper of different sizes. It then smooths every random dataset by performing “interpolation”. In essence, if one part of an image is white and another part is black, the area between them should be gray: the closer a point is to the white area, the lighter it is, and the farther away, the darker. Finally, the algorithm merges all the interpolated datasets. For example, if a pixel is white in image 1, black in image 2, and black in image 3, the corresponding pixel in the resulting image will be dark gray. The combined image, though randomly generated, retains some degree of pattern [12]. By adjusting the parameters of the Perlin noise algorithm, artists can shape that pattern to resemble different textures such as skin, wood, cloth, or rock.
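The three steps above (random data at several scales, interpolation, merging) can be sketched as follows. The sketch follows the description in this article literally: it interpolates random values on coarse grids, which is strictly speaking closer to what is usually called value noise with several octaves, whereas classic Perlin noise interpolates random gradients. The function names, the grid sizes, and the halving weights are assumptions chosen for illustration.

```python
import random

def smooth_layer(size, cells, rng):
    """One random dataset: random values on a coarse grid of
    (cells + 1) x (cells + 1) points, bilinearly interpolated
    up to a size x size image with values in [0, 1]."""
    grid = [[rng.random() for _ in range(cells + 1)]
            for _ in range(cells + 1)]
    layer = []
    for y in range(size):
        gy = y * cells / size
        y0 = int(gy)
        ty = gy - y0
        row = []
        for x in range(size):
            gx = x * cells / size
            x0 = int(gx)
            tx = gx - x0
            # Blend the four surrounding grid values: the closer a pixel
            # is to a grid point, the more that point's value counts.
            top = grid[y0][x0] * (1 - tx) + grid[y0][x0 + 1] * tx
            bottom = grid[y0 + 1][x0] * (1 - tx) + grid[y0 + 1][x0 + 1] * tx
            row.append(top * (1 - ty) + bottom * ty)
        layer.append(row)
    return layer

def fractal_noise(size=64, octaves=4, seed=0):
    """Merge several smoothed layers of increasing detail into one image."""
    rng = random.Random(seed)
    total = [[0.0] * size for _ in range(size)]
    weight_sum = 0.0
    for octave in range(octaves):
        cells = 2 ** (octave + 1)  # each layer uses a finer random grid
        weight = 0.5 ** octave     # finer layers contribute less
        layer = smooth_layer(size, cells, rng)
        for y in range(size):
            for x in range(size):
                total[y][x] += weight * layer[y][x]
        weight_sum += weight
    # Weighted average of the layers, so the result stays in [0, 1].
    return [[value / weight_sum for value in row] for row in total]

if __name__ == "__main__":
    image = fractal_noise(size=32, octaves=3, seed=42)
    for row in image[::2]:
        print("".join(" .:-=+*#%@"[min(9, int(v * 10))] for v in row))
```

Changing `octaves`, the grid sizes, or the weights changes the character of the pattern, which is the kind of parameter tuning artists use to steer the result towards one texture or another.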

Figure 14: The Perlin Noise algorithm generates a granular wool texture [12].

The Frontier of CGI – Use of Machine Learning Models

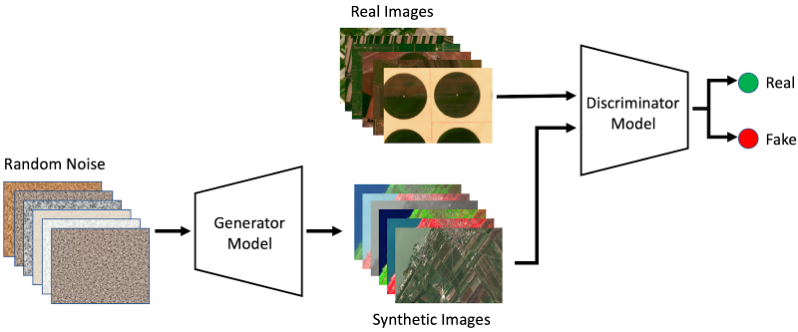

In the future, it may even be possible to have AI create CGI without any artists involved: machine learning models can learn from existing photos and artworks and create similar ones. One of the most successful methods is the generative adversarial network (GAN).

In order for AI to draw CGI that tricks human eyes, the AI itself must be able to tell whether an image looks real. Computer scientists therefore design a GAN as two adversarial machine learning models: a generator model works as an artist that draws images, and a discriminator model works as a judge that evaluates whether an image looks real. When engineers train the GAN by feeding it a set of artworks or photos, both the artist and the judge evolve: the judge learns to distinguish between realistic and artificial images, and the artist learns how to create images that trick the judge. The training stops when neither the artist nor the judge can learn anything new.
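The contest between artist and judge is commonly written as a single minimax objective. The notation below is not from this article and is introduced only for illustration: $G$ is the generator (the artist), $D$ is the discriminator (the judge), $x$ is a real image drawn from the training data, $z$ is the random input given to the generator, and $D(\cdot)$ is the judge's estimated probability that an image is real.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The judge tries to make this value large by scoring real images near 1 and generated images near 0, while the artist tries to make it small by producing images the judge scores near 1. In the idealized case where neither side can improve, the judge can do no better than answering $D = 1/2$ for every image, which matches the stopping point described above.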

Although the idea sounds cool, machine learning methods were not applied in the CGI industry until recently. Because both the artist and the judge models are usually implemented as neural networks, they are essentially black boxes to humans, and engineers in the past could neither understand nor control how a machine learning model creates an image. With recent research breakthroughs in machine learning, however, engineers have come up with techniques that train the GAN model to learn not only the images but also their styles, such as people’s ages and genders or a scene’s lighting and color tones [13]. It is now possible to ask the model to create images in a desired style by supplying a list of parameters, for example: “age 24”, “male”, “R10G100B10”. The model may then respond with a young man standing on green grassland. So even though it is still unclear how neural networks perceive images, we can use them to draw CGI according to our needs.

The GAN model opens up more possibilities for machine learning to play a greater role in the future CGI industry. The third question in the CG vs. Photo Challenge section mixes photos of real people with GAN-generated portraits. Refer back to them to see how skilled AI artists can be!

Figure 15: A diagram showing the process of a GAN model.

The generator is what we called the “artist” and the discriminator is what we called the “judge” [14].

Conclusion

Whenever our eyes are tricked by realistic CGI art, there are engineers and scientists working behind the scenes to come up with sophisticated graphics techniques. These techniques span art, physics, mathematics, and, more recently, machine learning. Although CGI already looks indistinguishable from reality to many of us, engineers are still working to make the techniques suit different styles and become available for more applications. Let’s keep our expectations high and see how our eyes will be amazed by CGI in the future!

CG vs. Photo Challenge Answers

- The 2nd glass cup is CGI.

- The image on the right is the real Keanu Reeves from the film The Matrix Resurrections. The image on the left is CGI from the game trailer The Matrix Awakens.

- Images 2 and 3 are photos of real people and images 1 and 4 are generated by a machine learning model.

References

[1] Unreal Engine, “Siren Real-Time Performance | Project Spotlight | Unreal Engine” [Online]. Available: https://www.youtube.com/watch?v=9owTAISsvwk&ab_channel=UnrealEnginex

[2] ProductionCrate, “CAN YOU SPOT THE FAKE? (CGI vs. YOU).” [Online]. Available: https://www.youtube.com/watch?v=SSUNlCcNOzA.

[3] The Matrix Resurrections. Performed by Reeves, Keanu.

[4] Unreal Engine. The Matrix Awakens: An Unreal Engine 5 Experience.

[5] S. J. Nightingale and H. Farid, “AI-synthesized faces are indistinguishable from real faces and more trustworthy,” PNAS, Feb. 2022. [Online]. Available: https://www.pnas.org/doi/full/10.1073/pnas.2120481119.

[6] J. Sommers, “Josh Sommers’ CG Impossible Objects,” 2012, [Online]. Available: https://chaowlah.wordpress.com/2012/10/30/josh-sommers-cg-impossible-objects/.

[7] A. Appel, “Some techniques for shading machine renderings of solids,” Proceedings of the April 30–May 2, 1968, spring joint computer conference on – AFIPS ’68 (Spring), Apr. 1968.

[8] A. Marrs, P. Shirley, and I. Wald, Ray Tracing Gems II: Next Generation Real-Time Rendering with DXR, Vulkan, and OptiX. New York, NY: Apress Open, 2021.

[9] H. W. Jensen, “Global Illumination Images by Henrik Wann Jensen,” Stanford Computer Graphics Laboratory. [Online]. Available: http://graphics.stanford.edu/~henrik/images/cbox.html

[10] Y. Lee, D. Terzopoulos, and K. Waters, “Realistic modeling for facial animation,” SIGGRAPH ’95: Proceedings of the 22nd Annual Conference on Computer Graphics and Interactive Techniques, Sep. 1995, pp. 55–62.

[11] The Matrix Revolutions. Performed by Weaving, Hugo.

[12] T, Andrei. “Perlin noise in Real-time Computer Graphics,” 2008

[13] T. Karras, S. Laine, and T. Aila, “A style-based generator architecture for generative adversarial networks,” 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

[14] A. Hamed, “Using Generative Adversarial Networks to Address Scarcity of Geospatial Training Data”, Oct. 2020, [Online]. Available: https://medium.com/radiant-earth-insights/using-generative-adversarial-networks-to-address-scarcity-of-geospatial-training-data-e61cacec986e