Abstract

Much of modern music production relies on the synthesizer, an electronic musical instrument that generates waveforms to create a rich variety of sounds. This essay explores how synthesizers generate these sounds. We start from the very basics and discuss the science of sound, from its wave-like properties to the parameters that describe a sound wave. Then, we connect these concepts to the physical implementation of a synthesizer, discussing its many features and what can be done with them. Lastly, we introduce real-life examples of its use in music.

Introduction

Many of us have heard some of the classic pop songs of the 1980s, like Madonna’s Holiday, The Human League’s Don’t You Want Me, or Eurythmics’ Sweet Dreams. Besides all being timeless classics, these songs share many more similarities. Each has a rhythmic beat, a catchy, quirky intro, and, perhaps most significantly, each relies on the synthesizer to create its music. A synthesizer, in simple terms, is a musical instrument that produces a variety of custom sounds, and it plays a critical role in music production today. Invented in the mid-20th century, synthesizers became widely popular in the 1980s and helped bring electronic music, synth-pop, techno, and much more to the music world. These devices have very complex functionalities, but in this article, I break them down into the fundamentals of how they work, from basic sound principles to the crazy effects synthesizers can create.

Science of Sound

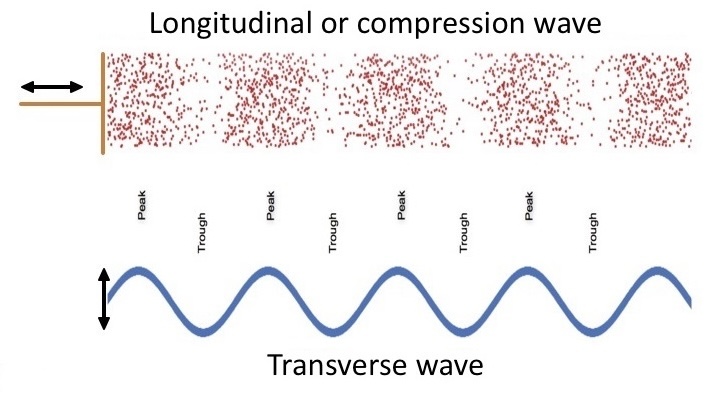

Before understanding how synthesizers function, it is important to know exactly what sound is. Imagine the ripples that spread across a pond when you throw a rock into it. Sound waves are very similar, except that instead of ripples traveling across the water’s surface, pressure waves travel through the air: the peaks of the wave represent higher-pressure air and the troughs represent lower-pressure air [1]. We can easily visualize these air compression waves using a spring. A disturbance, which corresponds to the initial push on the spring, squeezes a bunch of air molecules together (the scrunched-up part of the spring), forming a region of high pressure. These molecules push on their neighbors, which push on theirs, so the high-pressure pocket propagates outward even though each molecule only moves back and forth a tiny distance, until the wave eventually reaches the ear. Traveling through the ear canal, the pressure wave reaches the tympanic membrane, commonly known as the eardrum, and causes it to vibrate [2]. The inner ear converts these vibrations into nerve signals, which our brains interpret as sound.

Figure 1: Compression wave traveling across spring [1]

Short disturbances, such as a tap on the table, form a simple pulse that is heard as a sudden, brief sound. However, most of the sounds we hear every day, such as music or speech, are not single pulses but more complex series and patterns of them. Figure 2 illustrates this well: we can see a series of regions of higher pressure across time (the more densely packed red dots). This information can be represented more clearly as a waveform, in this case a sinusoidal wave whose peaks correspond to higher pressure and whose troughs correspond to lower pressure. Sinusoids help us understand certain properties of sound that a synthesizer can then manipulate.

Figure 2: Sinusoidal Representation of Sound Wave [3]

In particular, we can look at amplitude and frequency. The amplitude of a wave is the height of its peaks above the resting level. Higher-amplitude waves carry more energy, resulting in a louder sound [4]. Frequency, perhaps the more magical of the two properties, is the number of peaks that pass a point each second, measured in hertz (Hz). It is the inverse of the period, the time between one peak and the next: when peaks are closer together in time, they occur at a greater frequency. Higher frequencies mean a note has a higher pitch. As a real-life example, the middle C note has a frequency of about 261.6 hertz, which means about 261 pressure peaks hit our ear every second. Meanwhile, the A a few notes above it (A4) has a frequency of 440 hertz.
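
To make these numbers concrete, here is a minimal Python sketch. It assumes standard equal-tempered tuning with A4 fixed at 440 Hz, uses MIDI note numbers (middle C = 60, A4 = 69) as a convenient way to number keys, and samples the sine wave p(t) = A·sin(2πft) at an assumed rate of 44,100 samples per second. The function names are hypothetical, chosen for illustration.

```python
import math

SAMPLE_RATE = 44_100  # samples per second (assumed CD-quality rate)


def note_to_frequency(midi_note: int) -> float:
    """Equal-tempered pitch in Hz, with A4 (MIDI note 69) tuned to 440 Hz."""
    return 440.0 * 2 ** ((midi_note - 69) / 12)


def sine_wave(amplitude: float, frequency: float, duration: float) -> list[float]:
    """Sample p(t) = amplitude * sin(2*pi*frequency*t) for `duration` seconds."""
    n_samples = int(SAMPLE_RATE * duration)
    return [amplitude * math.sin(2 * math.pi * frequency * n / SAMPLE_RATE)
            for n in range(n_samples)]


middle_c = note_to_frequency(60)  # MIDI note 60 is middle C (C4)
a4 = note_to_frequency(69)
# The period (time between peaks) is the inverse of the frequency.
print(f"Middle C: {middle_c:.2f} Hz, period {1000 / middle_c:.2f} ms")
print(f"A4: {a4:.2f} Hz, period {1000 / a4:.2f} ms")

# Same pitch, different loudness: only the height of the peaks changes.
quiet_c = sine_wave(amplitude=0.2, frequency=middle_c, duration=0.01)
loud_c = sine_wave(amplitude=0.8, frequency=middle_c, duration=0.01)
```

Running it reports middle C at about 261.63 Hz (a period of about 3.82 ms) and A4 at 440.00 Hz (about 2.27 ms). In this tuning, moving up 12 keys doubles the frequency.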

One amazing thing about waves of different frequencies is that they can be added together to form a new wave containing multiple frequencies. While a wave of only one frequency sounds like a single tone, or musical note, a wave of several frequencies may sound like a musical chord (multiple notes played together), adding depth and texture to music. With enough sine waves combined, we can in theory produce any waveform and, therefore, any sound. We can also reverse the process and decompose any complex wave into simple waves of distinct frequencies. This is done with a mathematical tool called the Fourier Transform, which measures how strongly each frequency is present in a signal; in practice it is usually computed with an efficient algorithm called the Fast Fourier Transform (FFT) [5]. A good analogy is identifying the different ingredients in a complicated dish by tuning into specific textures and flavors. The Fourier Transform is extremely useful because it lets us isolate specific pitch ranges to modify, whether that means making them louder or distorting them in some way.
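
The compose-then-decompose idea can be sketched in a few lines of Python. This example adds a 440 Hz tone and a quieter 660 Hz tone, then uses a naive discrete Fourier transform to recover which frequencies are present and how strong each one is. It is written for clarity rather than speed; real tools compute the same result with an FFT. The sample rate, window length, and function names are assumptions for illustration.

```python
import cmath
import math

SAMPLE_RATE = 8_000  # samples per second (assumed, kept small for speed)
N = 800              # analyze 0.1 s of audio, so frequency bins are 10 Hz apart


def dft_magnitudes(samples: list[float]) -> list[float]:
    """Naive discrete Fourier transform: amplitude found at each bin frequency."""
    n = len(samples)
    mags = []
    for k in range(n // 2):  # upper bins mirror the lower ones for real input
        total = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(samples))
        mags.append(2 * abs(total) / n)  # scaled so a sine of amplitude A gives A
    return mags


# Compose: add a 440 Hz tone (amplitude 1.0) and a 660 Hz tone (amplitude 0.5).
t = [i / SAMPLE_RATE for i in range(N)]
chord = [1.0 * math.sin(2 * math.pi * 440 * ti) + 0.5 * math.sin(2 * math.pi * 660 * ti)
         for ti in t]

# Decompose: find which frequencies are present and how strongly.
mags = dft_magnitudes(chord)
bin_hz = SAMPLE_RATE / N
for k, m in enumerate(mags):
    if m > 0.1:
        print(f"{k * bin_hz:.0f} Hz: amplitude {m:.2f}")
```

Only two lines are printed, "440 Hz: amplitude 1.00" and "660 Hz: amplitude 0.50", matching the two ingredients that went into the mix. Both tones complete a whole number of cycles in the 0.1 s window, which keeps the peaks clean; with arbitrary frequencies the energy spreads into neighboring bins.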

Figure 3: Different instruments playing Middle C [6]

Amplitude and frequency are the two most fundamental qualities of sound that synthesizers control, but there are others, one of which is timbre. Timbre is best described as the color of a sound, or the shape of its waveform, and it is unique to every instrument and musical device. Plucking a guitar gives a smooth, velvety sound, while blowing into a baritone or trumpet produces a rougher, more staticky one. These differences arise because each instrument adds different amounts of higher frequencies (overtones) on top of the note being played, which changes the texture of the sound [6]. Timbre is primarily determined by the specific shape and material of the instrument, as sound can bounce off or be absorbed by it in different ways.
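
One way to see timbre as "different amounts of higher frequencies" is additive synthesis: build two tones with the same fundamental (middle C) but different overtone strengths. The harmonic recipes below are hypothetical, and the "overtone energy share" is a simple illustrative measure rather than a standard timbre metric; real instruments have spectra that change from note to note.

```python
import math

SAMPLE_RATE = 44_100  # samples per second (assumed)
FUNDAMENTAL = 261.63  # middle C, in Hz


def additive_tone(harmonic_amps: list[float], n_samples: int) -> list[float]:
    """Sum sine waves at 1x, 2x, 3x, ... the fundamental with the given amplitudes."""
    return [sum(a * math.sin(2 * math.pi * FUNDAMENTAL * (h + 1) * n / SAMPLE_RATE)
                for h, a in enumerate(harmonic_amps))
            for n in range(n_samples)]


def overtone_energy_share(harmonic_amps: list[float]) -> float:
    """Fraction of the tone's energy that lies above the fundamental."""
    energies = [a * a for a in harmonic_amps]
    return sum(energies[1:]) / sum(energies)


# Hypothetical recipes: same pitch, different mix of overtones.
mellow = [1.0, 0.2, 0.05]                # mostly fundamental
bright = [1.0, 0.7, 0.6, 0.5, 0.4, 0.3]  # strong overtones
recipes = {"mellow": mellow, "bright": bright}

tones = {name: additive_tone(r, n_samples=441) for name, r in recipes.items()}
for name, r in recipes.items():
    print(f"{name}: {overtone_energy_share(r):.0%} of energy in overtones")
```

Both tones repeat at the same rate, so they have the same pitch; the brighter recipe puts far more of its energy into overtones, which changes the waveform's shape and therefore its timbre.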

Now, knowing what characteristics are used to describe sound, how can a synthesizer manipulate them?

Figure 4: Composition and decomposition of sine waves: combining sinusoids to create complex waves (left) and splitting a complex wave into its frequency components (right) [5]

Using a synthesizer:

The science highlighted above describes sound at the most physical level, but in this section, we will discuss how a synthesizer applies engineering tools to achieve different sounds. Figure 5 shows what a typical synthesizer looks like. Notice the top section, which has an array of knobs for adjusting various characteristics and effects, such as timbre or reverb, and the keyboard, where we can choose a note or notes of specific pitches.

Figure 5: A typical synthesizer [7]

Let’s first discuss how the keyboard generates sound. A note is produced whenever we press a key on the keyboard. As mentioned earlier, sound is just a repeating wave of some shape. To create that wave, a synthesizer uses an oscillator, an electronic circuit that produces a steadily repeating signal [8]. When we press a specific key, the synthesizer sends a specific control signal (in analog synthesizers, a voltage) to the oscillator, setting how fast it oscillates and thus the pitch of the note. A simple oscillator may produce a sinusoidal waveform, but when combined with other circuitry or hardware, the wave can be shaped into a triangle, square, or some combination of these waves. This changes the timbre: a triangle wave gives a hollow, mellow sound, while a square wave is much richer and buzzier. We can select these parameters in the “OSCILLATORS” section of the knobs. We can even combine multiple waveforms, as many synthesizers have at least two built-in oscillators (OSC1 and OSC2).
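
The key-to-oscillator chain can be sketched digitally in Python. This is an assumption-laden model rather than how any particular synthesizer is built: an analog synthesizer does the same job with circuitry, and the function names, equal-tempered tuning, and 50/50 mix of OSC1 and OSC2 are hypothetical choices. The oscillator advances a phase through each cycle and maps it to a sine, triangle, or square shape.

```python
import math

SAMPLE_RATE = 44_100  # samples per second (assumed)


def oscillator(shape: str, frequency: float, n_samples: int) -> list[float]:
    """Naive digital oscillator: advance a phase in [0, 1) and map it to a shape."""
    out = []
    phase = 0.0
    step = frequency / SAMPLE_RATE  # fraction of a cycle completed per sample
    for _ in range(n_samples):
        if shape == "sine":
            value = math.sin(2 * math.pi * phase)
        elif shape == "triangle":
            value = 1.0 - 4.0 * abs(phase - 0.5)  # -1 at phase 0, +1 at phase 0.5
        elif shape == "square":
            value = 1.0 if phase < 0.5 else -1.0
        else:
            raise ValueError(f"unknown shape: {shape}")
        out.append(value)
        phase = (phase + step) % 1.0
    return out


def key_to_frequency(midi_note: int) -> float:
    """Equal-tempered pitch with A4 (MIDI note 69) = 440 Hz."""
    return 440.0 * 2 ** ((midi_note - 69) / 12)


# Press middle C (MIDI note 60) and mix two oscillators, like OSC1 + OSC2.
freq = key_to_frequency(60)
osc1 = oscillator("triangle", freq, 1000)
osc2 = oscillator("square", freq, 1000)
mix = [0.5 * a + 0.5 * b for a, b in zip(osc1, osc2)]
```

The sharp corners of these naive triangle and square shapes produce unwanted high-frequency artifacts (aliasing) at higher pitches, so production-quality digital oscillators typically use band-limited techniques instead.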

Figure 6: Analog vs Digital Sound wave [9]

Some synthesizers produce oscillating waves in another way: instead of using an analog oscillator circuit, they compute the wave mathematically as a series of discrete steps (samples) that approximate its shape. This digital, or discrete, representation of a wave can be visualized as building the wave out of fixed-size blocks rather than drawing it freehand. See Figure 6 for an illustration. Because of these differences, digital synthesizers are often described as sounding less warm and fuzzy than their counterparts, analog synthesizers [9]. On the other hand, they can achieve crisper sounds and more distorted-sounding effects. Changing the volume, frequency, and timbre on a digital synthesizer is simply a matter of adjusting the math function in its processor, which allows for much more creativity and variety of waveforms. These differences are internal, so from a user’s standpoint, the knobs and parameters to adjust are the same.
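
The "fixed-size blocks" picture corresponds to sampling (measuring the wave at discrete times) and quantization (rounding each measurement to one of a fixed set of levels). The Python sketch below stores the same 440 Hz sine wave with 3 bits (8 levels, visibly blocky) and with 16 bits (65,536 levels, the resolution used on audio CDs). The low sample rate and function names are assumptions for illustration.

```python
import math

SAMPLE_RATE = 8_000  # samples per second (assumed, low for illustration)


def quantize(value: float, bits: int) -> float:
    """Round a value in [-1, 1] to the nearest of 2**bits evenly spaced levels."""
    steps = 2 ** bits - 1  # number of gaps between -1 and +1
    index = round((value + 1) / 2 * steps)
    return index / steps * 2 - 1


def digital_sine(frequency: float, n_samples: int, bits: int) -> list[float]:
    """Sample a sine wave at discrete times and store each sample with `bits` bits."""
    return [quantize(math.sin(2 * math.pi * frequency * n / SAMPLE_RATE), bits)
            for n in range(n_samples)]


coarse = digital_sine(440.0, n_samples=50, bits=3)  # only 8 possible levels: blocky
fine = digital_sine(440.0, n_samples=50, bits=16)   # 65,536 levels: nearly smooth
print(sorted(set(coarse)))
```

With 3 bits, every stored sample can be off from the true wave by up to half a level spacing (1/7 here); with 16 bits the error is tiny, which is one reason digital audio can sound smooth despite being built from steps.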

Now, we can look to the “FILTER” section of the synthesizer for additional effects. One of the big ideas discussed in the Science of Sound section is that waveforms can be separated into different frequencies, allowing us to manipulate specific pitches of sound. Frequency filters put this idea into practice. One may have heard E.T. by Katy Perry, where the bass drums boom in the foreground throughout the song. This effect can be achieved through low-pass filtering, where lower frequencies (pitches) pass through the filter while higher ones are blocked. We can then amplify the filtered sound to emphasize the bass part. On the opposite side is high-pass filtering, where higher pitches are kept while lower frequencies are blocked [10]. This can be useful for removing undesirable low humming noises in the background, letting vocals and other higher-pitched sounds stand out more.
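
A minimal Python sketch of both filter types, assuming the simplest digital design, a one-pole low-pass filter (a digital version of a resistor-capacitor circuit). The high-pass output is taken as "the input minus the low-passed part". Real synthesizer filters are usually steeper; the 200 Hz cutoff, test tones, and function names are hypothetical.

```python
import math

SAMPLE_RATE = 44_100  # samples per second (assumed)


def low_pass(samples: list[float], cutoff_hz: float) -> list[float]:
    """One-pole low-pass filter: each output moves part of the way toward the input."""
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    dt = 1.0 / SAMPLE_RATE
    alpha = dt / (rc + dt)  # smoothing factor between 0 and 1
    out, y = [], 0.0
    for x in samples:
        y += alpha * (x - y)  # too sluggish to follow fast (high-frequency) wiggles
        out.append(y)
    return out


def high_pass(samples: list[float], cutoff_hz: float) -> list[float]:
    """High-pass as the complement: whatever the low-pass removed."""
    return [x - y for x, y in zip(samples, low_pass(samples, cutoff_hz))]


def sine(freq: float, n: int) -> list[float]:
    return [math.sin(2 * math.pi * freq * i / SAMPLE_RATE) for i in range(n)]


def peak(samples: list[float]) -> float:
    """Largest amplitude after the filter has settled (skip the first half)."""
    return max(abs(s) for s in samples[len(samples) // 2:])


bass, hiss = sine(60.0, 44_100), sine(8_000.0, 44_100)
print(f"60 Hz through low-pass:  {peak(low_pass(bass, 200.0)):.2f}")
print(f"8 kHz through low-pass:  {peak(low_pass(hiss, 200.0)):.2f}")
print(f"60 Hz through high-pass: {peak(high_pass(bass, 200.0)):.2f}")
```

With a 200 Hz cutoff, the 60 Hz "bass" tone passes through the low-pass filter almost unchanged while the 8 kHz tone is reduced to a few percent of its level; the high-pass filter does roughly the reverse.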

Just as waves can be added together, filters can be combined. For example, in Figure 7, a low-pass and a high-pass filter are combined to form a band-pass filter, which only allows frequencies within a certain range to pass. One use of band-pass filters is to create a “telephone effect,” which keeps only the frequency range of the human voice to give the effect of speaking over a phone [11].
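
Chaining a high-pass and a low-pass filter gives a band-pass filter. This Python sketch assumes the same simple one-pole filters as above and uses roughly 300 Hz to 3.4 kHz as the passband, an assumed approximation of the voice band of traditional telephone lines. A production telephone effect would likely use steeper filters.

```python
import math

SAMPLE_RATE = 44_100  # samples per second (assumed)


def one_pole_low_pass(samples: list[float], cutoff_hz: float) -> list[float]:
    """Smooth the signal; frequencies well above cutoff_hz are attenuated."""
    dt = 1.0 / SAMPLE_RATE
    alpha = dt / (1.0 / (2 * math.pi * cutoff_hz) + dt)
    out, y = [], 0.0
    for x in samples:
        y += alpha * (x - y)
        out.append(y)
    return out


def one_pole_high_pass(samples: list[float], cutoff_hz: float) -> list[float]:
    """Input minus its low-passed version; frequencies well below cutoff_hz fade."""
    return [x - y for x, y in zip(samples, one_pole_low_pass(samples, cutoff_hz))]


def telephone_band_pass(samples: list[float]) -> list[float]:
    """High-pass at ~300 Hz, then low-pass at ~3.4 kHz (assumed phone voice band)."""
    return one_pole_low_pass(one_pole_high_pass(samples, 300.0), 3_400.0)


def tone_gain(freq: float) -> float:
    """Peak output level for a unit sine at `freq` after the filter settles."""
    x = [math.sin(2 * math.pi * freq * i / SAMPLE_RATE) for i in range(SAMPLE_RATE // 2)]
    y = telephone_band_pass(x)
    return max(abs(v) for v in y[len(y) // 2:])


for f in (60.0, 1_000.0, 12_000.0):
    print(f"{f:>7.0f} Hz -> gain {tone_gain(f):.2f}")
```

A 1 kHz tone, in the middle of the voice range, passes at close to full strength, while a low 60 Hz hum and a high 12 kHz tone are both cut substantially; that thin, boxy result is the telephone sound.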

Figure 7: Combining Filters, created in MATLAB

Filters can be applied by adjusting the knobs in the “FILTER” section of a synthesizer. The most important adjustable parameter is the cutoff frequency, which dictates the pitch at which the sound is cut off. For a low-pass filter, increasing it allows slightly higher pitches to be heard. The other knobs are used for something called filter enveloping, which shapes the cutoff frequency over time [12]. Most synthesizers use an ADSR (attack, decay, sustain, release) envelope as the shape. As seen in Figure 8, it first raises the cutoff frequency (attack), lowers it to a steady level (decay), holds it there while the key is held down (sustain), and, once the key is released, returns it to its starting point (release). The lengths of the attack, decay, and release phases, along with the sustain level, can be adjusted with the knobs. Generally, this envelope makes a note abruptly brighter and then gradually duller, producing a sound like a short cry or a “waahh”. A longer attack can give the note a more sweeping sound, and a short decay may make it sound harsher. Further playing with these parameters can generate a variety of funky and interesting tones, which is why filter envelopes are popular in electronic music.
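
The ADSR shape can be written as a small Python function that returns a level between 0 and 1 at any moment, which the synthesizer then maps onto the cutoff frequency. This sketch assumes straight-line segments (many synthesizers use curved, exponential segments), assumes all times are positive, and uses hypothetical base-cutoff and depth settings.

```python
def held_level(t: float, attack: float, decay: float, sustain: float) -> float:
    """Envelope level while the key is held: rise, fall to sustain, then hold."""
    if t < attack:
        return t / attack  # attack: rise linearly from 0 to 1
    if t < attack + decay:
        return 1.0 - (1.0 - sustain) * (t - attack) / decay  # decay to sustain
    return sustain  # sustain: a level, not a duration


def adsr(t: float, note_off: float, attack: float, decay: float,
         sustain: float, release: float) -> float:
    """ADSR level (0..1) at time t seconds; the key is released at `note_off`."""
    if t < 0:
        return 0.0
    if t < note_off:
        return held_level(t, attack, decay, sustain)
    start = held_level(note_off, attack, decay, sustain)
    return max(0.0, start * (1.0 - (t - note_off) / release))  # release to 0


BASE_CUTOFF_HZ = 300.0  # cutoff when the envelope is at 0 (assumed setting)
ENV_DEPTH_HZ = 3_000.0  # how far the envelope pushes the cutoff up (assumed)

for t in (0.0, 0.01, 0.05, 0.3, 0.5, 0.6, 0.8):
    level = adsr(t, note_off=0.5, attack=0.01, decay=0.2, sustain=0.4, release=0.2)
    print(f"t={t:.2f}s  cutoff={BASE_CUTOFF_HZ + ENV_DEPTH_HZ * level:.0f} Hz")
```

With these settings the cutoff jumps from 300 Hz to 3,300 Hz in 10 ms, settles at 1,500 Hz while the key is held, and falls back to 300 Hz within 0.2 s of releasing the key. That quick opening followed by a slow closing produces the "waahh".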

Figure 8: ADSR Envelope, modified from [13]

In addition to shaping the filter cutoff with an envelope, we can shape the amplitude. Right below the “FILTER” section is the “AMPLITUDE” section of the synthesizer. Here, instead of the cutoff frequency being sculpted by an envelope, the volume of the note is varied. With the ADSR envelope, that sounds like an abrupt increase in loudness followed by a more gradual decrease. Increasing the attack and release times draws the sound out, giving it an almost eerie quality. Meanwhile, when both attack and release are short, the sound becomes a very electronic-sounding short bop. As with filter enveloping, there is an almost infinite number of colors and flavors that can be produced by adjusting the envelope parameters.
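
For an amplitude envelope, the envelope level simply multiplies each sample of the oscillator's output. The Python sketch below assumes linear ADSR segments and uses hypothetical settings to contrast a short "bop" with a slow, drawn-out swell.

```python
import math

SAMPLE_RATE = 44_100  # samples per second (assumed)


def adsr_gains(held_s: float, attack_s: float, decay_s: float,
               sustain: float, release_s: float) -> list[float]:
    """Per-sample gain (0..1): linear attack, decay to sustain, hold, then release."""
    gains = []
    released_from = 0.0  # level at the moment the key is let go
    for n in range(int((held_s + release_s) * SAMPLE_RATE)):
        t = n / SAMPLE_RATE
        if t < held_s:  # key held down
            if t < attack_s:
                level = t / attack_s
            elif t < attack_s + decay_s:
                level = 1.0 - (1.0 - sustain) * (t - attack_s) / decay_s
            else:
                level = sustain
            released_from = level
        else:  # key released: fade from the last held level to silence
            level = released_from * max(0.0, 1.0 - (t - held_s) / release_s)
        gains.append(level)
    return gains


def play_note(freq: float, **envelope: float) -> list[float]:
    """A sine tone whose volume follows the ADSR envelope."""
    gains = adsr_gains(**envelope)
    return [g * math.sin(2 * math.pi * freq * n / SAMPLE_RATE)
            for n, g in enumerate(gains)]


# Hypothetical settings: short attack and release vs. long attack and release.
bop = play_note(440.0, held_s=0.1, attack_s=0.005, decay_s=0.05,
                sustain=0.3, release_s=0.02)
swell = play_note(440.0, held_s=1.0, attack_s=0.8, decay_s=0.1,
                  sustain=0.8, release_s=1.5)
```

The bop reaches nearly full volume within 5 ms and is silent about 0.12 s later, while the swell is still quiet after 0.1 s and lingers for 1.5 s after the key is released.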

Lastly, one commonly added effect on the synthesizer is reverb. In a big room, such as a lecture hall or an orchestral concert hall, one may hear many echoes and reverberations of sound. This is because after the sound is produced, some sound waves travel directly to your ears (making up the first and loudest version of that sound), while others first bounce off the walls before reaching your ears (making up later, quieter echoes). A synthesizer can reproduce this effect by outputting multiple delayed copies of the note being played that decay, or decrease in volume, over time. Besides giving a sense of being in a big room, adding reverb can also provide warmth and layering in music.
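
The "delayed, decaying copies" idea can be sketched in Python as a multi-tap echo: each copy arrives a fixed delay after the previous one at a fraction of its volume. This is a deliberately simplified stand-in; practical reverbs use many more, denser reflections (for example networks of comb and all-pass filters), and at a real sample rate the delay would be hundreds or thousands of samples. The function name and settings are hypothetical.

```python
def simple_reverb(dry: list[float], delay_samples: int, decay: float,
                  copies: int) -> list[float]:
    """Add `copies` delayed echoes, each `decay` times quieter than the one before."""
    out = list(dry) + [0.0] * (delay_samples * copies)  # room for the echo tail
    for k in range(1, copies + 1):
        gain = decay ** k
        offset = k * delay_samples
        for i, x in enumerate(dry):
            out[i + offset] += gain * x
    return out


click = [1.0] + [0.0] * 9  # a single short "tap"
wet = simple_reverb(click, delay_samples=4, decay=0.5, copies=3)
print([round(v, 3) for v in wet])
```

The single click turns into a series of echoes at levels 1.0, 0.5, 0.25, and 0.125, four samples apart, mimicking sound that reaches the ear directly and then again after bouncing off walls.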

Synthesizers in real life:

Now that we know how synthesizers work and generally how to use them, let’s take a look at two masters of the craft, Jacob Collier and Aphex Twin. Jacob Collier is a British artist, and one of the many things he’s known for is his use of a custom synthesizer, designed by an MIT engineer, to sing as a whole choir at once [14]. He often performs it on “Can’t Help Falling in Love”: as he sings the first note of the line “wise men say…”, multiple copies of his voice fade in, forming a blissful harmony around the main melody. How does this magic happen? His keyboard synthesizer takes input from both his microphone and the keys. When Jacob Collier sings and plays the keys, the synthesizer makes multiple copies of his voice (from the microphone) and shifts their frequency, or pitch, to match the notes played on the keyboard. Thus, with just one person, one instrument, and some brilliant audio engineering, he is able to create the beautiful sound of a crowd.
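
The sources do not document how Collier’s custom instrument works internally, so the following is only a simplified Python sketch of the idea: compute the pitch ratio between the sung note and each pressed key, then shift a copy of the voice by that ratio. It uses the crudest method, resampling (reading the recording faster), which also shortens the sound; real harmonizers use more elaborate techniques that preserve timing. A pure 440 Hz tone stands in for a sung A4, and all names are hypothetical.

```python
import math

SAMPLE_RATE = 44_100  # samples per second (assumed)


def semitone_ratio(from_note: int, to_note: int) -> float:
    """Frequency ratio between two keys in equal temperament (12 semitones = 2x)."""
    return 2 ** ((to_note - from_note) / 12)


def resample_pitch_shift(voice: list[float], ratio: float) -> list[float]:
    """Read through the recording `ratio` times faster, interpolating between samples.

    This raises the pitch by `ratio` but also shortens the sound.
    """
    out = []
    pos = 0.0
    while pos < len(voice) - 1:
        i = int(pos)
        frac = pos - i
        out.append(voice[i] * (1 - frac) + voice[i + 1] * frac)  # linear interpolation
        pos += ratio
    return out


# Stand-in for one second of a sung A4: a pure 440 Hz tone.
sung = [math.sin(2 * math.pi * 440.0 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)]
sung_note = 69               # A4 as a MIDI note number
keys_pressed = [69, 73, 76]  # A4, C#5, E5: an A major chord
voices = [resample_pitch_shift(sung, semitone_ratio(sung_note, k)) for k in keys_pressed]

# Mix the copies (trimmed to the shortest) into one "choir" signal.
length = min(len(v) for v in voices)
choir = [sum(v[i] for v in voices) / len(voices) for i in range(length)]
```

The copy for E5 comes out at about 659 Hz, seven semitones above the sung note, and mixing the three copies yields a chord built from a single voice.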

Figure 9: Jacob Collier at the RISE music series in 2017 [14]

The second is Aphex Twin, or Richard James, who is very popular in the electronic music scene. James started fiddling with analog synthesizers from a very young age, taking them apart and adding his own circuits and modifications [15]. Though he is very secretive about his devices, he’s been known to make his own samplers, which are devices used to record and digitally play back sound [16]. This experience has shaped his music career, as pretty much all of his songs are produced using customized equipment with unique flavors. Using his contraptions, James forges specific effects that create very ambient, calm sounds, a style he has released multiple albums of and is very well known for. At the opposite end of the emotional spectrum, he has many upbeat, rhythmic works of experimental techno. Even with store-bought synthesizers, he learned to exploit certain quirks in their designs. For instance, he uses the Casio FZ-1 to create glitchy sound effects by corrupting parts of its memory through very quickly turning it off and on [16]. Thus, these two artists exemplify not just the full capabilities of a synthesizer, but also the almost limitless creativity one can express through it.

Conclusion:

The next time a song plays on the radio, listen for how certain sounds get mixed together, emphasized, resonated, and cut off, and take the time to appreciate the science and engineering that probably went into the song’s production. If you feel extra inspired and are curious about getting started in music production, synthesizers are sold online for as little as $100. Better yet, digital audio workstations (DAWs) are software programs that mimic the function of synthesizers and include other music production tools. Many of these, including GarageBand for Apple users and Cakewalk for Windows users, are even free to download.

All that said, technology weaves beautifully into sound and music production, though it often goes unnoticed. This article serves as an example of one of the many ways that engineering enhances our lives and the world.

Further links for reading:

– https://link.springer.com/book/10.1007/978-3-031-14228-4

– https://www.izotope.com/en/learn/the-beginners-guide-to-synths-for-music-production.html

– https://www.britannica.com/topic/music-recording/Birth-of-a-mass-medium

Further links for multimedia applications:

– https://www.youtube.com/watch?v=hiCICEx3Egk

– https://www.youtube.com/watch?v=pAZo7x83it4&pp=ygUVYXBoZXggdHdpbiBwcm9kdWN0aW9u

– https://youtu.be/bW1hZ1ix-70

– https://www.youtube.com/watch?v=oXFiXWbj7Jc

Author contact:

Sonia Zhang | skzhang@usc.edu

Bibliography

[1] “The Science of Sound,” NASA Quesst. https://www3.nasa.gov/specials/Quesst/science-of-sound.html

[2] “How the Ear Works,” www.hopkinsmedicine.org. https://www.hopkinsmedicine.org/health/conditions-and-diseases/how-the-ear-works#:~:text=The%20cochlea%20is%20filled%20with

[3] “Sound – wave interference,” Science Learning Hub. https://www.sciencelearn.org.nz/resources/2816-sound-wave-interference (accessed Feb. 21, 2024).

[4] D. Marshall, “Basic Digital Audio Signal Processing,” Cardiff University. https://users.cs.cf.ac.uk/Dave.Marshall/CM0268/PDF/07_CM0268_DSP.pdf

[5] “Fast Fourier Transformation FFT – Basics,” www.nti-audio.com. https://www.nti-audio.com/en/support/know-how/fast-fourier-transform-fft#:~:text=The%20%22Fast%20Fourier%20Transform%22%20(

[6] “12.3 Sound Quality,” Eiu.edu, 2024. https://ux1.eiu.edu/~cfadd/3050/Adventures/chapter_12/ch12_3.htm (accessed Jan. 24, 2024).

[8] Wesley, “Synthesizer Oscillators: Everything You Need To Know,” Controlfader. https://www.controlfader.com/blog/synthesizer-oscillators-explained (accessed Feb. 21, 2024).

[9] T. Frampton, “Analog vs. Digital: Exploring the pros and cons of both worlds in music production,” Mastering The Mix. https://www.masteringthemix.com/blogs/learn/analog-vs-digital-exploring-the-pros-and-cons-of-both-worlds-in-music-production (accessed Feb. 21, 2024).

[10] Anderson, “Audio Filters,” Ohio State University. https://www2.ece.ohio-state.edu/~anderson/Outreachfiles/AudioEqualizerPresentation.pdf

[11] “How to Create a Telephone Vocal Effect // Audiotent Music Production Tips,” Audiotent, Jan. 26, 2017. https://www.audiotent.com/production-tips/how-to-create-telephone-vocal-effect/

[12] “An Introduction to Envelope Filters,” Hochstrasser Electronics. https://www.hochstrasserelectronics.com/news/introductiontoenvelopefilters

[13] “Envelope,” Making Music with Computers. https://jythonmusic.me/envelope/ (accessed Feb. 21, 2024).

[14] M. Edwards, “Show review: Jacob Collier,” The BIRN. https://thebirn.com/show-review-jacob-collier/ (accessed Feb. 21, 2024).

[15] F. Music, “Classic interview: Aphex Twin,” MusicRadar. https://www.musicradar.com/news/aphex-twin-interview-selected-ambient-works (accessed Feb. 21, 2024).

[16] S. Wilson, “7 pieces of gear that helped define Aphex Twin’s Pioneering Sound,” Fact Magazine. https://www.factmag.com/2017/04/14/aphex-twin-gear-synths-samplers-drum-machines/ (accessed May 1, 2024).