Lawrence was a junior at USC studying to be an Electrical Engineer. He is also a former chess enthusiast.

Computer chess software available today is a staple of modern computing distractions. Few may recognize the rich history behind the development of that technology. In a revolutionary chess tournament in 1997, the chess world champion was defeated by an IBM supercomputer called Deep Blue, shocking the media and the general public. To the artificial intelligence community, however, this was a long time coming. After almost fifty years of developing adequate computing technology and formulating sufficient chess playing strategies, computer scientists were finally able to solve the “chess problem” and defeat the best human player. The specific search algorithm used was revolutionary for its time, and Deep Blue’s victory served as a defining moment in the history of the field of artificial intelligence. However, some doubts have been raised regarding computer chess’s relevance to the field of artificial intelligence. Strong arguments have been made for both sides by prominent experts in the fields, and the fate of future computer chess studies hangs in the balance.

Introduction

After 50 years of research and development in conjunction with artificial intelligence, the study of computer chess culminated during two matches between Deep Blue, a chess supercomputer funded by IBM, and the Chess World Champion Garry Kasparov. The 1996 and 1997 matches were media sensations, heavily promoted as a battle of wits between man and machine. When the machine finally prevailed in 1997, the artificial intelligence field and scientific community marveled at their own triumph. However, recent experts have disagreed with the predictions of their predecessors, which suggested that such an event would herald in an era of intelligent machines, pointing to several issues of comparing chess to intellect. While continued research on artificial intelligence is necessary before it becomes an everyday reality, Deep Blue’s victory over Garry Kasparov in 1997 still remains an impressive engineering feat and a foundation for current achievements in the field.

History: From Turing’s Paper Machine to Deep Blue

Computer chess has been associated with the field of artificial intelligence ever since the emergence of computer science in the mid-20th century. Many of the biggest names in both fields, such as Alan Turing, a mathematician whom many credit with founding modern computer science; John McCarthy, who helped coin the term “artificial intelligence”; and Claude Shannon, a pioneer in Boolean logic, supported the use of chess as proper starting point for the development of intellect [1]. Undeterred by the lack of technology and establishment of a precedence at the time, Turing sought to support his theory and effectively wrote an algorithm for the first chess program. Unfortunately, the program, which was put on paper and implemented by Turing himself, was perhaps better visualized than implemented, as a colleague soon easily defeated the program. Despite this unceremonious introduction, chess programs would soon flourish, gaining the recognition as a standard for research for many computer scientists [2].

Many early programmers devoted a particularly large amount of time and effort to chess because they perceived the game as a unique challenge that would provide a breakthrough for artificial intelligence. These researchers saw in the structure of chess a simplified model for large-scale problem solving. Players in chess only have the primary task of checkmating the opponent’s king. In addition, several other constraints inherent to the game, such as the relatively small board and limited amount of pieces with distinct movement patterns, helped computer scientists define specific parameters within their programs. In terms of human comparison, the already existing hierarchy of rated chess players throughout the world gave engineers a scale with which to easily and accurately measure the success of their machine.

After several decades of research yielded little progress, it is surprising that Deep Blue, which would arguably become the ultimate champion of computer chess, began with a chip that was built in six months by a Carnegie Mellon graduate student and an inexperienced chess player. In 1985, while doing secondary research for another professor, Feng-Hsiung Hsu discovered a method that could reduce the work done by the chess machine’s move generator by simplifying the program’s silicon chip design from a maximum of sixty-four distinct chips used by contemporary machines down to just one. While looking over the design of sixty-four chips, he discovered that the extensive amount of wiring could easily be reduced, decreasing the delay of the design and saving time and precious space on the chip [3]. After further studying the design sketches, Hsu discovered that another large portion of the chip was redundant and could therefore be excised completely. Because he was only removing useless components, Hsu’s new, reduced design did not lose the speed expected with his reduction in size. In fact, Hsu’s proposal actually suggested a theoretical gain of speed by a factor of twenty due to the reduced complexity of his new chip. The single chip design that Hsu developed would evolve over time to form the backbone of Deep Blue’s hardware (see Fig. 1).

Compiling Chess Strategy

The main focus of artificial intelligence research in chess is the use of strategy that defines the game. Like the difference in difficulty between reading and appreciating good literature, the greatest obstacle for artificial intelligence has never been making the observations for the current situation, but understanding and analyzing the implications that this information brings. Machines are still hamstrung today by the lack of intuition that humans possess to make assumptions and connections without much cognitive effort. Chess strategy offers a similar problem with analyzing data, except within much smaller confines. In chess, each move must be analyzed and made beyond the current state of the game in mind, because a slight improvement in the short term may not translate into a victory in the long run. The challenge of processing information with greater depth is fundamental to the study of artificial intelligence, and chess played an important introductory role in implementing solutions to this problem [4].

Because this challenge held such a significant role in the artificial intelligence community, researchers in the field were divided on finding the appropriate approach. The debate began as early as the 1950s, when Claude Shannon, the Boolean logic pioneer, introduced two different search algorithms to implement in computer chess: selective search and brute force search. The term ” search algorithm” was used to describe program that attempted to find the best possible play per move in chess based on the current situation and “numerical weights,” which are numbers attributed for position and pieces that can be used to estimate the chances of winning based on the features of the position. The result of the debate among researchers as to which algorithm was superior would parlay into future artificial intelligence research.

Some experts, especially the early researchers, argued that the most logical way to simulate intelligence was to program machines to literally think and evaluate like humans do. This form of analysis was introduced to computer chess theory as selective search, which sacrificed a bit of processing speed to eliminate the most obvious poor move choices, like humans do naturally. However, the problem with this method lies in the fact that computers are built to run differently from humans. As stated earlier, machines have difficulty making even the easiest of connections. Their programs are driven line by line, or run by a limited source code for specific situations. Because chess, a small scale representation of reality, has so many distinct game possibilities, recognizing all of these distinct situations is still impossible for a machine today, and impractical for real life applications.

Other experts, particularly the later researchers and the developers of Deep Blue, believed that the field should abandon their attempts to translate common sense and instead take advantage of the computer’s immense processing speed and memory space to perform the same, relatively simple calculations repeatedly in order to simulate intelligence. This computer chess theory was embodied by the brute force algorithm, which, like the name suggests, quickly and thoroughly pursues every possible move combination to find the best one. Unlike selective search, brute force is only limited technologically, or by the hardware capabilities required to sift through the possible moves, because its code, or source program, does not have to acknowledge broad variations. However, as the computer is allowed to search deeper into future possible moves to determine the correct move, the technical requirements increase exponentially, limiting how far or fast a machine can search.

In actual search algorithms implemented in chess machines, a program rarely consists solely of brute force or solely selective search. A basic example of this can be seen in alpha-beta pruning, a selective search-like optimization that vastly reduced the time required by the basic brute force search algorithm without compromising the final result. Alpha-beta pruning eliminated possible future move sets from analysis if they were proven to be either equal or worse than another move already searched. The producers of Deep Blue took this algorithm one step further and employed an innovative theory known as “singular extensions” [5].

The singular extensions theory used the standard alpha-beta optimization, but throughout the normal pruning process, it also searched for an obvious choice. If one distinct move path’s weight is better than all of the other choices by an amount set by the programmer, then this move path is searched and analyzed beyond the standard limit, or horizon. The results of this change were clear: during one simulation, Chiptest, an ancestor of Deep Blue that first implemented both singular extensions and Hsu’s initial chip design, cut the required search times and depth by extraordinary levels, from a nineteen-move-deep search to eight and from thousands of hours to sixty-five seconds. This increase of efficiency convinced many artificial intelligence scholars to follow singular extensions and its more brute force-heavy searching methodology, especially because the algorithm was highly optimized by a slight use of selective search.

Adaptive Issues

With its powerful hardware system and innovative evaluation function, Deep Blue I arrived at the six game match in 1996 to face Garry Kasparov, the world champion of chess at the time (see Fig. 2). To the surprise of many, Deep Blue began that match with an unequivocal win, the first of a machine over a world champion. Even Kasparov expressed his amazement at the incredible technology of the machine to the media, commenting that, at times, its processing power appeared divine. Despite that historic first game, however, the world champion refused to be intimidated by the machine, and Kasparov demonstrated why he was considered the premier chess player of the world. Although clearly shaken by the early loss, Kasparov rebounded capably, eventually winning the entire match by a score of four games to two [6].

After the first four games that left the match even at two apiece, Kasparov discovered and exploited a weakness that was inherent to the first Deep Blue and most chess machines in general: the inability to adapt. During the first four games of the match, the world champion played the style he had perfected against human opponents in hundreds of games, employing his well-known game openings to begin each game. In response, the machine also played well, because in addition to having studied most of Kasparov’s games, it had also been designed and programmed specifically to face his style of play. Beginning with the fifth game, however, Kasparov changed his strategy and played an opening that he had used sparingly in the past. This critical decision left Deep Blue without the majority of its notes on Kasparov to aid its decision-making process. For the rest of the match, the machine had to rely on its collected history of old games and its search algorithm, which had slight malfunctions of its own. Although both players were treading in unfamiliar territory and the machine still put forth a valiant effort throughout the final two games, Kasparov proceeded to take down Deep Blue without much trouble [7].

The problem that Deep Blue faced while trying to adjust to Kasparov’s changing play is common in the artificial intelligence field. As Dr. William Clocksin addresses in his 2003 paper titled, “Artificial Intelligence and the Future,” much of the intelligent technology, even today, is incapable of making the simplest of adjustments on its own. His arguments produce a valid point: although machines have ever-increasing processing and memory capabilities, the technology behind them is still exclusively designed to perform a certain task in order to maximize efficiency and reduce costs. Therefore, most of the adjustments and tuning that occurs within a machine depend on human input. This chain of dependence can be seen clearly during the reconstruction phase of Deep Blue between the 1996 and 1997 matches, where the programmers worked hard to make the chess monolith easier to adjust between games, instead of more adaptive on its own [8].

Accepting Victory

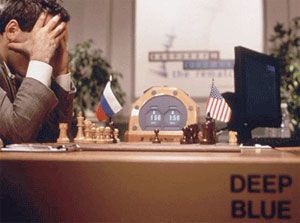

After many updates and a boost to its chess knowledge to combat the strategies used in the previous match, a rebuilt Deep Blue emerged victorious over Garry Kasparov in their 1997 rematch. However, as noted in many different analyses of the game, while the world champion may not have been outwitted by the machine, the struggles he had adjusting to his computer opponent were his eventual downfall (see Fig. 3).

For example, Kasparov’s intimidating demeanor had no effect on the machine because Deep Blue only recognized and analyzed the situation on the board. In addition to this lack of psychological pressure placed on the computer, the superficial threats on the board also had little effect on Deep Blue’s measurement of the situation. This grew evident when the supercomputer was able to find a draw during a key game just as Kasparov was able to promote his pawn.

On the other side, Kasparov displayed some reverence to the computer, as seen in the second game where Deep Blue had won. Further analysis of the situation displayed that a complex progression of moves would have allowed Kasparov to force a draw, but he instead chose to resign.

Opposing Analysis

Most of the artificial intelligence community believed that Deep Blue’s victory was somewhat of a step in the right direction. In a review for a book written about the match, John McCarthy, the previously mentioned founder and professor of artificial intelligence, argued for a constriction of the technological components behind computer chess to focus on the methodology theories instead of technological capabilities. A fellow professor at Stanford, Edward Feigenbaum, expressed his surprise that Deep Blue had successfully broken the trend of the field, which was then shifting from the use of brute force to selective search right before Hsu developed his chip. Both McCarthy and Dr. Feigenbaum implied correctly that although there was impressive engineering and ingenuity behind Deep Blue, the foundation of the chess machine’s immense skill lay in its deep memory and quick processing power. Both professors also recommended that further resources be invested into studying computer chess, but to have the field’s focus shift to finding alternative solutions to the pure calculatory method used in Deep Blue.

Despite concerns about technology’s role in artificial intelligence, many other researchers see potential with the increasing rate of technology. Many computer scientists, including Hsu and some of the Deep Blue team, had trouble recognizing the true applications and capabilities of a common sense-driven machine. In fact, the true breakthroughs of in the development of Deep Blue came when the team turned away from the selective search approach that was popular at the time. Many researchers believe that currently the application of brute force search, even when optimized with singular extensions, is too grand of a scale for real life. However, others believe that brute force systems may be realistic in the future. Their continual faith in brute force systems lies in Moore’s Law, which states that the amount of transistors that engineers can place on silicon chips will double every one and a half years. Silicon chip technology has followed this trend line, beginning with chips with transistors numbering in the hundreds in the 1970s to a recent Intel chip that contains two billion transistors, with individual transistors just forty-five nanometers wide [9]. If technology continues to improve at this rate, which is still a debatable question since current standards appear to challenge physical limits, then a brute force-driven artificial intelligence system could be a decade or two away.

Although the Deep Blue achievement certainly broadened a lot of researchers’ optimism, several members of the field still question the connection between artificial intelligence and chess. Clocksin expressed his doubts in his article, commenting that all of the effort placed into chess has only taught researchers about the limits of the comparison. His opinion that game logic has little to do with artificial intelligence is further fueled by Hsu’s initial opinion that his project had minimal connections to artificial intelligence. Clearly, some computer scientists feel that computer chess research has been fully developed, and that the field of artificial intelligence should move beyond it. Nevertheless, even the most stubborn artificial intelligence expert recognizes Deep Blue as a monumental achievement for the field, if only as a starting point.

Epilogue

In terms of what it set out to accomplish, Deep Blue is a success. The project began as a dream in the 1980s to defeat the World Champion of Chess, which was then realized in 1997. IBM quickly refused Garry Kasparov’s demand for a rematch, and since then, Kasparov’s successor, Vladimir Krammik, has also lost to a chess machine. This 2006 defeat declared a de facto end to world champion-machine matches according to Monty Newborn, a professor at the McGill University in Montreal. Deep Blue itself was dismantled and kept only as a trophy of its tremendous achievement. The study of computer chess and the success of Deep Blue serve as defining moments in the history in artificial intelligence, and the implementation of smart search chess algorithms demonstrates true ingenuity in computer science.

References

-

- [1] “Claude Shannon.” Encyclopædia Britannica. [On-line]. Available: http://www.britannica.com/EBchecked/topic/538577/Claude-Shannon [Mar. 01, 2009].

- [2] M. Krol. (1999, Mar.). “Have we witnessed a real-life Turing Test?” Computer. [On-line]. pp. 27-30. Available: http://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=00751325 [Feb. 23, 2009].

- [3] F. H. Hsu. Behind Deep Blue: Building the Computer that Defeated the World Champion. Princeton, New Jersey: Princeton University Press, 2002.

- [4] F. Friedel. (2002, June). “A Short History on Computer Chess.” Daily Chess Columns. ChessBase. [On-line]. Available: http://www.chessbase.com/columns/column.asp?pid=102 [Feb. 23, 2009].

- [5] T. Anantharaman et al. “Singular extensions:Adding selectivity to brute-force searching.” Artificial Intelligence,vol. 43, pp. 99-109, 1990.

- [6] J. McCarthy. (1997, June). “Kasparov Versus Deep Blue: Computer Chess Comes of Age.” Science. [On-line]. Vol. 276.n5318, pp. 1518. Expanded Academic ASAP. Gale. University of Southern California. Available: http://find.galegroup.com/itx/start.do?prodId=EAIM [Feb. 20, 2009].

- [7] D. L. McClain. (2006, Dec.). “Once Again, Machine Beats Human Champion at Chess,” New York Times. [On-line]. Available: http://www.nytimes.com/2006/12/05/crosswords/chess/05cnd-chess.html?_r=1 [Feb. 23, 2009].

- [8] B. Pandolfini. “Kasparov and Deep Blue: The historic chess match between man and machine.” New York: Simon & Schuster, 1997.

- [9] J. Fildes. (2008, Feb.). “Chips pass two billion milestone.” BBC News. [On-line]. Available: http://news.bbc.co.uk/1/hi/technology/7223145.stm [May 4, 2009].

- [10] W. F. Clocksin. “Artificial Intelligence and the Future.” Philosophical Transactions: Mathematical, Physical and Engineering Sciences, vol. 361-180915, pp. 1721-1748. Available: http://www.jstor.org/stable/3559219, 2003.[Feb. 23, 2009].

- [11] E. Feigenbaum. “The History of Computer Chess: An AI Perspective.” Computer History Museum. [On-line]. Available: http://www.computerhistory.org/press/history-of-computer-chess-an-ai-perspective.html [Mar. 01, 2009].

- [12] J. McCarthy and R. J. Miller. “A Distinguished Lecture on Computer Science.” Internet: http://www.cs.toronto.edu/colloq/2000/mccarthy.html, Apr. 30, 2001 [Mar. 2, 2009].