The brain is our body’s information processing center. It controls everything that we do, making us intelligent, conscious, and alive. The brain is made up of over 100 billion neurons. The neuron, or nerve cell, is a type of cell responsible for the body’s gathering, processing, and transmission of information. Much like a unit of computer memory, a neuron has inputs, outputs, and transmission lines. Advanced interdisciplinary engineers, proficient in both biotechnology and computer engineering, are modeling these neurons and the brain using computers. Their hope is to analyze the brain in enough detail that they can reverse-engineer it and recreate it in silicon. By creating neurons from non-biological components, engineers may be able to enhance both machine intelligence and human capability.

Introduction

The human brain is made up of over 100 billion neurons, and there may be as many again in the rest of the nervous system [1]. The importance of these cells for our species is not in doubt, yet we know very little about how they function en masse compared to other kinds of cells in the body. What follows is a simple biological introduction to the nerve cell, followed by an explanation of how advances in engineering may soon integrate the disparate fields of computer science, electrical engineering, and biology, in a process called neural computing, to enable a higher level of brain function.

What is a neuron?

Neurons are responsible for the body’s gathering, processing, and transmission of information (see Fig. 1). All the neurons in the body make up the nervous system. To understand the neuron better, it may help to study the basic function of the brain, which is a part of the central nervous system. As explained by Carlson in Foundations of Physiological Psychology, an entry-level neuroscience text, “the brain is the organ that moves the muscles. That may sound simplistic, but ultimately, movement – or more accurately, behavior – is the primary function of the nervous system” [1]. This is called a functional analysis of the brain: the nervous system takes in sensory information about its surroundings from the peripheral nervous system, processes the information, and then transmits information to muscles, coordinating an output based on the inputs. All body functions, from breathing to speech to digestion, can be analyzed in this way.

In a similar fashion, a single nerve cell can be regarded as an input-output system. It has four functional parts.

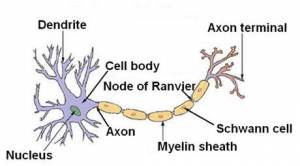

Dendrites are tree-like structures that accept input from other neurons. The input is then analyzed in the cell body, or soma. Outputs are sent down the axon, a long stalk for transmitting the information over distance. Finally, the information leaves the neuron through synapses, which attach to other nerve cells (see Fig. 2 for a depiction of a typical neuron). In the central nervous system, an average neuron has tens of thousands of synaptic connections on its dendrites. Although the precise organization of neurons in the brain is not completely understood, it is known that these large networks of connections are what give the brain its power and versatility.

Analog vs. digital

At the risk of comparing the brain to a stereo system, part of neuronal function is an analog-to-digital conversion. The inputs received by the neuron (via the dendrites) are chemical in nature. The amount of chemicals received by a given neuron is an analog quantity: it varies continuously rather than in discrete steps. Some of the chemicals are excitatory, and their presence makes the neuron more likely to act. Others are inhibitory, and their presence quiets the neuron. The output of a neuron, on the other hand, is binary: either the neuron fires with a burst of electricity, or it does not. There is no in-between. The single neuron is therefore performing a calculation in its cell body that converts a large amount of analog data (recall that there can be tens of thousands of connections) into a single digital signal. Perhaps the audio processor analogy is not so far-fetched; the neuronal process sounds strikingly similar to a conversion from an analog medium such as a vinyl record to a digital one such as a compact disc.
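The conversion described above can be sketched in a few lines of Python. This is only a toy model under stated assumptions, not a biological simulation: the function name `neuron_fires`, the input strengths, and the firing threshold of 1.0 are all invented for illustration. Excitatory inputs push the total up, inhibitory inputs pull it down, and the cell body reduces the continuous total to a single yes-or-no output.

```python
def neuron_fires(excitatory, inhibitory, threshold=1.0):
    """Toy model of one neuron: many analog inputs in, one binary output out.

    excitatory and inhibitory are lists of non-negative signal strengths.
    The threshold value is an illustrative assumption, not a measured quantity.
    """
    # Excitatory signals raise the net input; inhibitory signals lower it.
    net_input = sum(excitatory) - sum(inhibitory)
    # The output is all-or-nothing: the neuron either fires or stays silent.
    return net_input >= threshold


excitatory_inputs = [0.4, 0.35, 0.5]

# Weak inhibition: net input is about 1.15, so the neuron fires.
print(neuron_fires(excitatory_inputs, inhibitory=[0.1]))

# Stronger inhibition: net input is about 0.95, so the neuron stays silent.
print(neuron_fires(excitatory_inputs, inhibitory=[0.3]))
```

Note that a small change in one continuous input is enough to flip the binary output, which is the sense in which the cell body acts as an analog-to-digital converter.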

Enter computation

There have been many analogies proposed for the brain over the course of human history; the best analogy at any given time is almost always the fashionable technology of that time. René Descartes was one of the first to compare the brain to a machine. He used the hydraulically operated statues in the royal gardens of Saint-Germain, France, as his model [2]. Much later, Thorndike and Woodworth would propose that the mind is “a kind of [telephone] switch board with innumerable wires connecting discrete points” [3]. Today’s fashionable analogy, put forth in 1958 by John von Neumann, is that the brain could be a general-purpose computer, similar to the computers we use every day. More recently, it has become clear that, in contrast to today’s computers, the brain does not always work algorithmically; that is, it does not always follow a well-defined step-by-step process to solve problems.

Advanced interdisciplinary engineers, proficient in both biotechnology and computer engineering, are modeling the brain using computers. Their hope is to analyze the brain in enough detail that they can reverse-engineer it and recreate it in silicon. The premise is this: the organization and structure of the brain are what make it so proficient at intelligent tasks. The biological makeup of the neuron, by contrast, is actually a hindrance to advancing intelligence, since modern electronic components can operate more than a million times faster. So, if we can create (or simulate) a neuron in silicon, we could try to connect billions of these virtual neurons together to create machine-based neural networks that are much faster than their biological analogues [1]. By creating neurons from non-biological components, engineers may be able to enhance both machine intelligence and human capability.
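To see why connecting simulated neurons matters, consider a minimal sketch in Python. The names `threshold_unit` and `xor_network`, and the hand-picked weights and thresholds, are assumptions made for this illustration; they are not taken from any particular research project. Each unit fires when its weighted input reaches a threshold. Three such units wired together compute "one input or the other, but not both", a function that no single unit of this kind can compute on its own.

```python
def threshold_unit(inputs, weights, threshold):
    """A simulated neuron: outputs 1 if the weighted input sum reaches threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def xor_network(a, b):
    """Three threshold units wired together (weights chosen by hand)."""
    # First layer: one unit fires if either input is on, another only if both are.
    either = threshold_unit([a, b], [1, 1], threshold=1)
    both = threshold_unit([a, b], [1, 1], threshold=2)
    # Output unit: excited by "either", inhibited by "both".
    return threshold_unit([either, both], [1, -1], threshold=1)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

The capability here comes from the wiring rather than from any individual unit, which is the premise behind building large networks of virtual neurons.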

Pattern recognition

The most common use for neural technology today is in pattern recognition applications. These include software that performs speech recognition, text scanning, and identification. Pattern recognition is what humans do best; in fact, it comprises the bulk of our neural circuitry [1]. One example of pattern recognition in computers is facial recognition. Kurzweil writes that “recognizing human faces has long been thought to be an impressive human task beyond the capabilities of a computer, yet there are now automated check-cashing machines, using neural net software developed by a small New England company called Miros, that verify the identity of the customer by recognizing his or her face” [1]. In this instance, Miros engineers are working to make security more efficient. A task that once required a human to perform reliably is now entrusted to a machine.

Another example of successful engineering in neural networks is optical character recognition, or OCR. Today, processing firms use traditional image scanners with OCR software to produce digital versions of printed text. Although older, algorithmic versions of OCR software were often inaccurate and hard to use, current software “comes very close to human performance in identifying sloppily handwritten print” [1]. This kind of software is being used to digitize millions of books worldwide. Much of the research for this article was done with access to USC’s electronic reference library, which spans every academic topic and has full-text archives of hundreds of technical periodicals and thousands of books. The size of the online collection grows every day, as new resources are scanned and “read” by the software.
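The idea of learning to recognize characters, rather than following hand-written rules, can be illustrated with a very small Python sketch. This is not how commercial OCR or face recognition software works; it is a single simulated neuron trained with the classic perceptron learning rule, and the 3x3 pixel glyphs, the function names, and the number of training passes are all assumptions chosen for illustration. The neuron is shown one clean "T" and one clean "L", adjusts its connection weights whenever it guesses wrong, and is then asked to classify smudged versions it has never seen.

```python
# 3x3 pixel glyphs, read row by row (1 = ink, 0 = blank). Illustrative only.
T_GLYPH = [1, 1, 1,
           0, 1, 0,
           0, 1, 0]
L_GLYPH = [1, 0, 0,
           1, 0, 0,
           1, 1, 1]


def predict(weights, bias, pixels):
    """Fire (1) if the weighted pixel sum plus bias is positive, else stay silent (0)."""
    total = sum(w * p for w, p in zip(weights, pixels)) + bias
    return 1 if total > 0 else 0


def train(examples, epochs=10):
    """Perceptron rule: nudge the weights toward the target whenever a guess is wrong."""
    weights = [0] * 9
    bias = 0
    for _ in range(epochs):
        for pixels, target in examples:
            error = target - predict(weights, bias, pixels)  # -1, 0, or +1
            weights = [w + error * p for w, p in zip(weights, pixels)]
            bias += error
    return weights, bias


def classify(weights, bias, pixels):
    return "T" if predict(weights, bias, pixels) == 1 else "L"


weights, bias = train([(T_GLYPH, 1), (L_GLYPH, 0)])

# Smudged glyphs: one extra pixel of ink that was not in the training examples.
smudged_t = [1, 1, 1,
             0, 1, 0,
             0, 1, 1]
smudged_l = [1, 1, 0,
             1, 0, 0,
             1, 1, 1]
print(classify(weights, bias, smudged_t))
print(classify(weights, bias, smudged_l))
```

Nothing in the code describes what a "T" looks like; the distinction ends up stored in the learned weights, which is what lets the neuron tolerate a stray pixel. A single neuron of this kind can only separate simple patterns, so practical systems rely on much larger networks.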

Where do we go from here?

The next step is to integrate our shiny new synthetic neural circuits into the biological circuits from which they were copied. Remember, those silicon neurons are more than a million times faster than the old biological neurons, so this integration could be a great advancement for humans. Although this may sound like a science fiction fantasy, this “neural engineering” task is well underway. Researchers with USC’s Neural Computation program have recently developed an artificial hippocampus (a section of the brain devoted to sorting out new memories and emotion). The silicon chip duplicates the function of a normal hippocampus, and is intended to be used for patients with brain damage in that area. They expect to begin testing the device soon.