In Spring 2008, Matthew was a sophomore at USC’s Viterbi School of Engineering and College of Letters, Arts and Sciences, set to graduate in May 2010 with degrees in Computer Engineering and Computer Science and in East Asian Languages and Cultures. After graduation, he planned to pursue a graduate degree in Computer Science.

The microprocessor can be considered one of the greatest inventions of the twentieth century, replacing an entire room of computer equipment with a single chip. The fundamental operations of a microprocessor are basic, yet it has allowed so much to be accomplished. As transistors, the building blocks of microprocessors, approach their minimum size limits, creative ways to continue increasing computing power have emerged. These include technologies such as pipelining and the multi-core paradigm. The microprocessor has proven to be a versatile invention, branching out into numerous fields outside the personal computer.

Introduction

Microprocessors have revolutionized the world, especially in the area of electronics. A myriad of modern items ranging from cell phones to digital watches, elevators to washing machines contain microprocessors. It is incredible that, just a few decades ago, the microprocessor did not even exist, and yet today it can be found almost anywhere.

What is a “Microprocessor”?

A microprocessor is essentially an entire basic computer fitted on a single chip [1]. Sure, computers purchased today usually come with peripherals like monitors, hard drives, and DVD drives, but the most important component of the system is the microprocessor. You most likely have heard of companies such as Intel or AMD, and probably even have some version of one of their microprocessors inside your desktop or laptop, but what is this device? The microprocessor’s job is essentially to do all the calculations and computations inside that system. At a fundamental level, computers accomplish these tasks by controlling the flow of electric current through a circuit.

From Tubes to Transistors

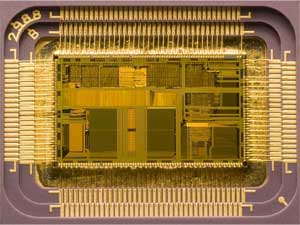

Basic computers that could do the same thing as the microprocessor have been around for a long time, but it wasn’t until the invention of the transistor that microprocessors could be made. A transistor is essentially a switching device that either allows or blocks the flow of electric current, and many transistors working together make up the processor [2]. Before transistors, computers were gigantic machines taking up entire rooms. Instead of using transistors as switching devices, they used large and inefficient “vacuum tubes” [2]. The invention of the microprocessor was so amazing because it allowed one chip to replace entire rooms full of computer equipment (Fig. 1).

The first microprocessor was the Intel 4004, created in 1971 primarily for use in calculators [3]. By today’s standards, this microprocessor would be considered inadequate, but at the time it was state-of-the-art. The 4-bit processor was made up of 2,300 transistors and had a clock speed of 108 kHz [4]. Keep in mind that modern processors are 64-bit, are made up of billions of transistors, and have clock speeds thousands of times higher.

Where are Microprocessors Used?

First, there is the simple answer: inside your computer. You’ve probably heard of the Intel “Pentium” series, as most consumer computers utilize some version of Intel microprocessors. Besides the obvious, microprocessors are used in many other places. In fact, it is estimated that 98% of microprocessors are not used in personal computers!

The vast majority of microprocessors can be found in embedded systems, which are a “combination of computer hardware and software, and perhaps additional mechanical or other parts, designed to perform a dedicated function” and they are found practically everywhere [5]. Cell phones, mp3 players, video game consoles, washing machines, microwaves, cars, televisions, and others all contain some type of embedded system with a microprocessor inside. The impact of the invention of the microprocessor on the world can be seen in the fact that practically every modern electronic device is an example of an embedded system [5].

How Microprocessors Work

Most modern microprocessors are created using the “von Neumann Architecture” which means that they only perform three tasks – fetch, decode, and execute instructions [3]. “Fetch” means the computer can get an instruction stored in binary form from some type of memory such as Random Access Memory (RAM), where a list of instructions and data are stored.

The computer then “decodes” these instructions, determining what each instruction is asking it to do by examining its sequence of 0’s and 1’s, a purely binary form of data. The binary values must be interpreted correctly so that the proper function is carried out. Instructions usually have something called an “opcode” in binary that tells the processor what type of instruction it is, along with other necessary parameters [2].
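To make the idea of an opcode concrete, here is a minimal sketch of decoding. The 8-bit instruction layout and the opcode table are hypothetical, invented only for illustration; real processors define their own, usually more elaborate, encodings.

```python
# Hypothetical 8-bit instruction format (an assumption, not a real chip's):
#   bits 7-4 hold the opcode, bits 3-0 hold a small operand.
OPCODES = {0b0001: "LOAD", 0b0010: "ADD", 0b0011: "STORE", 0b1111: "HALT"}

def decode(instruction):
    """Split an 8-bit instruction into (operation name, operand)."""
    opcode = (instruction >> 4) & 0xF   # upper four bits select the operation
    operand = instruction & 0xF         # lower four bits carry the parameter
    return OPCODES[opcode], operand

print(decode(0b00100101))  # ('ADD', 5)
```

The binary value 0010 0101 splits into the opcode 0010, which this made-up table maps to ADD, and the operand 0101, which is 5.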

When the microprocessor knows what needs to be done from decoding the binary instruction, it computes or “executes” the instruction in the Arithmetic Logic Unit or ALU. As its name implies, the ALU is responsible for two types of operation – arithmetic and logic. Everything that computers are now capable of doing, from computing the millionth digit of Pi to rendering the intense graphics of modern video games, derives from these basic building blocks [6]. However, there is one more fundamental component of the processor that is vital to its operation, and that is what is known as the “clock.”

The clock produces a regular “clock signal,” and the processor advances its work one step at every clock cycle. The purpose of the clock is “to organize the movement of information inside the computer so that each component has time to work, and is measured in Hertz, or cycles per second” [7]. It basically synchronizes processor operations; without the clock, data flow within the processor would be a mess. Within a processor, certain operations compute faster than others. The clock makes sure that each computation has enough time to finish before moving on to the next in order to keep the data flow synchronous. Because clock speed, among other things, determines how fast the processor can execute instructions, it is often a reasonable indicator of overall speed. Microprocessors that have faster clocks can often do more computations per second than those with slower clocks; modern processors run so fast that their speeds are measured in Gigahertz, or billions of cycles per second.
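Since clock speed is measured in cycles per second, the length of a single cycle is simply its reciprocal. The short sketch below works this out for the 108 kHz figure quoted earlier for the Intel 4004 and for an assumed 3 GHz clock, chosen here only as a round example of a Gigahertz-range processor.

```python
def cycle_time_ns(clock_hz):
    """Duration of one clock cycle in nanoseconds for a given clock rate."""
    return 1e9 / clock_hz

# 108 kHz is the Intel 4004 figure from the text; 3 GHz is an assumed example.
print(f"{cycle_time_ns(108e3):.0f} ns")  # 9259 ns
print(f"{cycle_time_ns(3e9):.2f} ns")    # 0.33 ns
```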

Basic Parts of a Microprocessor

The microprocessor contains two fundamental parts, the “Control Unit” and the “Arithmetic Logic Unit” [6].

The Control Unit directs data flow in the computer. It contains the decoder that interprets instructions, telling the processor what it needs to do (Fig. 2). Also within the Control Unit are registers: high-speed, temporary storage spaces for holding instructions and data that the processor already fetched. There are also other specialized registers such as the “Program Counter” which is used to hold the address in memory of the next instruction to be performed [6].

The Arithmetic Logic Unit, or ALU, performs the computations necessary for the computer to function. There are two main types of computations that it is capable of doing – arithmetic and logic. The arithmetic portion can do various calculations such as addition, subtraction, multiplication, and division. Similarly, the logic portion does comparisons such as equal or not equal [6].
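The division of labor between the two parts can be sketched as a toy simulation. Everything here is a simplification made up for illustration: the instruction names, the single “accumulator” register, and the use of readable tuples instead of binary instructions are all assumptions, not a model of any real processor.

```python
def alu(operation, a, b):
    """Toy ALU: two arithmetic operations and one logic comparison."""
    if operation == "ADD":
        return a + b
    if operation == "SUB":
        return a - b
    if operation == "EQ":
        return int(a == b)   # logic result: 1 for equal, 0 for not equal
    raise ValueError(f"unknown ALU operation: {operation}")

def run(program):
    """Toy control unit: step through (opcode, operand) pairs until HALT."""
    pc = 0    # program counter: address of the next instruction
    acc = 0   # accumulator register holding the working value
    while True:
        opcode, operand = program[pc]   # fetch the instruction at pc
        pc += 1                         # advance to the next address
        if opcode == "HALT":            # decode: decide what to do
            return acc
        if opcode == "LOAD":
            acc = operand               # place a value in the register
        else:
            acc = alu(opcode, acc, operand)   # execute in the ALU

program = [("LOAD", 7), ("ADD", 5), ("SUB", 2), ("EQ", 10), ("HALT", 0)]
print(run(program))  # 1, because 7 + 5 - 2 equals 10
```

The loop plays the role of the Control Unit, keeping track of the Program Counter and deciding what each instruction means, while `alu` does only the arithmetic and comparisons.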

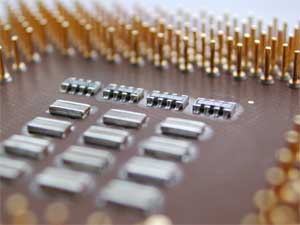

For speed optimization purposes, most microprocessors also include what is called “cache memory.” Recall that the processor needs to fetch data from main memory before it can decode and execute the instructions. The problem with this setup is that getting data from main memory is relatively slow because the memory is physically farther from the processor. Main memory is also much larger than the microprocessor and is made of slower parts, making it slower to search through [7]. This is where cache memory comes in. Cache memory is similar to RAM, but it is faster, smaller, and a part of the microprocessor. The main purpose of cache memory is speed. Data in the cache memory can be accessed faster than data in RAM because cache memory is physically closer to the processor core. The memory is also smaller, and is made of more advanced and expensive material [7]. So, by putting data that is accessed frequently in cache memory, data access time will decrease, increasing overall performance.
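The benefit of keeping frequently used data close by can be illustrated with a small simulation. The access times (1 ns for the cache, 100 ns for main memory) and the “least recently used” replacement rule are assumptions picked for the example; real caches vary widely in both timing and policy.

```python
from collections import OrderedDict

CACHE_NS = 1      # assumed time for a cache hit
MEMORY_NS = 100   # assumed time for a trip to main memory

def total_access_time(addresses, cache_size):
    """Total time to read the addresses through a small LRU cache."""
    cache = OrderedDict()   # cached addresses, least recently used first
    total = 0
    for addr in addresses:
        if addr in cache:
            cache.move_to_end(addr)         # mark as most recently used
            total += CACHE_NS               # hit: served from the cache
        else:
            total += MEMORY_NS              # miss: fetched from main memory
            cache[addr] = True              # keep a copy for next time
            if len(cache) > cache_size:
                cache.popitem(last=False)   # evict the least recently used
    return total

reads = [0, 1, 0, 1, 0, 1, 2, 0]
print(total_access_time(reads, cache_size=2))  # 404
print(total_access_time(reads, cache_size=0))  # 800
```

With room for just two addresses, the repeated reads of addresses 0 and 1 become hits, and the total time under these assumed latencies is roughly halved.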

Modern Microprocessor Architecture

Modern processors have come a long way. They are vastly faster, smaller, and more efficient than their predecessors. Today’s processors have clock speeds on the order of billions of cycles per second and can fit billions of transistors on a single chip. In contrast, the first microprocessors had clock speeds of only hundreds of thousands of cycles per second, and only a couple thousand transistors could fit onto a chip.

A few years can make a big difference in the world of processors, and Moore’s law accurately depicts this. About 40 years ago Gordon Moore, co-founder of Intel, stated that the transistor density on a chip roughly doubles every two years [3]. Remarkably, the processor industry has largely followed this trend.
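The doubling rule is easy to state as a formula: after a given number of years, the count is multiplied by 2 raised to the number of elapsed doubling periods. The sketch below applies it to the 4004’s 2,300 transistors from 1971. The results are projections under the rule, not historical transistor counts, and the function name is made up for this example.

```python
def projected_transistors(start_count, start_year, year, doubling_years=2.0):
    """Project a transistor count assuming one doubling every doubling_years."""
    doublings = (year - start_year) / doubling_years
    return start_count * 2 ** doublings

# Starting point taken from the text: 2,300 transistors in 1971.
print(round(projected_transistors(2300, 1971, 1981)))  # 73600
print(round(projected_transistors(2300, 1971, 2011)))  # 2411724800
```

Five doublings in ten years give a factor of 32, while twenty doublings in forty years give a factor of about one million, which is how a rule that sounds modest leads to chips with billions of transistors.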

Transistor count is directly related to computational power, and continuous growth in transistor density has made microprocessors significantly more powerful. Until recently, increasing the processor’s capabilities was mainly the result of shrinking transistors and packing more and more of them onto a chip. The problem is that transistors and components on a chip have become so small that getting any smaller will soon become physically impossible [3]. To overcome this challenge, some processor manufacturing companies have turned to more creative designs to solve the problem.

One technique that modern microprocessors use for increasing efficiency is known as “pipelining.” Pipelining implements a “technique in which multiple instructions are overlapped in execution, much like an assembly line” [2]. Pipelining greatly speeds up processor performance by taking advantage of tasks that can be done in parallel. Recall that processors can only fetch, decode, and execute; without pipelining, a processor would have to fetch an instruction, decode it, and execute it, before moving on to the next instruction.

Processors can function seamlessly without pipelining, but notice that there is significant idle time between the stages, which is inefficient. With pipelining, the processor can fetch the first instruction, and then decode it while simultaneously fetching the second instruction. Finally the processor can execute the first instruction while decoding the second and fetching the third instruction. The pipelining method increases efficiency, and therefore speed, by using parts of the processor that are traditionally not in use to get a head start on the next task. While pipelining does not increase the speed of just one fetch-decode-execute cycle, it does increase the speed of multiple cycles, increasing the throughput of a system [2].
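The gain in throughput can be counted directly. This sketch assumes an idealized three-stage pipeline in which every stage takes exactly one clock cycle and nothing ever stalls; real pipelines have more stages and must handle dependencies between instructions.

```python
STAGES = ["fetch", "decode", "execute"]

def cycles_without_pipeline(n):
    """Each instruction finishes all stages before the next one starts."""
    return n * len(STAGES)

def cycles_with_pipeline(n):
    """The first instruction fills the pipeline; then one finishes per cycle."""
    return len(STAGES) + (n - 1) if n > 0 else 0

def pipeline_schedule(n):
    """For each cycle, list the (stage, instruction number) pairs in flight."""
    schedule = []
    for cycle in range(cycles_with_pipeline(n)):
        active = [(name, cycle - s) for s, name in enumerate(STAGES)
                  if 0 <= cycle - s < n]
        schedule.append(active)
    return schedule

print(cycles_without_pipeline(5), cycles_with_pipeline(5))  # 15 7
for cycle, active in enumerate(pipeline_schedule(3)):
    print(cycle, active)
```

In the printed schedule, cycle 2 shows instruction 0 executing while instruction 1 is decoded and instruction 2 is fetched, which is the overlap described above. Each individual instruction still takes three cycles, but five instructions finish in 7 cycles instead of 15.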

Another technique recently used to increase processor performance is known as parallel processing. “Multi-core” processors are an example of this [6]. What makes multi-core processors so beneficial is that they can split problems into smaller, independent subproblems that can be run at the same time, similar to the concept of pipelining. It is the same as having multiple processors team up to work on a single problem. Many processor cores, each capable of pipelining, can further divide tasks into smaller subproblems to greatly increase speed. Depending on the desired processor architecture, the division of the program is a task left to the programmer, compiler, and hardware.
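The split-and-combine pattern can be sketched in a few lines. This is only an illustration of how a programmer might divide a problem into independent subproblems: the function name and chunking scheme are made up for the example, and it uses threads for simplicity, which in standard Python generally will not spread pure-Python computation across several cores. Python’s `ProcessPoolExecutor` offers the same `map` interface for work that should run on separate cores.

```python
from concurrent.futures import ThreadPoolExecutor

def parallel_sum(numbers, workers=4):
    """Sum a list by splitting it into chunks handled by separate workers."""
    chunk = max(1, -(-len(numbers) // workers))   # ceiling division
    pieces = [numbers[i:i + chunk] for i in range(0, len(numbers), chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(sum, pieces))   # independent subproblems
    return sum(partial_sums)                         # combine the results

print(parallel_sum(list(range(1, 101))))  # 5050
```

The subproblems here are independent because no chunk needs the result of another; only the final combining step has to wait for all of them.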

There are more benefits to multi-core design than just speed increases, however. While speed is important, processor designers also try to reduce power usage and heat. Multi-core processors allow a reduction in transistor density compared with single-core processors because they work more efficiently. Since two cores can divide and work on a single task, each core’s clock speed, which affects heat dissipation through frequency of movement, can be lower than that of a single-core processor doing equal computation in an equal amount of time. Lower transistor density and clock speeds mean less power consumption and less heat dissipation, which are both beneficial to the field of microprocessor design [8].

Conclusion

Computing technology has come a long way since the days of punch cards or bulky vacuum tubes. The exponential growth of microprocessor transistor density outlined by Moore’s law shows how, in just a few years, technology can rapidly accelerate to new levels. Although the microprocessor is a relatively young invention, it has already saturated the electronics market and has proven essential to modern conveniences. If microprocessor technology continues at this rate, the future will assuredly bring drastic advances in computing power and efficiency.

References

- [1] Paul E. Ceruzzi. A History of Modern Computing, second edition. Cambridge, MA: The MIT Press, 2003.

- [2] David A. Patterson and John L. Hennessy. Computer Organization and Design: The Hardware/Software Interface. Burlington, MA: Morgan Kaufmann Publishers, 2007.

- [3] Eric G. Swedin and David L. Ferro. Computers: The Life Story of a Technology. Westport, CT: Greenwood Press, 2005.

- [4] W. Warner. “Great moments in microprocessor history: The history of the micro from the vacuum tube to today’s dual-core multithreaded madness.” IBM. Internet: http://www.ibm.com/developerworks/library/pa-microhist.html?ca=dgr-mw08MicroHistory. 22 Dec. 2004 [1 April 2008].

- [5] Michael Barr. “Embedded Systems Glossary.” Netrino: The Embedded Systems Experts. Internet: http://www.netrino.com/Embedded-Systems/Glossary, 1 April 2008.

- [6] Larry Long and Nancy Long. Computers: Information Technology in Perspective. Upper Saddle River, NJ: Prentice Hall, 2002.

- [7] Jim Keogh. The Essential Guide to Computer Hardware. Upper Saddle River, NJ: Prentice Hall, 2002.

- [8] Geoff Koch. “Discovering Multi-Core: Extending the Benefits of Moore’s Law.” Technology@Intel Magazine. Internet: http://www.intel.com/technology/magazine/computing/multi-core-0705.pdf, July 2005 [1 April 2008].

- [9] Jim Turley. “The Two Percent Solution.” Embedded.com. Internet: http://www.embedded.com/shared/printableArticle.jhtml?articleID=9900861, 18 Dec. 2002 [1 April 2008].